I've been adding bits, as foreshadowed, to the latest data page, with another rearrangement. I have added the maintained monthly plotter, and have used it as a framework for updated back trend plots, i.e. plots of the trend from the x-axis date to the present, which I've used to follow the recession of the Pause. It's here, with more detail at the original posts. A button switches between the two modes; they use the same updated data. There is also a data button so you can see the original numerical data.

I did a calc of the new ERSSTv4 global average for Bob Tisdale's post, and I'll add that to the maintained set.

I have also included the WebGL maps (updated daily) of daily surface data, currently for the days of this year, though I may extend that. For recent days, it also shows the global average. For the last week or so, these daily averages have been exceptionally warm, which balances the cold earlier in the month.

So that's probably it for 2014. A Merry Christmas and Happy New Year to all readers.

Wednesday, December 24, 2014

Tuesday, December 16, 2014

December update on 2014 warmth.

NOAA has posted their report on November 2014 (h/t DK). It shows a global anomaly relative to 1901-2000 of 0.65°C. This is down from October's 0.74°C. TempLS grid was down 0.11°C, which looks like very good agreement, but is something of a break with the recent eerily close tracking.

NOAA may take a few days to update the detailed MLOST file that I normally use (base 1961-90), so, with Christmas coming, I'll use a synthetic value, made by dropping the October value by the same 0.09°C, to produce the last of the anticipatory posts about record prospects.

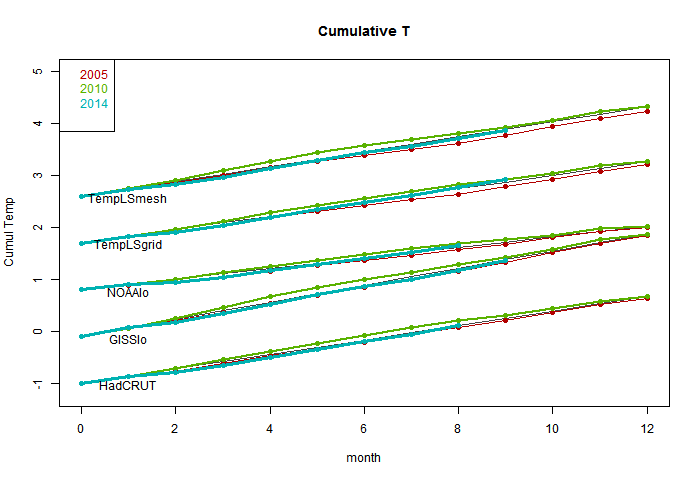

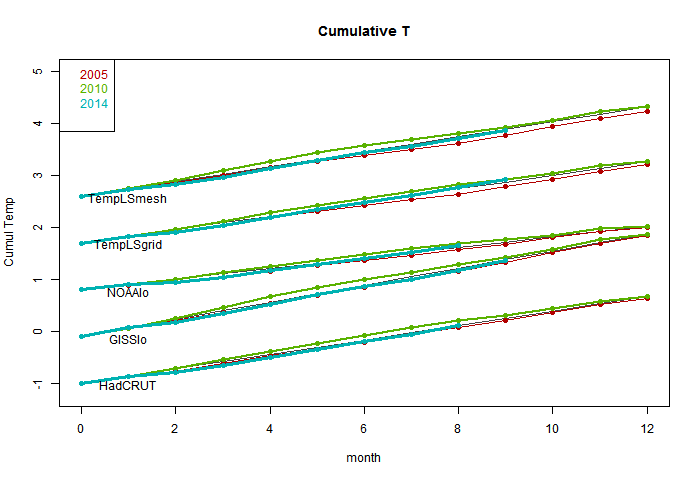

I'm following the format of an earlier post, with sequel here. You can click buttons to rotate through datasets (HAD 4, GISS, NOAA, TempLS mesh and grid, and HADSST3). I haven't shown the satellite troposphere indices, because these are nowhere near a record. The plot shows cumulative sums of monthly averages relative to 2010, the next highest year. I see that NOAA has a similar plot, but with the average to date rather than the total. This is just a scale difference, which becomes small near the end of the year.

The first highlight is HADSST3, which is way ahead of 2010. In fact, for HADSST3, 1998 was higher still, at 0.43°C, but that level too should be exceeded. This emphasises that high SST was the driver for 2014.

GISS is less clear; Nov 2014 was cooler while Nov 2010 was warm, so its prospects receded slightly. Meanwhile, my NCEP/NCAR daily index showed the first week or so of December as very cool, but then warmer. So GISS is no certainty. However, Dec 2010 was quite cool. NOAA is well ahead, and while there is no November data for HADCRUT 4 yet, it is also well placed.

The plot is below the jump:

The index will be a record if it ends the year above the axis. Months warmer than the 2010 average make the line head upwards.

Use the buttons to click through.

Sunday, December 14, 2014

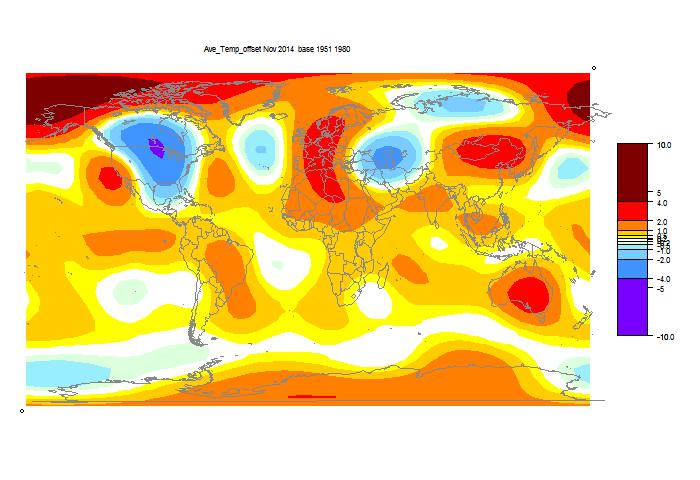

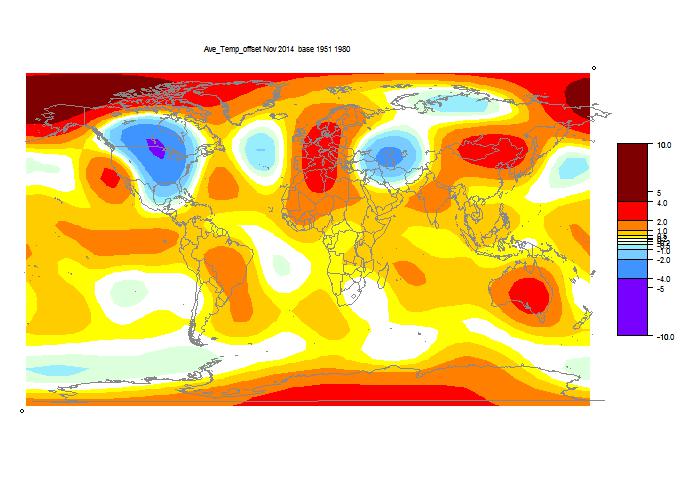

November was cooler - GISS down 0.11°C

GISS has reported for November 2014, down from 0.76°C to 0.65°C. The title alludes to my post of 4 Dec, which reported a similar drop by TempLS Mesh. That drop has reduced somewhat as more data arrived. I have been following these monthly events with added interest, because it is the first real test of early TempLS mesh predictions (described here), and was foretold by another index I have been calculating (reanalysis NCEP/NCAR). This reports daily, and indicated a considerable cooling in November.

As usual, I have also done a TempLS Grid calc. As explained here, I expect the mesh calc to more closely emulate GISS, while the grid calc should be closer to the NOAA and HADCRUT indices. TempLS grid did show a greater reduction, from 0.631°C to 0.519°C.

When NOAA comes out, I'll post on the likelihood of a record this year. The NCEP/NCAR index has been quite cool in December as well, so a GISS record is in the balance. NOAA and HADCRUT are currently ahead by a greater margin, but TempLS suggests they may see a greater reduction.

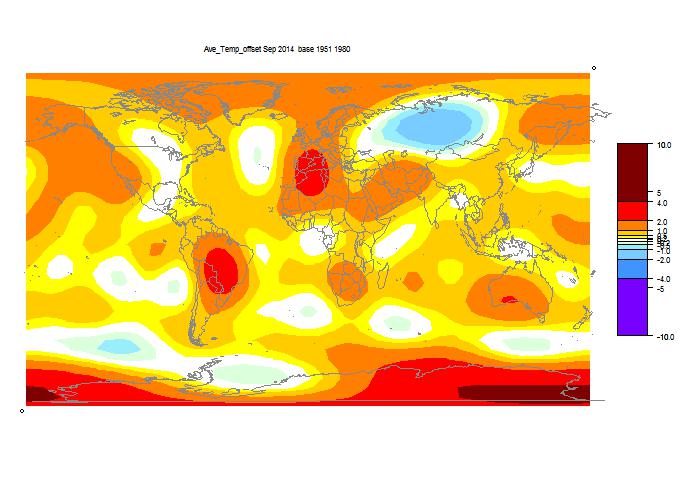

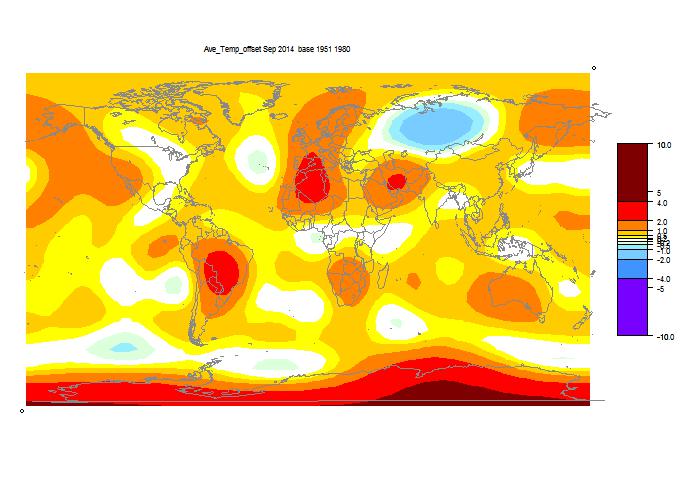

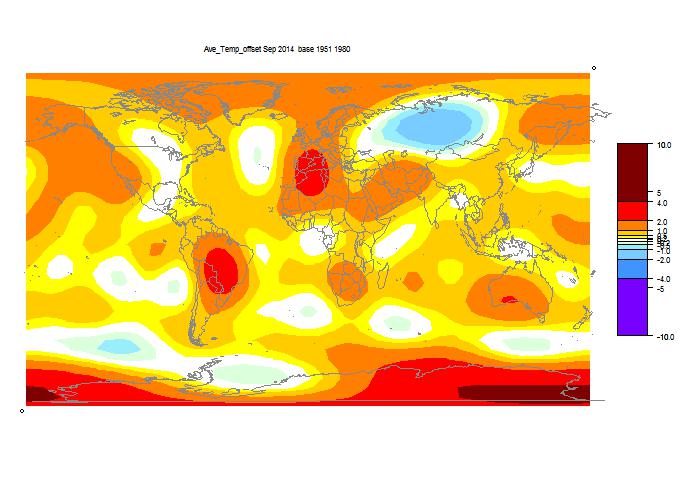

Details of the GISS map for the month, and comparison with TempLS, are below the jump.

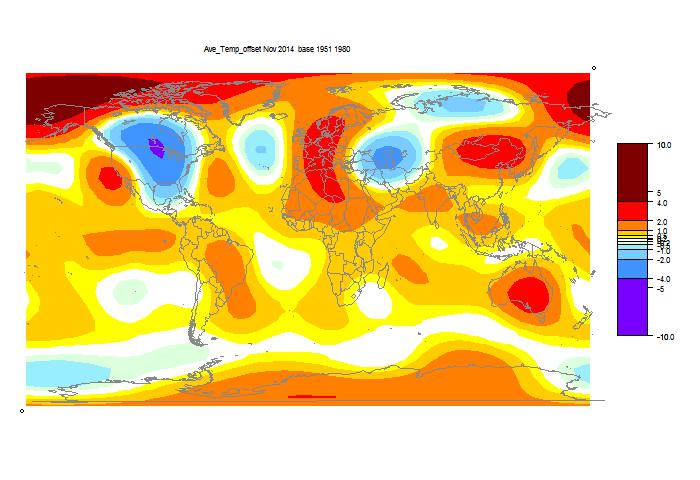

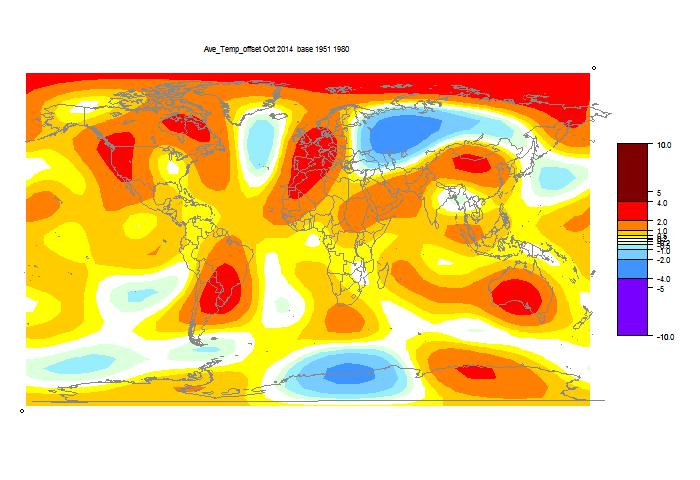

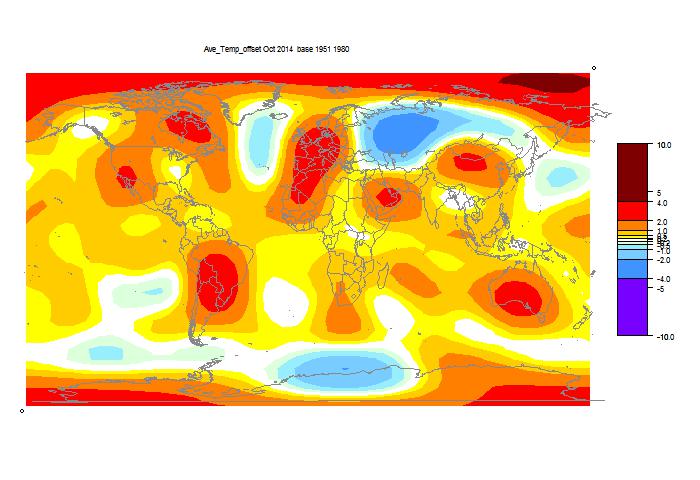

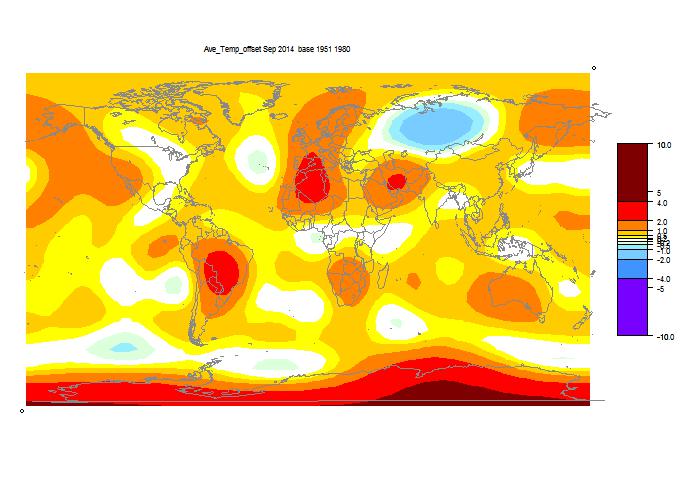

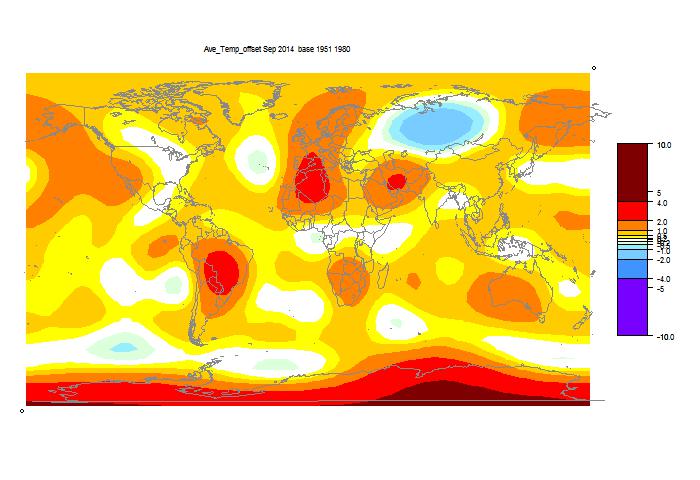

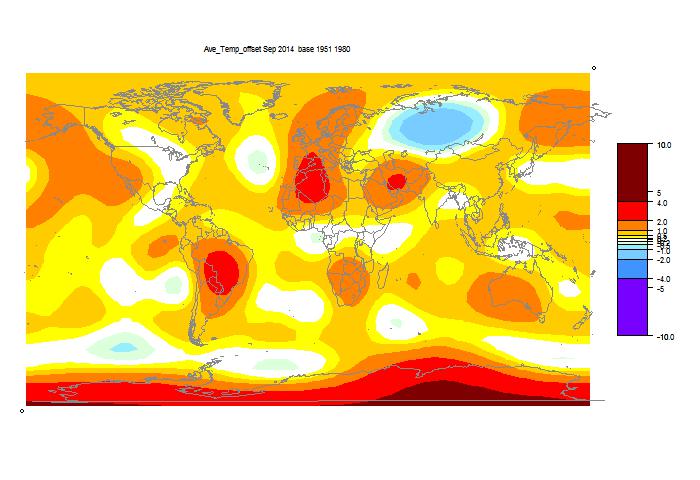

And here, with the same scale and color scheme, is the earlier mesh weighted TempLS map:

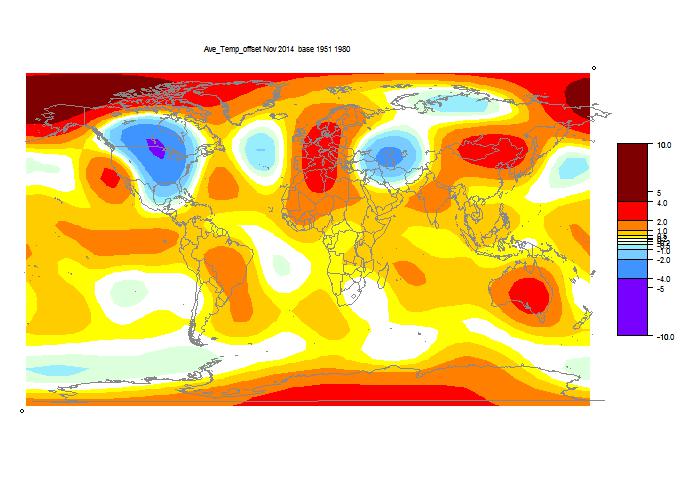

And finally, here is the TempLS grid weighting map:

Thursday, December 4, 2014

November was cooler - latest TempLS

Since I wrote in October about a new scheme for early mesh-based TempLS reporting, I've been looking forward to producing such an early result. Last month didn't work, because GHCN was late. But this month everything is on time. For me, there is added interest, because I developed the daily NCEP/NCAR based index, and it has been suggesting a perhaps unexpected drop in November temperature.

The early TempLS mesh report is now out, and it does show a corresponding drop, from 0.647°C (Oct) to 0.557°C (Nov, base 1961-1990). The October value was also revised down a little, which reduced the difference slightly.

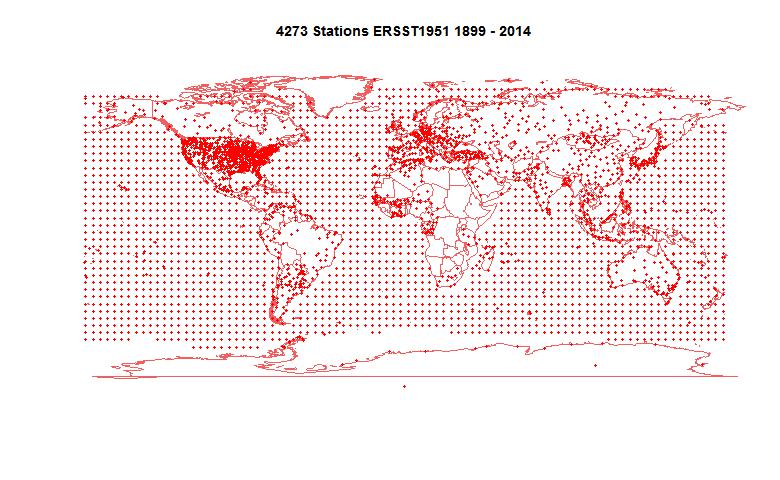

It is indeed a very early report, and will change. It is based on 3108 stations, probably about 70% of the final count. There is essentially no data from Canada, China, Australia, and most of S America and Africa. So it's too early to have much faith in the regional map, but the cold in the US certainly showed up.

Both satellite indices showed small reductions, with RSS going from 0.274°C to 0.246°C, and UAH similar. In terms of a record warm 2014, I think the likelihood is essentially unchanged.

Update 8/12: With most GHCN data now in, TempLS has risen a bit, to 0.579°C. But the NCEP/NCAR measure went the other way. It stayed cold in November, with the average down to 0.106°C, and December (to the 4th) has been cold too.

Wednesday, December 3, 2014

Reanalysis revisited

I have been working with climate reanalysis. I found some more resources, mainly through the Univ Maine Climate Change Institute. They have a collection of reanalysis offerings, some of which are just remappings of flat plots on the globe. But they have useful information collections, and also guides. A comprehensive guide page is here. They have a collection of GHCN daily data here, convenient, although not up to date. They have globe maps of daily temperature, as I do here, but again with a considerable lag. And they have a section on monthly reanalysis time series here, which is the focus of this post.

They let you draw graphs of annual data, and plots of each month over years, but frustratingly, not a monthly plot. This may be to avoid including seasonal variation; they are not anomalies. However, they do give tables of the monthly average temperature for various reanalysis methods, to only 1 decimal precision :(. Despite that limitation, it is useful for me, because I had wondered whether the convenience and currentness of the NCEP/NCAR data was undermined by its status as a first generation product. I now think not; the integrated global temperature anomaly is quite similar to more advanced products (MERRA is something of an outlier). More below the fold.

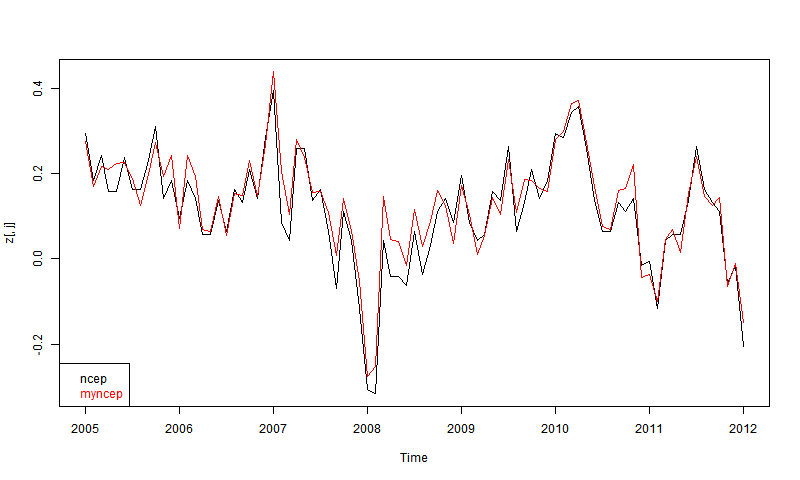

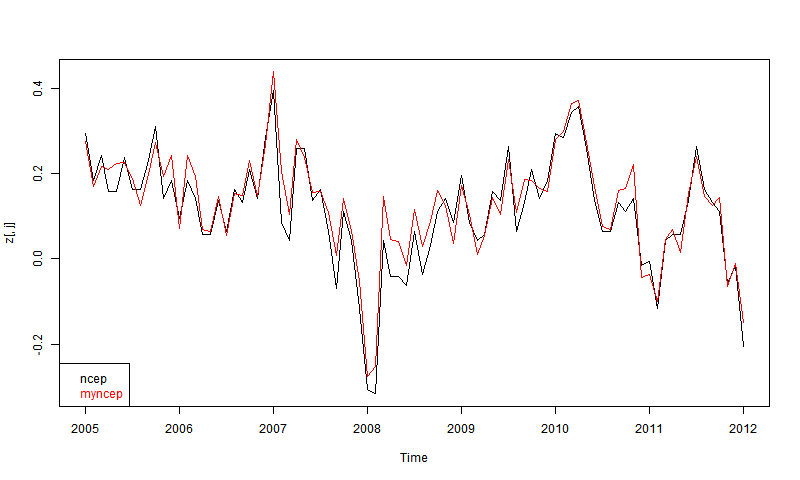

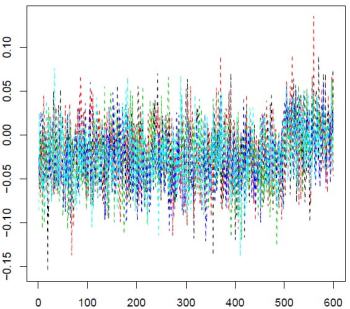

The first thing I wanted to check, since they give NCEP/NCAR v1, is whether they get the same answers as I did by integrating the grids. Again, CCI data only goes to 2012. Here is the plot for the most recent 8 years, set to a 1994-2013 anomaly base (actually 1994-2012 for the CCI version).

At first I was disappointed that they didn't overlay more closely. Then I remembered their 1 decimal accuracy, and then it seemed quite good agreement. You don't see 0.1 steps, because I've subtracted each month's mean. There may also be discrepancies because my numbers are likely more recent.
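Subtracting each calendar month's mean is what hides the 0.1°C steps. Here is a minimal Python sketch of that step, assuming the CCI table has already been read into a dict keyed by (year, month); the function name and data layout are illustrative only, not CCI's format or the code behind the plot.

```python
from statistics import mean

def monthly_anomalies(series, base_start, base_end):
    """series: dict mapping (year, month) -> temperature in deg C.

    Returns a dict of anomalies: each value minus the mean of the same
    calendar month over the base years base_start..base_end inclusive.
    Months with no base-period data are dropped.
    """
    clim = {}
    for m in range(1, 13):
        base_vals = [v for (y, mm), v in series.items()
                     if mm == m and base_start <= y <= base_end]
        if base_vals:
            clim[m] = mean(base_vals)
    return {(y, m): v - clim[m] for (y, m), v in series.items() if m in clim}
```

Even when the inputs are rounded to 0.1, the monthly means usually are not, so the anomalies come out off the 0.1 grid (e.g. January values 12.3, 12.4, 12.4 give anomalies of about -0.067, 0.033, 0.033).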

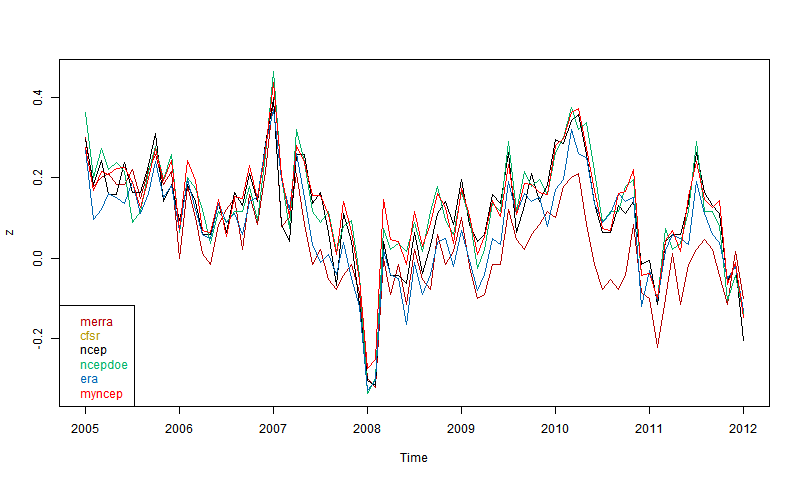

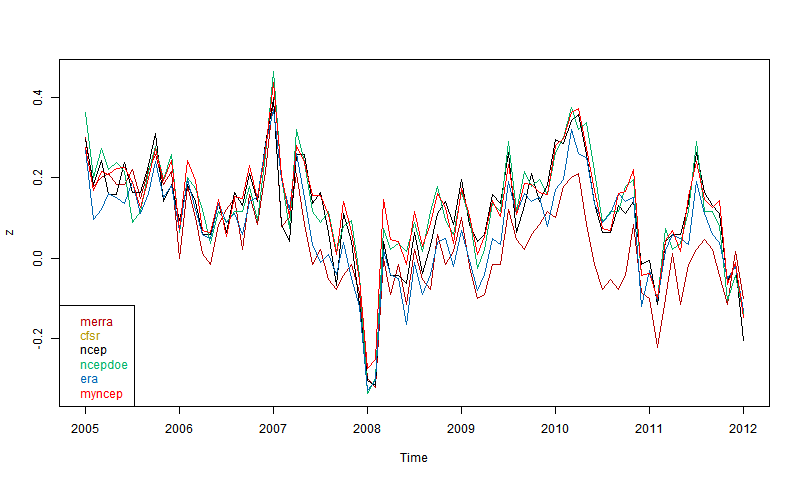

So then I plotted 5 reanalyses as shown by CCI. These are the main ones that go to near present (usually some time in 2012). You can read about them in the CCI guide. ERA is ERA-Interim. Ncepdoe is NCEP/DOE.

Again, quite good agreement if you allow for the limited precision. It is surprising that MERRA, supposed to be one of the best, seems most deviant, even though others like CFSR are also well-regarded. It may of course be that MERRA is right. But anyway, there is nothing in the plot to disqualify NCEP/NCAR.

So I'll stick with it. It seems to be the most current, and I appreciate the 8 Mb download (for daily update). CFSR, for example, seems to come in multi-Tb chunks, which for me would be a multi-year task just to download. Resolution is not an issue for a global average.

Monday, December 1, 2014

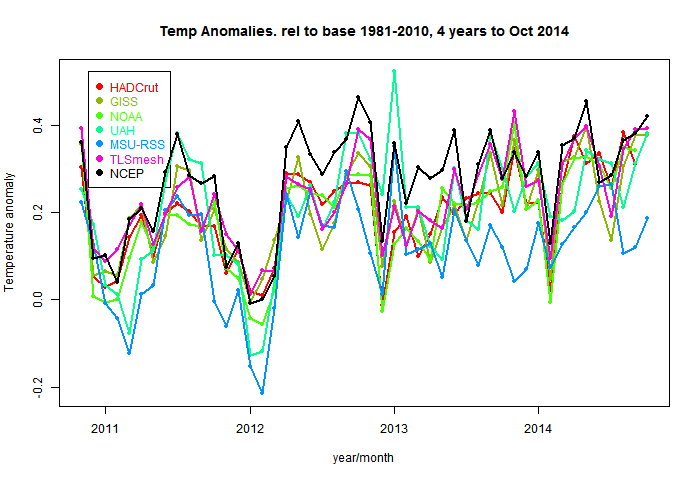

Maintained monthly active temperature plotter

This post follows on from a thought by commenter JCH. On the latest data page, I maintain an active graph of six recent temperature indices, set to a common anomaly base 1981-2010. But I actually maintain a file of about fifteen. JCH mentioned the difficulty of getting recent data for HADCRUT, for example. So I thought I should add some user facilities to that active graph to make use of this data.

I tried dynamic plotting once previously with annual data - it is the climate plotter page. It hasn't been much used, and I think I may have tried to cram too much into a small space. So I've been experimenting with different systems. I've learnt more about Javascript since then.

So the first addition is a panel for choosing which datasets to show. It has a floating legend, with buttons for changing color, asking for regression (OLS), or smoothing (12-month boxcar). If you ask for regression, a similar panel pops up, again with color, and with start and end time text boxes. Initially it sets these to the visible screen, but you can type in other times. If you press on any color button, another panel pops up with color choices. The OLS trend in °C/century for the stated interval is in the red-lined box.
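For readers unfamiliar with boxcar smoothing, here is a minimal Python sketch of a 12-month boxcar (moving average). It assumes a trailing window, so each output is the mean of that month and the previous 11; the plotter may centre its window differently, and the function name is illustrative.

```python
def boxcar(values, width=12):
    """Trailing moving average: out[i] is the mean of values[i-width+1 .. i].

    The first width-1 entries are None because the window isn't full yet.
    A running sum keeps this O(n) rather than O(n * width).
    """
    out = [None] * len(values)
    if len(values) < width:
        return out
    window_sum = sum(values[:width])
    out[width - 1] = window_sum / width
    for i in range(width, len(values)):
        # Slide the window: add the new month, drop the oldest one.
        window_sum += values[i] - values[i - width]
        out[i] = window_sum / width
    return out
```

A 12-month window also cancels any leftover annual cycle, which is one reason it suits monthly anomaly data.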

The original plot worked by mouse dragging. If you drag in the main space, the plot follows. But if you drag below or left of the axes, it stretches/shrinks along that axis. I've kept that, but added an alternative.

There is a faint line at about 45° from the origin. If you move the mouse in that region, you'll see faint numbers at each end of the x-axis. These are tentative years. If you click with Shift pressed, the plot adapts so that those become the endpoints. The scheme is similar to the triangle of the trend viewer, but backwards. Near the origin, you get short intervals in recent time; the scale of mouse movement gives better resolution here. Moving along the x-axis makes the start recede; moving along the diagonal makes both recede, keeping the interval short. In the upper triangle, it's similar with the y-limits. It's easier to try than to read.
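To make the triangle scheme concrete, here is a hypothetical mapping with the behaviour described: origin gives a short recent interval, the x-axis moves the start back, the diagonal moves both ends back together. The quadratic scaling (for finer control near the origin), the coordinate convention and the function name are all assumptions for illustration, not the plotter's actual code.

```python
def triangle_to_interval(x, y, now, span):
    """Hypothetical map from a mouse position in the lower triangle
    (0 <= y <= x <= 1, as fractions of the axis length from the origin)
    to a (start, end) pair of decimal years.

    Squaring the coordinates compresses movement near the origin, giving
    finer resolution for short, recent intervals. Because x >= y, the
    start is never later than the end.
    """
    if not (0.0 <= y <= x <= 1.0):
        raise ValueError("position must lie in the lower triangle")
    start = now - span * x * x
    end = now - span * y * y
    return start, end
```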

The Redraw button is hardly needed, because there is much automatic redraw. The Regress button forces a recalc when you've manually edited the text boxes for intervals. Each pop-up window has an exit button; the Legend button is the way to bring it back (it toggles). Each pop-up is draggable (gently).

More on regression - you can at any stage amend the text boxes with dates, and then click either the Regress button (main table) or the red-bordered cell containing the regression trend in °C/century to get a new plot with the specified period. By default the period will be set to the visible screen, which may include months in the future (but the trend will be calculated over actual data only). Trend lines will be shown.
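Here is a minimal Python sketch of the trend calculation described above: an OLS slope over a stated window, reported in °C/century, where months in the window without data (e.g. future months) simply don't contribute. Times are assumed to be decimal years and missing values are assumed to be None; the function name is illustrative, not the plotter's.

```python
def ols_trend_per_century(times, values, start, end):
    """OLS slope of values against time (decimal years), in deg C/century,
    using only observations with start <= t <= end and a non-missing value.
    """
    pts = [(t, v) for t, v in zip(times, values)
           if start <= t <= end and v is not None]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two observations in the window")
    tbar = sum(t for t, _ in pts) / n
    vbar = sum(v for _, v in pts) / n
    sxx = sum((t - tbar) ** 2 for t, _ in pts)
    sxy = sum((t - tbar) * (v - vbar) for t, v in pts)
    # Slope is in deg C per year; scale by 100 for deg C per century.
    return 100.0 * sxy / sxx
```

Setting the window wider than the data changes nothing, since only actual observations enter the sums.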

So here it is below the fold. It's still experimental, and feedback welcomed. When stable, I'll embed it in the page.

Sunday, November 30, 2014

Cooler November?

The main purpose of this post is to note that the daily NCEP data is now regularly updated here. As with TempLS mesh, there is a kind of Moyhu effect whereby when I set up a system like this and want to tell everyone about it, there is a hiatus in the data source. This time I think it is just Thanksgiving.

Anyway, the global story it tells is that there was a cool dip around Nov 13, at the height of the N America freeze, and a second a few days later. Currently (to Nov 24) the average anomaly for Nov is 0.157°C, compared with 0.281°C in October. I think this will pan out to November being about 0.1°C cooler than October in the surface temperature indices.

What does this mean for talk of a surface record 2014? I think it is neutral. To reach a record, month temperatures have to exceed, on average, the 2010 average, and it looks like November will be close to that number. For example, GISS October was 0.76°C; the 2010 average was 0.66°C. This may matter for GISS, which was only just above the 2010 average to date anyway. NOAA and HADCRUT have a greater margin.
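The record arithmetic can be made explicit. If the year's 12-month mean must beat the 2010 annual average, the remaining months must together average at least a certain amount. A minimal Python sketch; the function name is illustrative, and any monthly values fed to it would be the reader's own.

```python
def required_remaining_mean(months_so_far, target_annual):
    """Average anomaly the remaining months must exceed for the 12-month
    mean to beat target_annual (e.g. the 2010 annual average).

    Solves (sum(months_so_far) + (12 - n) * x) / 12 = target_annual for x.
    """
    n = len(months_so_far)
    if not 0 < n < 12:
        raise ValueError("need between 1 and 11 months of data")
    return (12 * target_annual - sum(months_so_far)) / (12 - n)
```

If every month so far has exactly matched the target, the remaining months need to do the same; every month above the target lowers the bar for the rest.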

Update: Three more days of data arrived, and still cool. The month average is now down to 0.135°C, a drop of about 0.15°C from October. That is negative for GISS record prospects.

Tuesday, November 25, 2014

Daily reanalysis NCEP/NCAR temperatures with WebGL.

In a previous post I described how a global index could be created simply by integrating the surface temperature data provided by NCEP/NCAR. This data is current to within the last few days, and I've described here how numerical data is maintained on the latest data page.

Since the data is gridded, I then set out to display the daily temperature anomalies (base 1994-2013) with WebGL, and that is shown here. I'm also planning to maintain this on the latest data page, if it does not slow loading too much. Currently the daily data is just for 2014, although that is easily extended.

So it's below the fold. As usual, the Earth is a trackball that you can drag, zoom (right mouse) and orient (button). I'm trying a new way of choosing dates. High on the right there is a tableau of small squares, each representing one day. Click on this to choose. It's all a bit small, but to the left of the Orient button, you'll see printed the date your mouse is on. So just move until the right day shows, and click.
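As an illustration of how such a tableau can map a square to a date, here is a hypothetical layout in Python: squares filled row by row from 1 January with a fixed number per row. The row length, ordering and function name are assumptions for the sketch; the page itself may lay the squares out differently.

```python
from datetime import date, timedelta

def cell_to_date(row, col, year, ncols):
    """Hypothetical tableau layout: day squares filled row by row from
    1 January, ncols squares per row. Returns the date for (row, col),
    or None if that square falls past the end of the year.
    """
    day_index = row * ncols + col
    d = date(year, 1, 1) + timedelta(days=day_index)
    return d if d.year == year else None
```

The same calculation, run on the square under the mouse, gives the date to print next to the Orient button before the user clicks.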

Because temperature ranges are large, it has been quite hard to get the colors right. You might like to look at the recent North American cold spell for shades of blue. Incidentally, I see that the global average has slipped again, so November looks like a much cooler month than recently.

Monday, November 24, 2014

Updates to latest data page

Blogger tells me that of the Moyhu pages, latest data is the most viewed. I'll be adding to it, so I thought I should improve the organization, and also the load time. I've also added my version of the reanalysis index for recent days and months.

It now has a table of contents and links. Tables are in frames with an "Enlarge" toggle button. I'm planning to add WebGL plots (next post) for NCEP/NCAR (and maybe MERRA, if I can get Carrick's ideas working) daily data. Again, I have to avoid overburdening download times.

Saturday, November 22, 2014

Update on 2014 warmth.

A month ago I posted plots to see whether some global indices might show record warmth in 2014. The troposphere indices UAH and RSS are not in record territory. But others are. NOAA has just come out with an increased value for October, so a record there is very likely. The indices here all improved their prospects in October. HADCRUT is still to come.

In the previous post, I noted that the reanalysis data for November showed a considerable dip mid-month, corresponding to the North America freeze. November will probably be cooler than October - my guess is more like August.

Plots are below, showing the cumulative of the difference between month ave and 2010 annual average, divided by 12 (so final will be the difference in annual averages). NOAA and HADCRUT look very likely to reach a record, and also the TempLS indices. Cumulatively GISS is currently just above the 2010 average, but warm months are keeping the slope positive.
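The plotted quantity is simple to compute. Here is a minimal Python sketch, assuming a list of this year's monthly anomalies and the 2010 annual average on the same base; the function name is illustrative.

```python
def cumulative_vs_base(monthly, base_annual):
    """Running sum of (month - base_annual) / 12.

    After all 12 months, the last value equals this year's annual mean
    minus base_annual, so the year beats the base year if the curve ends
    above zero. Months warmer than base_annual make the curve rise.
    """
    out = []
    total = 0.0
    for v in monthly:
        total += (v - base_annual) / 12.0
        out.append(total)
    return out
```

Dividing the running total by the number of months so far, instead of by 12, gives an average to date, which is the scale difference from NOAA's version of the plot.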

Update - just something I noticed. About 3 months ago I commented how closely NOAA and TempLS were tracking. TempLS has since produced a new version, mesh-based, which tracks GISS quite closely. But the relation between old TempLS, now called TempLS grid, and NOAA is still remarkably close. Last month, both rose by 0.042°C. The previous month, small rises - TLS by 0.012 and NOAA by 0.014.

This time I'll just give the active plot with the 2010 average subtracted. There is an explanatory plot in the earlier post. The index will be a record if it ends the year above the axis. Months warmer than the 2010 average make the line head upwards.

Use the buttons to click through.

Tuesday, November 18, 2014

A "new" surface temperature index (reanalysis).

I've been looking at reanalysis data sets. These provide a whole-atmosphere picture of recent decades of weather. They work on a grid like those of numerical weather prediction programs, or GCMs. They do physical modelling which "assimilates" data from a variety of sources. They typically produce a 200 km horizontal grid of six-hourly data (maybe hourly if you want) at a variety of levels, including the surface.

Some are kept up to date, within a few days, and it is this aspect that interests me. They are easily integrated over space (regular grid, no missing data). I do so with some nervousness, because I don't know why the originating organizations like NCAR don't push this capability. Maybe there is a reason.

It's true that I don't expect an index which will be better than the existing ones. The reason is their indirectness. They are computing a huge number of variables over the whole atmosphere, using a lot of data, but even so it may be stretched thin. And of course, they don't directly get surface temperature, but the average in the top 100m or so. There are surface effects that they can miss. I noted a warning that Arctic reanalysis, for example, does not deal well with inversions. Still, they are closer to the surface than UAH or RSS-MSU.

But the recentness and resolution are a big attraction. I envisage daily averages during each month, and WebGL plots of the daily data. I've been watching the recent Arctic blast in the US, for example.

So I've analysed about 20 years of output (NCEP/NCAR) as an index. The data gets less reliable as you go back. Some goes back to the start of space data; some to about 1950. But for basically current work, I just need a long enough average to compute anomalies.

So I'll show plots comparing this new index with the others over months and years. It looks good. Then I'll show some current data. In a coming post, I'll post the surface shaded plots. And I'll probably automate and add it to the current data page.

Update: It's on the data page here, along with daily WebGL plots.

There are two surface temperature datasets, with similar layouts: SFC and sig995.

SFC seems slightly more currently updated, but is an older set. I saw a file labelled long term averages, which is just what I want, and found that it ended in 1995. Then I found that the reason was that it was made for a 1996 paper. It seems that reanalysis data sets can hybridize technologies of different eras. SFC goes back to 1979. I downloaded it, but found the earlier years patchy.

Then I tried sig995. That name refers to the sigma = 0.995 model level (where pressure is 0.995 of surface pressure), but it's also labelled surface. It goes back to 1948, and seems to be generally more recent. So that is the one I'm describing here.

Both sets are on a 2.5° grid (144x73) and offer daily averages. Of course, for the whole globe at daily resolution, it's not that easy to define which day you mean; there will be a cut somewhere. Anyway, I'm just following their definition. sig995 has switched to NetCDF-4; I use the R package ncdf4 to unpack it. I integrate with cosine weighting, but not simple cosine weighting, because the nodes are not the centers of the grid cells. In effect, I use cos latitude with trapezoidal integration.
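Here is a minimal Python sketch of cos-latitude weighting with trapezoidal integration on a node-based grid like this one. It assumes the field has already been unpacked (the NetCDF reading is left out) into 73 rows of 144 values, with node latitudes running from 90°N to 90°S. It illustrates the technique and is not the R code used for the index.

```python
import math

def global_mean(field, lats):
    """Area-weighted global mean on a regular lat-lon node grid.

    field: list of rows, one per latitude, each a list of values at
           equally spaced longitudes (periodic, so equal weights in longitude).
    lats:  node latitudes in degrees, equally spaced from pole to pole.
    Each row gets weight cos(latitude), halved at the two end rows as the
    trapezoidal rule requires. The grid spacing cancels in the ratio.
    """
    nlat = len(lats)
    num = 0.0
    den = 0.0
    for j, (lat, row) in enumerate(zip(lats, field)):
        w = math.cos(math.radians(lat))
        if j in (0, nlat - 1):
            w *= 0.5  # trapezoidal end-point factor (poles, where cos is ~0 anyway)
        num += w * (sum(row) / len(row))
        den += w
    return num / den
```

With nodes at 90, 87.5, ..., -90, the pole rows contribute essentially nothing, which is why this ends up so close to plain cos-latitude weighting of the nodes.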

Here is an interactive user-scalable graph. You can drag it with the mouse horizontally or vertically. If you drag up-down to the left of the vertical axis, you will change the vertical scaling (zoom). Likewise below the horizontal axis. So you can see how NCEP fares over the whole period.

The mean for the first 13 days of November was 0.173°C. That's down a lot on October, which was 0.281°C. I think the reason is the recent North American freeze, which was at its height on 13th. You can see the effect in the daily temperatures:

Anyway, we'll see what coming days bring.

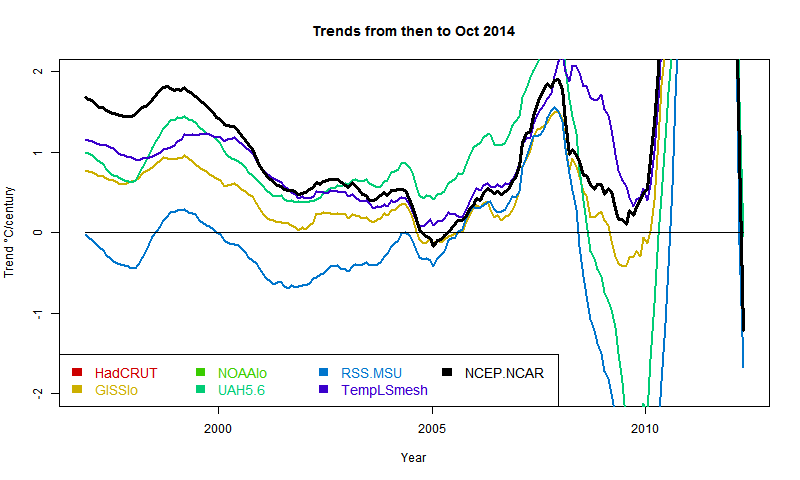

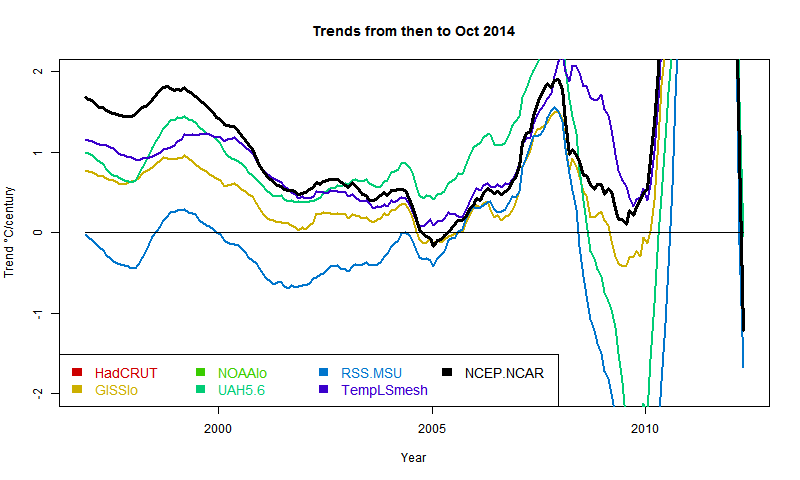

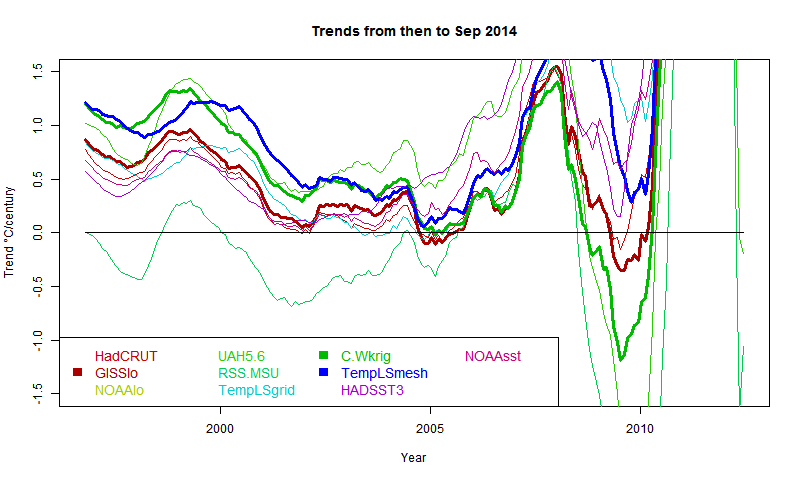

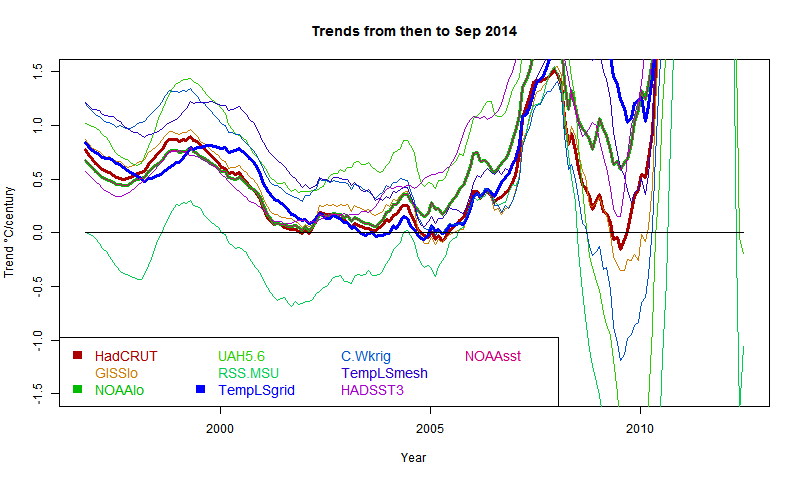

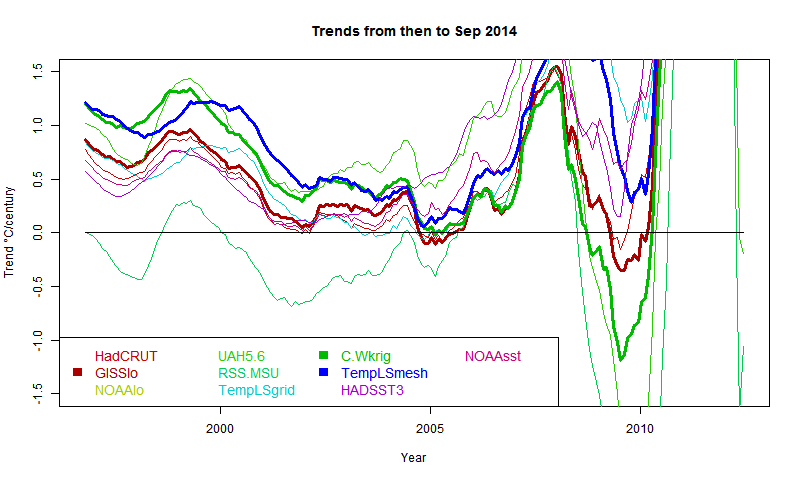

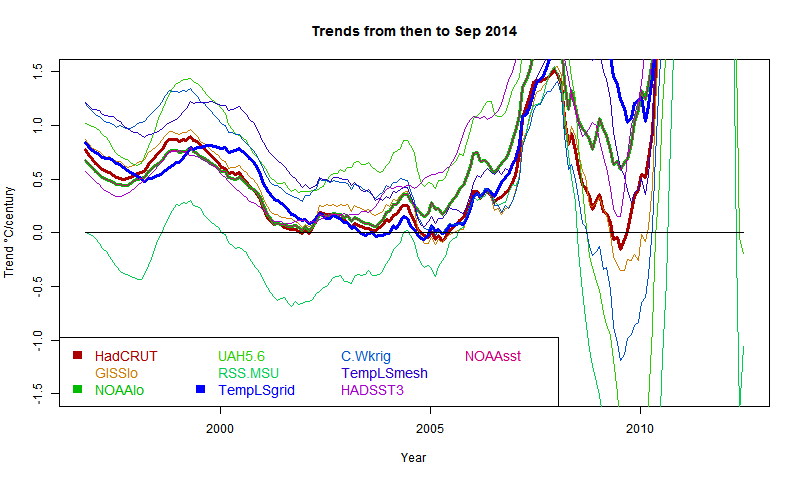

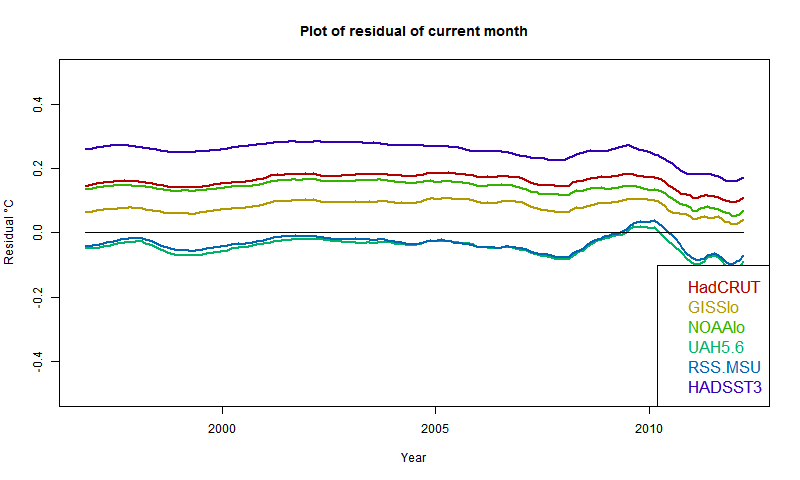

Update (following a comment of MMM). Below is a graph showing trends in the style of these posts - ie trend from the x-axis date to present, for various indices. I'll produce another post (this graph is mostly it) in the series when the NOAA result comes out. About the only "pause" dataset now, apart from MSU-RSS, is a brief dip by GISS in 2005. And now, also, NCEP/NCAR. However, the main thing for this post is that NCEP-NCAR drifts away in the positive direction pre 2000. This could be that it captures Arctic warming better, or just that trends are not reliable as you go back.
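The back-trend curve is just a sequence of OLS trends, one per start date, each running to the latest data. A minimal Python sketch, assuming times in decimal years and complete data; the function name is illustrative, not the code behind the graph.

```python
def back_trends(times, values, starts):
    """For each start time, the OLS trend (deg C/century) from that time to
    the last observation. Plotted against the start time, this gives the
    'trend from x-axis date to present' curve. None if fewer than 2 points.
    """
    def slope(ts, vs):
        n = len(ts)
        tbar = sum(ts) / n
        vbar = sum(vs) / n
        sxx = sum((t - tbar) ** 2 for t in ts)
        sxy = sum((t - tbar) * (v - vbar) for t, v in zip(ts, vs))
        return sxy / sxx

    result = []
    for s in starts:
        pairs = [(t, v) for t, v in zip(times, values) if t >= s]
        if len(pairs) < 2:
            result.append(None)
        else:
            ts, vs = zip(*pairs)
            result.append(100.0 * slope(ts, vs))
    return result
```

A "pause" shows up as a start date where the curve dips to zero or below; trends from early start dates rest on more data, while those from recent start dates are short and noisy.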

Some are kept up to date, within a few days, and it is this aspect that interests me. They are easily integrated over space (regular grid, no missing data). I do so with some nervousness, because I don't know why the originating organizations like NCAR don't push this capability. Maybe there is a reason.

It's true that I don't expect an index which will be better than the existing ones. The reason is their indirectness. They are computing a huge number of variables over the whole atmosphere, using a lot of data, but even so it may be stretched thin. And of course, they don't directly get surface temperature, but the average over the lowest 100m or so. There are surface effects that they can miss. I noted a warning that Arctic reanalysis, for example, does not deal well with inversions. Still, they are closer to the surface than UAH or RSS-MSU.

But the recentness and resolution are a big attraction. I envisage daily averages during each month, and WebGL plots of the daily data. I've been watching the recent Arctic blast in the US, for example.

So I've analysed about 20 years of output (NCEP/NCAR) as an index. The data gets less reliable as you go back. Some goes back to the start of space data; some to about 1950. But for basically current work, I just need a long enough average to compute anomalies.

So I'll show plots comparing this new index with the others over months and years. It looks good. Then I'll show some current data. In a coming post, I'll post the surface shaded plots. And I'll probably automate and add it to the current data page.

Update: It's on the data page here, along with daily WebGL plots.

More on reanalysis

Reanalysis projects flourished in the 1990s. They are basically an outgrowth of numerical weather forecasting, and the chief suppliers are NOAA/NCEP/NCAR and ECMWF. There is a good overview site here. There is a survey paper here (free) and a more recent one (NCEP CFS) here. I've been focussing on NCEP/NCAR because:

- They are kept up to date

- They are freely available as ftp downloadable files

- I can download surface temperature without associated variables

- They're in NetCDF format

There are two surface temperature datasets, in similar layout:

SFC seems slightly more currently updated, but is an older set. I saw a file labelled long term averages, which is just what I want, and found that it ended in 1995. Then I found that the reason was that it was made for a 1996 paper. It seems that reanalysis data sets can hybridize technologies of different eras. SFC goes back to 1979. I downloaded it, but found the earlier years patchy.

Then I tried sig995. That's a reference to the pressure level (I think), but it's also labelled surface. It goes back to 1948, and seems to be generally more recent. So that is the one I'm describing here.

Both sets are on a 2.5° grid (144x73) and offer daily averages. Of course, for the whole globe at daily resolution, it's not that easy to define which day you mean. There will be a cut somewhere. Anyway, I'm just following their definition. sig995 has switched to NetCDF4; I use the R package ncdf4 to unpack it. I integrate with cosine weighting. It's not simple cosine weighting, because the nodes are not the centers of the grid cells (the 73 latitudes include both poles). In effect, I use cos latitude with trapezoidal integration.
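Here is a minimal sketch of that kind of cosine-weighted trapezoidal average, in Python/NumPy rather than R. It is not the code behind the index; it assumes the daily field has already been unpacked from the NetCDF file into a 73 x 144 array (latitudes 90 to -90, longitudes 0 to 357.5), and the function name `global_mean_trapezoid` is just for illustration.

```python
import numpy as np

def global_mean_trapezoid(field, lats_deg):
    """Area-weighted global mean of a (nlat, nlon) field on a regular
    latitude-longitude grid whose latitude nodes include both poles.

    Latitude: cos(latitude) weighting combined with trapezoidal-rule weights
    (half weight at the two end nodes). Longitude is periodic, so the
    trapezoidal rule there reduces to a plain mean over the nodes.
    """
    field = np.asarray(field, dtype=float)
    lats = np.deg2rad(np.asarray(lats_deg, dtype=float))
    trap = np.ones_like(lats)
    trap[0] = trap[-1] = 0.5            # trapezoid end weights (uniform spacing assumed)
    w = np.cos(lats) * trap             # cosine-latitude weights
    zonal_means = field.mean(axis=1)    # average around each latitude circle
    return float(np.sum(w * zonal_means) / np.sum(w))

# Example: 144 x 73 NCEP-style grid, latitudes 90 to -90 in 2.5 degree steps
lats = np.linspace(90.0, -90.0, 73)
field = np.full((73, 144), 0.2)         # a uniform 0.2 anomaly field
print(global_mean_trapezoid(field, lats))
```

Because cos latitude vanishes at the poles, the polar nodes get (almost) zero weight anyway; the trapezoid half-weights matter only if the grid stops short of the poles.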

Results

So here are the plots of the monthly data, shown in the style of the latest data page with common anomaly base 1981-2010. The NCEP index is in black. I'm using 1994-2013 as the anomaly base for NCEP, so I have to match it to the average of the other data (not zero) in this period. You'll see that it runs a bit warmer - I wouldn't make too much of that.

NCEP/NCAR with major temperature indices - last 5 months

NCEP/NCAR with major temperature indices - last 4 years
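The base matching amounts to adding one constant offset. A minimal NumPy sketch, assuming the series are aligned monthly arrays; `match_to_reference` and the variable names in the commented usage are hypothetical.

```python
import numpy as np

def match_to_reference(series, reference, in_period):
    """Shift an anomaly series by a constant so that its mean over the chosen
    period equals the mean of a reference series over the same period."""
    series = np.asarray(series, dtype=float)
    reference = np.asarray(reference, dtype=float)
    in_period = np.asarray(in_period, dtype=bool)
    offset = reference[in_period].mean() - series[in_period].mean()
    return series + offset

# Hypothetical usage: `ncep` on its own 1994-2013 base, `others` a list of
# indices already on the common 1981-2010 base, `years` the decimal year of each month.
# reference = np.mean(np.vstack(others), axis=0)
# ncep_matched = match_to_reference(ncep, reference, (years >= 1994) & (years < 2014))
```

A constant shift changes no trends or month-to-month differences; it only sets where the curve sits relative to the others.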

Here is an interactive user-scalable graph. You can drag it with the mouse horizontally or vertically. If you drag up-down to the left of the vertical axis, you will change the vertical scaling (zoom). Likewise below the horizontal axis. So you can see how NCEP fares over the whole period.

Recent months and days

Here is a table of months. This is now in the native anomaly bases. NCEP/NCAR looks low because its base is recent, even hiatic.

The mean for the first 13 days of November was 0.173°C. That's down a lot on October, which was 0.281°C. I think the reason is the recent North American freeze, which was at its height on the 13th. You can see the effect in the daily temperatures:

| Day (November 2014) | Anomaly (°C) |
|---|---|
| 1 | 0.296 |
| 2 | 0.25 |
| 3 | 0.259 |
| 4 | 0.287 |
| 5 | 0.229 |
| 6 | 0.214 |
| 7 | 0.202 |
| 8 | 0.165 |
| 9 | 0.135 |
| 10 | 0.154 |
| 11 | 0.091 |
| 12 | 0.018 |
| 13 | -0.049 |
| 14 | 0.049 |
| 15 | 0.147 |
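As an arithmetic check, the 0.173°C figure is the plain average of the first 13 daily values in the table; including the 14th and 15th brings it down to about 0.163°C. In Python:

```python
# Daily NCEP/NCAR anomalies (°C) for 1-15 November 2014, copied from the table above
daily = [0.296, 0.25, 0.259, 0.287, 0.229, 0.214, 0.202, 0.165,
         0.135, 0.154, 0.091, 0.018, -0.049, 0.049, 0.147]

mean_13 = sum(daily[:13]) / 13           # first 13 days
mean_15 = sum(daily) / len(daily)        # all 15 days shown
print(f"{mean_13:.3f} {mean_15:.3f}")    # prints: 0.173 0.163
```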

Anyway, we'll see what coming days bring.

Update (following a comment of MMM). Below is a graph showing trends in the style of these posts - ie trend from the x-axis date to present, for various indices. I'll produce another post in the series (this graph is mostly it) when the NOAA result comes out. About the only "pause" datasets now, apart from MSU-RSS, are GISS, with a brief dip in 2005, and now also NCEP/NCAR. However, the main thing for this post is that NCEP/NCAR drifts away in the positive direction before 2000. This could be because it captures Arctic warming better, or just because trends are not reliable as you go back.
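A back-trend plot of this kind can be built by fitting an ordinary least squares line from each start month to the latest month. A minimal NumPy sketch, assuming a monthly series with time in decimal years; `back_trends` and the 24-month minimum window are illustrative choices, not the code behind the graph.

```python
import numpy as np

def back_trends(t, y, min_points=24):
    """OLS trend from each start time to the end of the series.

    t: times in decimal years; y: anomalies (°C).
    Returns (start_times, trends) with trends in °C/century.
    Start points leaving fewer than `min_points` months are skipped.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    starts, trends = [], []
    for i in range(len(t) - min_points + 1):
        slope = np.polyfit(t[i:], y[i:], 1)[0]   # °C per year
        starts.append(t[i])
        trends.append(100.0 * slope)             # °C per century
    return np.array(starts), np.array(trends)
```

Plotting `trends` against `starts` gives the curve; a "pause" in this sense is any start date where the curve drops to zero or below.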

Sunday, November 16, 2014

October GISS unchanged, still high

GISS has posted its October estimate for global temperature anomaly. It was 0.76°C, the same as the revised September (had been 0.77°C). TempLS mesh was also almost exactly the same (0.664°C). TempLS grid, which I expect to behave more like HADCRUT and NOAA, rose from 0.592°C to 0.634°C.

The comparison maps are below the jump.

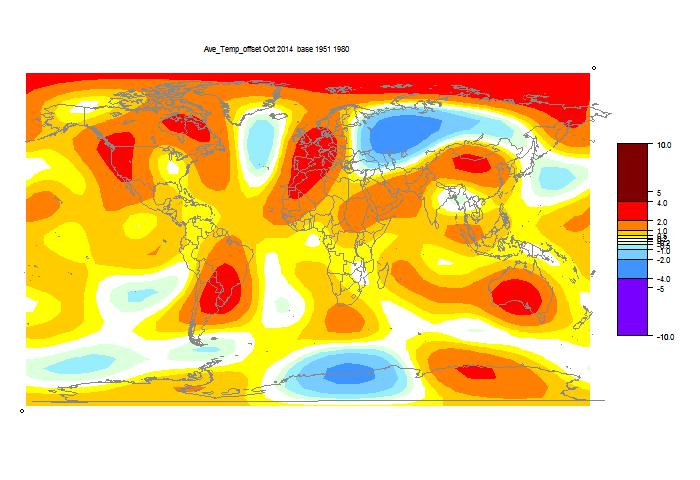

Here is the GISS map:

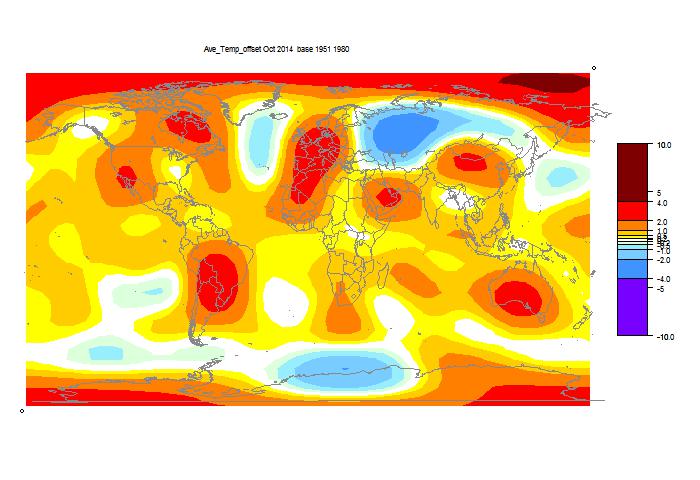

And here, with the same scale and color scheme, is the earlier mesh weighted TempLS map:

And finally, here is the TempLS grid weighting map:

List of earlier monthly reports

More data and plots


Saturday, November 15, 2014

Lingering the pause

As I predicted, the Pause, as measured by periods of zero or negative trend in global temperature anomaly, is fading. And some who were fond of it have noticed, in threads at Lucia's and at WUWT, for example.


Now I don't think there's any magic in a zero trend, and there's plenty of room to argue that trends are still smaller than expected. Lucia wants to test against predictions, which makes sense. But I suspect many pause fans prefer their numbers black and white, and we'll hear more about periods of trend not significantly different from zero. So the pause lingers.

We already have. A while ago, when someone objected at WUWT to Lord M using exclusively the RSS record of long negative trend, Willis responded

"Sedron, the UAH record shows no trend since August 1994, a total of 18 years 9 months."

When Sedron and I protested that the UAH trend over that time was 1.38°C/century, he said:

"I assumed you knew that everyone was talking about statistically significant trends, so I didn’t mention that part."

And that is part of the point. A trend can fail a significance test (re 0) and still be quite large. Even quite close to what was predicted. I posted on this here.

I think we'll hear more of some special candidates, and the reason is partly that the significance test allows for autocorrelation. Some data sets have more of that than others. SST has a lot, and I saw HADSST3 mentioned in this WUWT thread. So below the fold, I'll give a table of the various datasets, and the Quenouille factor that adjusts for autocorrelation. UAH and the SSTs do stand out.

Here is a table of cases you may hear cited (SS=statistically significant re 0):

| Dataset | No SS trend since... | Period | Actual trend in that time (°C/century) |
|---|---|---|---|
| UAH | June 1996 | 18 yrs 4 mths | 1.080 |
| HADCRUT 4 | June 1997 | 17 yrs 3 mths | 0.912 |
| HADSST3 | Jan 1995 | 19 yrs 9 mths | 0.921 |

These trends are not huge, but far from zero.

So here is the analysis of autocorrelation. If r is the lag-1 autocorrelation, used in an AR(1) ARIMA model, then the Quenouille adjustment for autocorrelation reduces the number of degrees of freedom by a factor Q=(1-r)/(1+r). Essentially, the variance of the trend is inflated by 1/Q. Put another way, since initially the d.o.f. is the number of months, all other things being equal, it takes 1/Q times as many months to accumulate the same d.o.f., so the period without statistical significance is lengthened.
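A sketch of the adjustment in Python/NumPy. Note that it estimates r as the lag-1 autocorrelation of the OLS residuals, a simpler stand-in for the ar1 coefficient from R's arima() used for the table below, so the numbers will differ somewhat. The function names and the synthetic AR(1) demo series are illustrative.

```python
import numpy as np

def quenouille_q(r):
    """Quenouille factor Q = (1 - r) / (1 + r) for lag-1 autocorrelation r."""
    return (1.0 - r) / (1.0 + r)

def trend_with_ar1_adjustment(t, y):
    """OLS trend with naive and Quenouille-adjusted standard errors."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(t)
    slope, intercept = np.polyfit(t, y, 1)
    resid = y - (slope * t + intercept)
    # Lag-1 autocorrelation of the residuals (they have zero mean)
    r = np.sum(resid[1:] * resid[:-1]) / np.sum(resid ** 2)
    q = quenouille_q(r)
    se_naive = np.sqrt(np.sum(resid ** 2) / (n - 2) / np.sum((t - t.mean()) ** 2))
    se_adj = se_naive / np.sqrt(q)   # variance inflated by 1/Q
    return slope, se_naive, se_adj, r, q

# Synthetic example: 20 years of monthly data, trend 1 °C/century plus AR(1) noise
rng = np.random.default_rng(0)
n = 240
noise = rng.normal(0.0, 0.1, n)
e = np.zeros(n)
for i in range(1, n):
    e[i] = 0.8 * e[i - 1] + noise[i]
t = 1995 + np.arange(n) / 12.0
y = 0.01 * (t - 1995) + e
slope, se_naive, se_adj, r, q = trend_with_ar1_adjustment(t, y)
print(f"trend {100 * slope:.2f} C/century, r={r:.2f}, Q={q:.3f}, SE x{se_adj / se_naive:.2f}")
```

A trend is then "statistically significant re 0" at roughly the 95% level when |slope| exceeds about 2 adjusted standard errors.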

So here, for various datasets and recent periods, is a table of Q, calculated from r=ar1 coefficient from the R arima() function:

| Dataset | 1990-2013 | 1995-2013 | 2000-2013 | 2005-2013 |
|---|---|---|---|---|
| HadCRUT 4 | 0.1078 | 0.1711 | 0.269 | 0.3092 |
| GISS Land/Ocean | 0.1378 | 0.2155 | 0.2907 | 0.3244 |
| NOAA Land/Ocean | 0.121 | 0.1949 | 0.3186 | 0.3396 |
| UAH5.6 | 0.0789 | 0.1132 | 0.1538 | 0.1837 |
| RSS.MSU | 0.0978 | 0.142 | 0.2 | 0.1814 |
| TempLS grid | 0.1349 | 0.1959 | 0.3298 | 0.3748 |
| BEST Land/Ocean | 0.1201 | 0.1799 | 0.2326 | 0.3081 |
| Cowtan/Way krig | 0.1032 | 0.1642 | 0.2215 | 0.2939 |
| TempLS mesh | 0.1266 | 0.1862 | 0.2698 | 0.3165 |
| BEST Land | 0.2923 | 0.3953 | 0.4608 | 0.4835 |
| GISS.Ts | 0.1465 | 0.2351 | 0.342 | 0.3978 |
| CRUTEM Land | 0.2041 | 0.31 | 0.4614 | 0.5105 |
| NOAA Land | 0.3451 | 0.4795 | 0.6438 | 0.6319 |
| HADSST3 | 0.036 | 0.0504 | 0.0736 | 0.0888 |
| NOAA SST | 0.0178 | 0.0251 | 0.0387 | 0.0514 |

Broadly, SST has low Q, land fairly high, and Land/Ocean measures, made up of land and SST, are the expected hybrid. The troposphere measures, especially UAH, have lower Q, and so longer periods without statistically significant non-zero trend.

Wednesday, November 12, 2014

Seasonal insolation

This post was prompted by some recent posts at WUWT. It's about the expected thermal effect of the Earth's eccentric orbit. It produces a variable total solar insolation for the planet, which one might expect to be reflected in temperatures. A few days ago, Willis contrasted the small solar cycle fluctuation with this much larger oscillation, suggesting that if we couldn't detect the orbital effect, then the solar cycle effect couldn't be much. And just now, Stan Robertson at WUWT took up the idea, looking for the eccentricity signal in annual global anomaly indices.

I've also wondered about the effect of eccentricity. But when you think about anomalies, it is clear that they subtract out any annual cycle, so the effect can't be found there. And in fact it's going to be hard to disentangle it from the effect of axial tilt. A GCM could, of course, be run with a circular orbit instead, which would determine it.

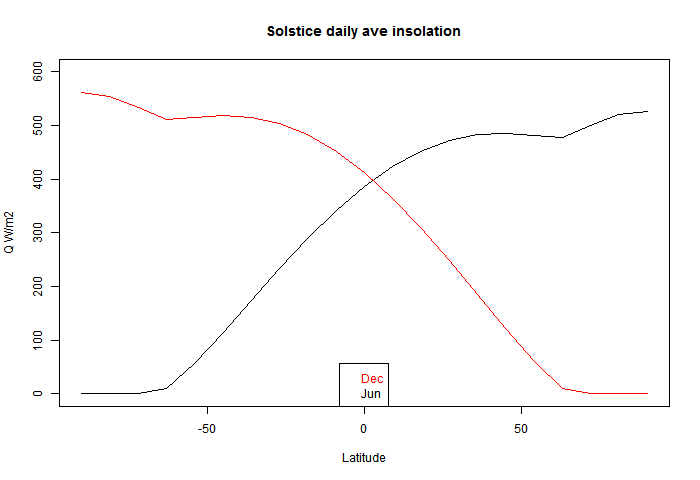

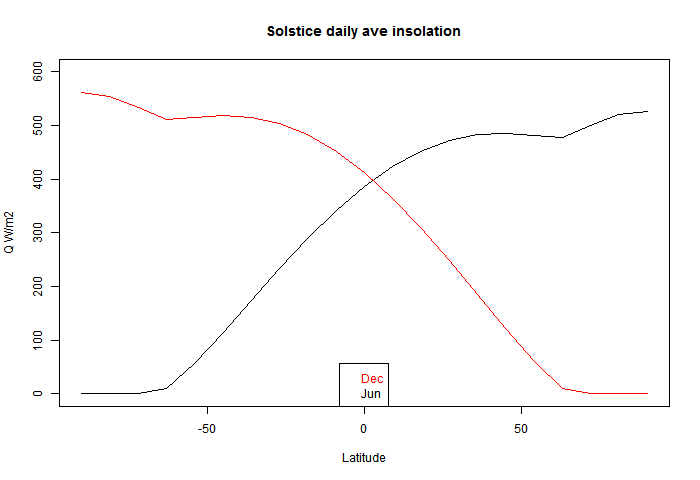

Anyway, someone posted a plot of average daily insolation against time of year and latitude. That is, at TOA, or for an airless Earth. I was surprised that the maximum for the year was at the solstice at the relevant Pole. I found a good plot and the relevant maths in Wikipedia. So I'll show that below the jump, with a brief version of the math, and a plot of variation with latitude at the solstice. It isn't even monotonic.

So here is the plot, with caption also from Wiki.

Caption (from Wikipedia): the theoretical daily-average insolation at the top of the atmosphere, where θ is the polar angle of the Earth's orbit, and θ = 0 at the vernal equinox, and θ = 90° at the summer solstice; φ is the latitude of the Earth. The calculation assumed conditions appropriate for 2000 A.D.: a solar constant of S0 = 1367 W m−2, obliquity of ε = 23.4398°, longitude of perihelion of ϖ = 282.895°, eccentricity e = 0.016704. Contour labels (green) are in units of W m−2.


The y axis is latitude; the x axis is the orbital angle θ, starting at the March equinox. You can see the effect of orbital eccentricity in making the S pole warmer. That pole also shows the non-monotonicity clearly; there is a pinch near the Antarctic circle.

So here is the Wiki math, using the above notation:

Solve

for h₀

Solve

for δ

But here I found a very rare math error in Wiki. The last term should not have an ω, so I removed it.

Now substituting

in

we have the solution.

I used this to plot the solstice curves:

You can see that there is a discontinuity in the derivative (the 2nd derivative; see PP in comments) at the polar circles, beyond which one pole goes into 24-hour night and the other gets the benefit of 24-hour insolation, which is enough to exceed even the average daily tropical insolation (without atmospheric losses, of course).
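For anyone wanting to reproduce solstice curves like these, here is a minimal Python sketch of the standard daily-mean top-of-atmosphere insolation formula, with the parameter values from the Wikipedia caption as defaults. It uses sin δ = sin ε sin θ for the declination and the usual Keplerian distance factor; it is a textbook version, not a transcription of the corrected Wiki formulas above, and the function name is illustrative.

```python
import math

def daily_insolation(lat_deg, theta_deg, S0=1367.0, eps_deg=23.4398,
                     e=0.016704, varpi_deg=282.895):
    """Daily-mean top-of-atmosphere insolation (W m^-2).

    lat_deg: latitude; theta_deg: orbital angle from the March equinox
    (90 = June solstice). Defaults are the year-2000 values in the caption.
    """
    phi = math.radians(lat_deg)
    theta = math.radians(theta_deg)
    eps = math.radians(eps_deg)
    varpi = math.radians(varpi_deg)
    # Solar declination
    delta = math.asin(math.sin(eps) * math.sin(theta))
    # Sunset hour angle h0; clipping gives h0 = pi for polar day, 0 for polar night
    x = -math.tan(phi) * math.tan(delta)
    h0 = math.acos(max(-1.0, min(1.0, x)))
    # (R0/RE)^2 for an elliptical orbit with eccentricity e and perihelion longitude varpi
    dist_factor = ((1.0 + e * math.cos(theta - varpi)) / (1.0 - e * e)) ** 2
    return (S0 / math.pi) * dist_factor * (
        h0 * math.sin(phi) * math.sin(delta)
        + math.cos(phi) * math.cos(delta) * math.sin(h0)
    )

# June solstice (theta = 90) profile from pole to pole, every 10 degrees
for lat in range(-90, 91, 10):
    print(f"{lat:4d} {daily_insolation(lat, 90.0):6.1f}")
```

At the solstice the pole gets about S0 sin ε (scaled by the distance factor), which exceeds the equatorial value, and the profile dips around 60° before rising again toward the pole. Comparing the December solstice at the S pole (theta = 270) with the June solstice at the N pole shows the eccentricity effect.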


Monday, November 10, 2014

Update on GHCN and TempLS early reporting

About a month ago, I posted on a proposed new scheme for reporting monthly averages with a mesh version of TempLS. The idea was to report continuously as data (land source GHCN) came in. I wondered how reliable the very early estimates might be. I was quite optimistic.

So, wouldn't you know, November is the first month in my experience when GHCN didn't keep to their regular schedule. Normally there are daily updates from month start, with the largest in the first day or two. But this month, nothing at all until the 8th. Sure enough, my program faithfully produced an average (0.589°C) based on SST alone; it's been told now not to do that again.

Anyway, the data has arrived, and is up on the latest data page. October (with GHCN) was 0.664°C; almost exactly the same as September, which was pretty warm. For once, there was little cold in N America, and W Europe was warm. The main cold spot was Russia/Kazakhstan.


Saturday, November 8, 2014

GCM's are models

I'd like to bring together some things I expound from time to time about GCMs and predictions. It's a response to questions like: why didn't GCMs predict the pause? Or: why can't they get the temperature right in Alice Springs?

GCMs are actually models. Suppose you were designing the Titanic. You might make a scale model, which, with suitably scaled dimensions (Reynolds number etc) could be a good model indeed. It would respond to various forcings (propeller thrust, wind, wave motion) just like the real boat. You would test it with various scenarios. Hurricanes, maybe listing, maybe even icebergs. It can tell you many useful things. But it won't tell you whether the Titanic will hit an iceberg. It just doesn't have that sort of information.

So it is with GCMs. They too will tell you how the Earth's climate will respond to forcings. You can subject them to scenarios. But they won't predict weather. They aren't initialized to do that. And, famously, weather is chaotic. You can't actually predict it for very long from initial conditions. If models are doing their job, they will be chaotic too. You can't use them to solve an initial value problem.


Friday, November 7, 2014

Climate blog index again

About a year ago, I described a JavaScript exercise I began in mid-2013, when Google Reader was discontinued. I thought I might write my own RSS reader, with indexing capability. I found that feedly was a good replacement for Reader, so that didn't continue. However, I thought a more limited RSS index of climate blogs would be handy. A big motivation was just to have an index of my own comments (to avoid boring the public with repetition).


So I set up a page, and set my computer to reading the RSS outputs every hour. The good news is that that has happened more or less continuously. The bad news was that junk accumulated, and downloading was slow.

So I've done two new main things:

- Pruned the initial download. I had already reduced the initial offering to just two days of comments, but I still downloaded details of all threads and commenters. More than half the commenters listed had only ever made one comment. They include, of course, various spammers and typos. So I removed them, unless their comment was in the last month. I also divided the threads into current and dormant (no activity in two months). Current threads are downloaded at start; dormant ones can be added (button), or will come automatically if data more than two months old is requested. It's faster, if not fast.

- I've added a facility where a string is shown that you can add to the URL to get it to go to the current state. That includes selected index items (commenter etc) and months. The main idea is that you can store a URL which will go straight to a list of your own comments over some period (remembering that each month takes a while to download). Examples below.
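The pruning rules in the first item can be sketched in a few lines of Python. This is only an illustration: the data layout (a list of (commenter, date) pairs and a dict of thread last-activity dates) and the function names are hypothetical, and "last month" and "two months" are taken as 30 and 61 days.

```python
from datetime import date, timedelta

def prune_commenters(comments, today, recent_days=30):
    """Return the commenters to keep: anyone with more than one comment,
    or whose (single) comment falls within the last `recent_days` days.
    `comments` is a list of (commenter, comment_date) pairs."""
    counts, last_seen = {}, {}
    for who, when in comments:
        counts[who] = counts.get(who, 0) + 1
        last_seen[who] = max(last_seen.get(who, when), when)
    cutoff = today - timedelta(days=recent_days)
    return {who for who in counts if counts[who] > 1 or last_seen[who] >= cutoff}

def split_threads(last_activity, today, dormant_days=61):
    """Split threads into (current, dormant) by days since last activity.
    `last_activity` maps thread id -> date of the latest comment."""
    current, dormant = [], []
    for thread, when in last_activity.items():
        if today - when <= timedelta(days=dormant_days):
            current.append(thread)
        else:
            dormant.append(thread)
    return current, dormant
```

Only the "current" list would be fetched at start; the "dormant" list is what a button or an older date request would pull in.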

As with my blogroll, I've included blogs with broad readership; not necessarily the ones I recommend.

Here are some examples of selections:

Stoat, last two months (two months takes a few seconds to load)

My comments at WUWT, last two months

Posts by Bob Tisdale at WUWT in November

Wednesday, October 29, 2014

Calculating the environmental lapse rate

I have posted over the years on the mechanisms of the lapse rate - the vertical temperature gradient in the atmosphere. It started with one of my first posts on what if there were no GHE. My basic contention has been that lapse rates are a consequence of vertical air motions in a gravity field. Wind tends to drive the gradient toward the DALR (dry adiabatic lapse rate) = -9.8 K/km. Maintaining the gradient takes kinetic energy from the wind to operate a heat pump. The pump forces heat down, to make up for the flux transported up by the gradient. The pump effect is proportional to the difference between the actual lapse rate La and the DALR L. L is the stability limit, and a steeper gradient will convert the pump into an engine, with convective instability. This also pushes La (down) toward L.

I developed these ideas in posts here, here and here. But I have wondered about the role of infrared radiation (with GHGs), and why the actual gradient is usually below the DALR. The latter is often attributed to latent heat of water, and called the moist ALR. But that is only effective if there is actual phase change (usually condensation).

I now see how it works. The heat pump reduces entropy, proportionally to the energy it takes from the wind. The entropy can indeed be related to the gradient and the effective thermal conductivity; the largest component of that is a radiative mechanism. So the lapse rate rises to the maximum level that the wind energy can sustain, given the conductive leakage.

I'll write a simplified argument first. Consider a parcel of dry air, mass m, which rises vertically a distance dz for a time, at ambient pressure P=Pa, starting at ambient temperature T=Ta. The motion is adiabatic, but the parcel then comes to rest and exchanges heat with its surroundings.

The temperature inside the parcel drops at the same rate as the DALR, so the difference satisfies d(T-Ta)/dz = -(L-La)

The density difference is proportional to this

d(ρ-ρa)/dz = -(L-La)*ρ/T

I'm ignoring second order terms in dz.

The net (negative) buoyancy force is

F = V g (ρ-ρa)

dF/dz = -V g (L-La)*ρ/T

The work done against buoyancy is

$$-\int_0^{dz} F\,dz' = \tfrac{1}{2}\,V g\,(L-L_a)\,\frac{\rho}{T}\,dz^2$$

Note that this is independent of the sign of dz; the same work is done ascending as descending.

Because the temperature on arrival is different from ambient, heat has been transported. I could work out the flux, but it isn't very useful for macroscopic work. The reason is that not only is it signed, but separate motions convey heat over different segments, and there is no easy way of adding them up. Instead, an appropriate scalar to compute is the entropy removed. Heat pumps do reduce entropy; that's why they require energy. Of course, entropy is created in providing that energy.

The simplest way to calculate entropy reduction is to note that the Helmholtz Free Energy U - TS (U=internal energy) is unchanged, because the motion is adiabatic. This means T dS and P dV (pressure volume work) are balanced. And P dV is from the buoyancy work. So:

$$T\,dS = -\tfrac{1}{2}\,V g\,(L-L_a)\,\frac{\rho}{T}\,dz^2 = -\tfrac{1}{2}\,m g\,\frac{L-L_a}{T}\,dz^2 \qquad (m = \rho V)$$

where S is entropy

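Going macro

I've shown the work done and entropy generated by a single movement. I'll try to relate that to a continuum. I've used a particular artificial example to link work done with entropy removed. In fact, turbulence typically consists of eddy motions.

Assume there is a distribution of vertical velocity components v in a slice of height dz. I can then re-express the work done as a power per unit volume: F v = 1/2 v.dx' g (L-La)*ρ/T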

In Latex I'd use hats to indicate averages.

I've left in a dx' which was the old distance of rise, which determines the average temperature discrepancy between parcel and ambient. It's not obvious what it should now be. But I think the best estimate for now is the Prandtl mixing length. This is related to the turbulent viscosity, and in turn to the turbulent kinetic energy (per unit volume) TKE.

So now it gets a bit more handwavy, but the formula becomes

Average power/vol (taken from wind) ~ -g (L-La)/T * TKE

This follows through to the rate of entropy removal, which is

$$\text{rate of entropy} \sim -\frac{g\,(L-L_a)}{T^2}\,\mathrm{TKE}$$

(power divided by T)

If there is also a conductive heat flux

Q = -k dT/dz

where k is a conductivity,

then the volume rate of creation of entropy is

$$\frac{dS}{dt} = -Q\,d(1/T) = -\frac{Q}{T^2}\,\frac{dT}{dz} = k\,L_a\,T^{-2}$$

So what is k? Molecular conductivity would contribute, but where GHGs are present the main part is infrared, which is transferred from warmer regions to cooler by absorption and re-emission. In the limit of high opacity, this follows a Fourier law in the Rosseland approximation:

$$\text{flux} = 16\,s\,G\,n^2\,T^3\,\frac{dT}{dz}$$

Here s is the Stefan-Boltzmann constant, G an optical parameter (see link), and n the refractive index. Three optical depths is often used as a rule of thumb for high opacity; we don't have that, but you can extend the approximation down by using fuzzy boundaries, where, for example, there is a sink region with transmission direct to space.

Update: I forgot to say the main thing about G which is relevant here, which is that it is inversely proportional to absorptivity (with an offset). IOW, more GHG means less conductivity.
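To make the conductivity point concrete, here is a small Python sketch of the textbook Rosseland form, flux = -k dT/dz with k = 16 n² σ T³/(3β), where σ is the Stefan-Boltzmann constant and β a Rosseland-mean extinction coefficient. Identifying G above with 1/(3β) is an assumption (it ignores the offset just mentioned), and the function name and β values are illustrative.

```python
SIGMA_SB = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def rosseland_conductivity(T, beta, n=1.0):
    """Radiative conductivity k (W m^-1 K^-1) in the optically thick limit.

    T: temperature (K); beta: Rosseland-mean extinction coefficient (1/m),
    assumed here to play the role of 1/(3G); n: refractive index (about 1 for air).
    """
    return 16.0 * n**2 * SIGMA_SB * T**3 / (3.0 * beta)

# Doubling the (assumed) extinction coefficient halves the radiative conductivity
k1 = rosseland_conductivity(250.0, 1e-2)
k2 = rosseland_conductivity(250.0, 2e-2)
print(f"{k1:.1f} {k2:.1f}")   # prints: 472.5 236.3
```

This is the sense in which more GHG (larger β) means less conductivity.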

Update

I've made an error here. I assumed that the flow expansion was adiabatic. This is conventional, and relates to the time scale of the motion. But I've also assumed adiabatic for the entropy balance, and that is wrong. There is a through flux of energy, mainly as IR, as indicated. And that flux carries entropy with it. So the formula should be:

$$\frac{dS}{dt} = (k\,L_a - Q)\,T^{-2}$$

where Q is the nett flow of heat. I'll correct below. It is significant, and may change the sign.

$$k\,L_a\,T^{-2} \sim \frac{g\,(L-L_a)}{T^2}\,\mathrm{TKE}$$

or

$$L_a - L \sim -\frac{k\,L_a - Q}{g\,\mathrm{TKE}}$$

Obviously, there is an unspecified constant of proportionality (with time units), which comes from the nature of turbulence. But I don't think it should vary greatly with, say, wind speed.

So what can we say about the discrepancy between environmental lapse rate La and theoretical DALR L (= -g/cp)?

So what about moisture? That is what the difference between La and L is usually attributed to.

I think moisture is best accounted for within the DALR formulation itself. The DALR L is, again, L = -g/cp, where cp is the specific heat of the gas (air). But in the derivation, it is just the heat required to raise the temperature of unit mass by 1 °C (OK, that is what specific heat means), and you could include the heat required to overcome phase change in that. That increases cp and brings down the lapse rate. The thing about the moist ALR is that water only has a big effect when it actually changes phase. That's a point in space and time. Otherwise moist air behaves much like dry. Of course, an environmental lapse rate is only measured after there has been much mixing.
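A quick numerical check of L = -g/cp, and of the point that folding latent heat into an effective cp reduces the magnitude of the lapse rate. A Python sketch; cp = 1004 J/(kg K) for dry air is a standard value, while the 50% increase in effective cp is purely illustrative.

```python
G = 9.81         # gravitational acceleration, m s^-2
CP_DRY = 1004.0  # specific heat of dry air at constant pressure, J kg^-1 K^-1

def adiabatic_lapse_rate(cp_eff=CP_DRY, g=G):
    """Adiabatic lapse rate L = -g/cp, in K/km (negative: T falls with height)."""
    return -g / cp_eff * 1000.0

dry = adiabatic_lapse_rate()
# Illustrative only: latent heat folded into an effective cp 50% above dry air
moist_like = adiabatic_lapse_rate(cp_eff=1.5 * CP_DRY)
print(f"{dry:.2f} K/km, {moist_like:.2f} K/km")   # prints: -9.77 K/km, -6.51 K/km
```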

I developed these ideas in posts here, here and here. But I have wondered about the role of infrared radiation (with GHGs), and why the actual gradient is usually below the DALR. The latter is often attributed to latent heat of water, and called the moist ALR. But that is only effective if there is actual phase change (usually condensation).

I now see how it works. The heat pump reduces entropy, proportionally to the energy it takes from the wind. The entropy can indeed be related to the gradient and the effective thermal conductivity; the largest component of that is a radiative mechanism. So the lapse rate rises to the maximum level that the wind energy can sustain, given the conductive leakage.

I'll write a simplified argument first. Consider a parcel of dry air, mass m, which rises vertically dist dz for a time, at ambient pressure P=Pa, starting at ambient temperature T=Ta. The motion is adiabatic, but it then comes to rest and exchanged heat with ambient.

The temperature inside the parcel drops at the same rate as the DALR, so the difference : d(T-Ta)/dz = -(L-La)

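The density difference is proportional to this. At equal pressure density varies as 1/T, so a parcel colder than ambient is denser:

$$\frac{d(\rho - \rho_a)}{dz} = (L - L_a)\,\frac{\rho}{T}$$

I'm ignoring second order terms in dz.

The net (negative) buoyancy force is

$$F = V g\,(\rho - \rho_a)$$

$$\frac{dF}{dz} = V g\,(L - L_a)\,\frac{\rho}{T}$$

The work done against buoyancy is

$$\int_0^{dz} F\,dz' = \frac{1}{2} V g\,(L - L_a)\,\frac{\rho}{T}\,dz^2$$

Note that this is independent of the sign of dz; the same work is done ascending as descending.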

Because the temperature on arrival differs from ambient, heat has been transported. I could work out the flux, but it isn't very useful for macroscopic work: not only is it signed, but separate motions convey heat over different segments, and there is no easy way of adding them up. Instead, an appropriate scalar to compute is the entropy removed. Heat pumps do reduce entropy; that's why they require energy. Of course, entropy is created in providing that energy.

The simplest way to calculate entropy reduction is to note that the Helmholtz Free Energy U - TS (U=internal energy) is unchanged, because the motion is adiabatic. This means T dS and P dV (pressure volume work) are balanced. And P dV is from the buoyancy work. So:

$$T\,dS = -\frac{1}{2}\, m g\,\frac{L - L_a}{T}\,dz^2$$

where S is entropy and m = ρV.
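The single-parcel argument can be checked numerically. Below is a minimal Python sketch, assuming textbook dry-air constants (g = 9.81 m/s², cp = 1004 J/(kg K), R = 287 J/(kg K)), an illustrative environmental lapse rate of 6.5 K/km, and pressure held fixed over a 100 m displacement. It integrates the force V g (ρ - ρa), with densities from the ideal gas law, compares the result with the closed form ½ V g (L - La)(ρ/T) dz², repeats the calculation for a descent, and applies T dS = -W. The function names are hypothetical.

```python
G = 9.81        # gravitational acceleration, m/s^2
CP = 1004.0     # specific heat of dry air at constant pressure, J/(kg K)
R_DRY = 287.0   # gas constant for dry air, J/(kg K)


def excess_density(z, lapse_env, temp0, p0):
    """Parcel density minus ambient density after a displacement z, both at pressure p0."""
    t_parcel = temp0 - (G / CP) * z    # parcel follows the DALR, L = g/cp
    t_ambient = temp0 - lapse_env * z  # environment follows La
    return p0 / R_DRY * (1.0 / t_parcel - 1.0 / t_ambient)


def buoyancy_work(dz, lapse_env, temp0=288.0, p0=101325.0, volume=1.0, steps=1000):
    """Trapezoid-rule integral of F = V g (rho - rho_a) from 0 to dz (J)."""
    h = dz / steps
    work = 0.0
    for i in range(steps):
        f0 = volume * G * excess_density(i * h, lapse_env, temp0, p0)
        f1 = volume * G * excess_density((i + 1) * h, lapse_env, temp0, p0)
        work += 0.5 * (f0 + f1) * h
    return work


def work_formula(dz, lapse_env, temp0=288.0, p0=101325.0, volume=1.0):
    """Closed form 1/2 V g (L - La) (rho/T) dz^2."""
    rho = p0 / (R_DRY * temp0)
    return 0.5 * volume * G * (G / CP - lapse_env) * rho / temp0 * dz ** 2


dz, la, t0 = 100.0, 0.0065, 288.0
w_up = buoyancy_work(dz, la, t0)
w_down = buoyancy_work(-dz, la, t0)
w_closed = work_formula(dz, la, t0)
ds = -w_up / t0   # entropy change from T dS = -W (J/K)
print(f"up {w_up:.4f} J, down {w_down:.4f} J, formula {w_closed:.4f} J, dS {ds:.2e} J/K")
```

Parcel and ambient are always compared at the same level, so holding pressure fixed keeps the comparison like-for-like; the small differences between the three work values are the second order terms dropped in the derivation.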

Going macro

I've shown the work done and entropy removed by a single movement. I'll try to relate that to a continuum. I've used a particular artificial example to link work done with entropy removed. In fact, turbulence typically consists of eddy motions. Assume there is a distribution of vertical velocity components v in a slice of height dz. I can then re-express the work done as a power per unit volume (F now being force per unit volume):

$$F v = \frac{1}{2}\, v\, dx'\, g\,(L - L_a)\,\frac{\rho}{T}$$

In Latex I'd use hats to indicate averages.

I've left in a dx' which was the old distance of rise, which determines the average temperature discrepancy between parcel and ambient. It's not obvious what it should now be. But I think the best estimate for now is the Prandtl mixing length. This is related to the turbulent viscosity, and in turn to the turbulent kinetic energy (per unit volume) TKE.

So now it gets a bit more handwavy, but the formula becomes

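$$\text{average power/vol (taken from wind)} \sim g\,\frac{L - L_a}{T}\,\mathrm{TKE}$$

This follows through to the rate of entropy removal; the rate of change of entropy is

$$\frac{dS}{dt} \sim -g\,\frac{L - L_a}{T^2}\,\mathrm{TKE}$$

(power divided by T, with the minus sign indicating removal)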
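The continuum bookkeeping can be sketched as follows, assuming TKE per unit volume is estimated as ½ρ times the summed variances of velocity samples, and replacing the unspecified constant of proportionality with a hypothetical time scale `tau` (seconds), which makes the power come out in W/m³. The velocity samples are made up for illustration, and the function names are hypothetical.

```python
from statistics import fmean

G = 9.81      # m/s^2
CP = 1004.0   # J/(kg K), dry air


def variance(xs):
    """Population variance of a sample (its mean removed)."""
    m = fmean(xs)
    return fmean([(x - m) ** 2 for x in xs])


def tke_per_volume(rho, u, v, w):
    """Turbulent kinetic energy per unit volume, 1/2 rho (u'^2 + v'^2 + w'^2) averaged."""
    return 0.5 * rho * (variance(u) + variance(v) + variance(w))


def wind_power_and_entropy_rate(lapse_env, temp, tke, tau):
    """Power per unit volume drawn from the wind, ~ tau g (L - La)/T * TKE (W/m^3),
    and the matching rate of entropy change per unit volume, -power/T.

    tau is a hypothetical stand-in for the unspecified turbulence constant."""
    power = tau * G * (G / CP - lapse_env) / temp * tke
    return power, -power / temp


# Hypothetical velocity samples (m/s) at one level
u = [5.2, 4.1, 6.3, 5.0, 4.4]
v = [0.3, -0.6, 0.8, -0.2, -0.3]
w = [0.4, -0.5, 0.2, -0.3, 0.2]
tke = tke_per_volume(1.2, u, v, w)
power, entropy_rate = wind_power_and_entropy_rate(0.0065, 288.0, tke, tau=100.0)
print(f"TKE {tke:.3f} J/m^3, power {power:.3e} W/m^3, dS/dt {entropy_rate:.3e} W/(m^3 K)")
```

With La below L the power is positive and the entropy rate negative, and both scale linearly with TKE.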

Temperature gradient as a source of entropy

If you have a steady temperature gradient, and a consequent heat flux Q determined by Fourier's Law:

$$Q = -k\,\frac{dT}{dz}$$

where k is a conductivity,

then the volume rate of creation of entropy is

$$\frac{dS}{dt} = Q\,\frac{d(1/T)}{dz} = -\frac{Q}{T^2}\,\frac{dT}{dz}$$

$$= k L_a T^{-2}$$

So what is k? Molecular conductivity would contribute, but where GHGs are present the main part is infrared, which is transferred from warmer regions to cooler by absorption and re-emission. In the limit of high opacity, this follows a Fourier law in the Rosseland approximation:

$$\text{flux} = -16\, s\, G\, n^2 T^3\,\frac{dT}{dz}$$

s is the Stefan-Boltzmann constant, G an optical parameter (see link), and n the refractive index. Three optical depths is often used as a rule of thumb for high opacity; we don't have that, but you can extend down by using fuzzy boundaries, where for example there is a sink region with transmission direct to space.

Update: I forgot to say the main thing about G which is relevant here, which is that it is inversely proportional to absorptivity (with an offset). IOW, more GHG means less conductivity.
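A sketch of the Rosseland conductivity k = 16 s G n² T³, assuming the standard optically thick form G = 1/(3κ) for a grey absorption coefficient κ. Here κ is a hypothetical parameter, and the offset mentioned in the update is not modelled. The sketch shows k scaling as T³ and falling as κ rises, i.e. more absorber, less conductivity.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def rosseland_conductivity(temp, kappa, n=1.0):
    """Radiative conductivity k = 16 sigma G n^2 T^3 with G taken as 1/(3 kappa).

    kappa: hypothetical grey absorption coefficient (1/m). The offset the post
    mentions for G is not modelled."""
    g_opt = 1.0 / (3.0 * kappa)
    return 16.0 * SIGMA * g_opt * n ** 2 * temp ** 3


def radiative_flux(temp, dtdz, kappa, n=1.0):
    """Fourier-law form of the Rosseland flux, Q = -k dT/dz (W/m^2, positive upward)."""
    return -rosseland_conductivity(temp, kappa, n) * dtdz


for kappa in (1e-4, 1e-3, 1e-2):
    k = rosseland_conductivity(288.0, kappa)
    q = radiative_flux(288.0, -0.0065, kappa)
    print(f"kappa {kappa:.0e} 1/m: k = {k:.1f} W/(m K), upward flux = {q:.2f} W/m^2")
```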

Update

I've made an error here. I assumed that the flow expansion was adiabatic. This is conventional, and relates to the time scale of the motion. But I've also assumed adiabatic conditions for the entropy balance, and that is wrong. There is a through flux of energy, mainly as IR, as indicated, and that flux carries entropy with it. So the formula should be:

$$\frac{dS}{dt} = (k L_a - Q)\,T^{-2}$$

where Q is the nett flow of heat. I'll correct below. It is significant, and may change the sign.

Balancing it all - lapse rate determined

Now we have an entropy source term and a sink term. In steady state entropy can't accumulate, so they balance:

$$k L_a T^{-2} \sim g\,\frac{L - L_a}{T^2}\,\mathrm{TKE}$$

or

$$L_a - L \sim -\frac{k L_a - Q}{g\,\mathrm{TKE}}$$

Obviously, there is an unspecified constant of proportionality (with time units), which comes from the nature of turbulence. But I don't think it should vary greatly with, say, wind speed.

So what can we say about the discrepancy between environmental lapse rate La and theoretical DALR L (=g/cp)?

- Proportional to k, the conductivity. So if GHGs transport heat in response to the temperature gradient, as they do, the lapse rate diminishes, away from L. With no GHGs, there is much less to separate L and La. Not so clear - see above correction.

- Inversely proportional to TKE (which depends on wind speed). So stronger wind brings the lapse rate closer to L.

- Proportional to (La - Q/k).
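To see how the balance behaves, the proportionality La - L ~ -(k La - Q)/(g TKE) can be turned into an equality with a hypothetical constant C and solved for La, giving La = (L + aQ)/(1 + ak) with a = C/(g TKE). The numbers below are illustrative only, with units left as loose as the proportionality itself; the point is the direction of the effects: as TKE grows La approaches L, and for large k it approaches Q/k.

```python
G = 9.81
CP = 1004.0
DALR = G / CP   # dry adiabatic lapse rate L, K/m


def balanced_lapse_rate(k, q, tke, c, dalr=DALR):
    """Solve La - L = -c (k La - Q) / (g TKE) for the environmental lapse rate La.

    c is a hypothetical constant standing in for the unspecified turbulence
    physics; k, q and tke are in whatever consistent units c is chosen for."""
    a = c / (G * tke)
    return (dalr + a * q) / (1.0 + a * k)


# Hypothetical values: k = 1, Q/k = 0.005 K/m, c = 1
for tke in (0.01, 0.1, 1.0, 10.0):
    la = balanced_lapse_rate(k=1.0, q=0.005, tke=tke, c=1.0)
    print(f"TKE {tke:>5}: La = {1000 * la:.2f} K/km (DALR {1000 * DALR:.2f} K/km)")
```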

So what about moisture? That is what the difference between La and L is usually attributed to.

I think moisture is best accounted for within the DALR formulation itself. The DALR is, again, L = g/cp, where cp is the specific heat of the gas (air). But in the derivation, cp is just the heat required to raise the temperature by 1 °C (OK, that is what specific heat means), and you could include in it the heat required to overcome phase change. That increases cp and brings down the lapse rate. The thing about the moist ALR is that water only has a big effect when it actually changes phase. That's a point in space and time. Otherwise moist air behaves much like dry. Of course, an environmental lapse rate is only measured after there has been much mixing.
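Folding phase change into cp can be sketched as an effective specific heat, cp_eff = cp + Lv·dqs/dT, where dqs/dT (hypothetical values below) is the rate at which saturation specific humidity changes with temperature, so that condensation during cooling supplies heat. This is a simplification that ignores the pressure dependence of saturation humidity; with dqs/dT = 0 it reduces to the DALR g/cp.

```python
G = 9.81       # m/s^2
CP = 1004.0    # J/(kg K), dry air
LV = 2.5e6     # J/kg, latent heat of vaporisation near 0 C (approximate)


def effective_lapse_rate(dqs_dt, cp=CP, lv=LV):
    """Lapse rate g / cp_eff, with cp_eff = cp + Lv * dqs/dT.

    dqs_dt (kg/kg per K) is how fast saturation specific humidity falls as the
    parcel cools; use 0 where there is no phase change (the dry case)."""
    return G / (cp + lv * dqs_dt)


# Hypothetical dqs/dT values; zero reproduces the DALR
for dqs in (0.0, 2e-4, 5e-4, 1e-3):
    print(f"dqs/dT = {dqs:.0e} 1/K: lapse rate {1000 * effective_lapse_rate(dqs):.2f} K/km")
```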

Thursday, October 23, 2014

Checking ENSO forecasts

A few days ago I commented here on the latest NOAA ENSO advisory:

"ENSO-neutral conditions continue.

Positive equatorial sea surface temperature (SST) anomalies continue across most of the Pacific Ocean.

El Niño is favored to begin in the next 1-2 months and last into the Northern Hemisphere spring 2015."

I repeated this at WUWT, and someone said, "but they have been saying that all year." So I ran a check on ENSO predictions.

The NOAA Climate Prediction Center posts a monthly series of Climate Diagnostics Bulletins (CDBs) here. They are full of graphs and useful information. They include compilations of ENSO predictions (Nino3.4), nicely graphed by IRI. I downloaded the plots for each month of 2014, and overlaid them with the observed values from this file.

It's an active plot, so you can click through the months. The year started out with a dip, mostly unforeseen. This coincided with the global cool in February. There was then an underpredicted recovery, and since then there has been a tendency for the index to be below predictions, especially in June and July.

CPC warns that only modest predictive skill is to be expected, and that is reinforced by the spread in forecasts. The index does indeed seem to move beyond the predicted range rather easily. It's not always overpredicted, though.

Here is the active plot. Just click the top buttons to cycle through the 9 months. The thick black overlay line shows the monthly observations.

You'll see some minor discrepancies at the start. I don't think this is bad graphing; I assume there have been minor changes to Nino3.4 between the monthly report and now. It looks like it could be a scaling error, but I don't think it is. I should note that I'm plotting the monthly value, while the forecasts are for three-month averages. I wanted to match the initial value, which is for one month. But Nino3.4 does not have much monthly noise, so I don't think averages would look much different.
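One way to gauge how much the monthly versus three-month choice matters is to compare each month with the centred three-month mean around it. A minimal sketch; the anomaly values are hypothetical, not the Nino3.4 record, and the function name is illustrative.

```python
def three_month_means(monthly):
    """Centred three-month running means of a monthly series.

    The first and last months lack a full window and are returned as None."""
    out = [None] * len(monthly)
    for i in range(1, len(monthly) - 1):
        out[i] = (monthly[i - 1] + monthly[i] + monthly[i + 1]) / 3.0
    return out


# Hypothetical monthly Nino3.4 anomalies (deg C), for illustration only
monthly = [-0.5, -0.6, -0.2, 0.1, 0.4, 0.3, 0.1, 0.2, 0.5]
smoothed = three_month_means(monthly)
gaps = [abs(m - s) for m, s in zip(monthly, smoothed) if s is not None]
print(f"largest monthly vs three-month difference: {max(gaps):.2f} C")
```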

Monday, October 20, 2014

More "pause" trend datasets.

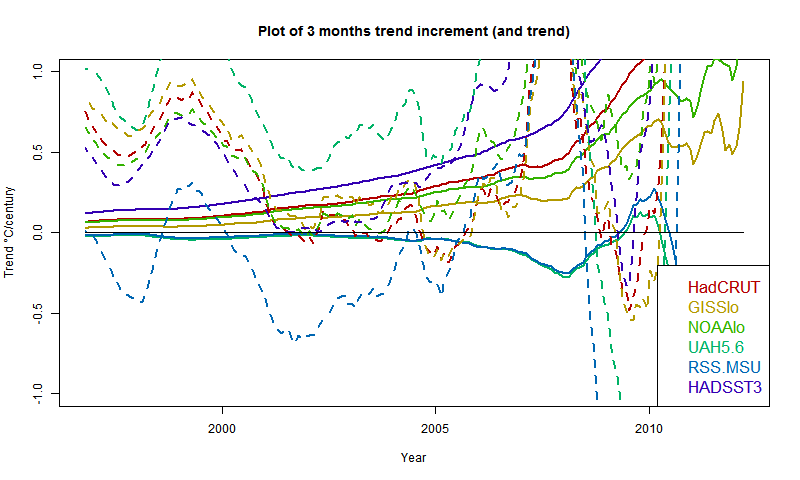

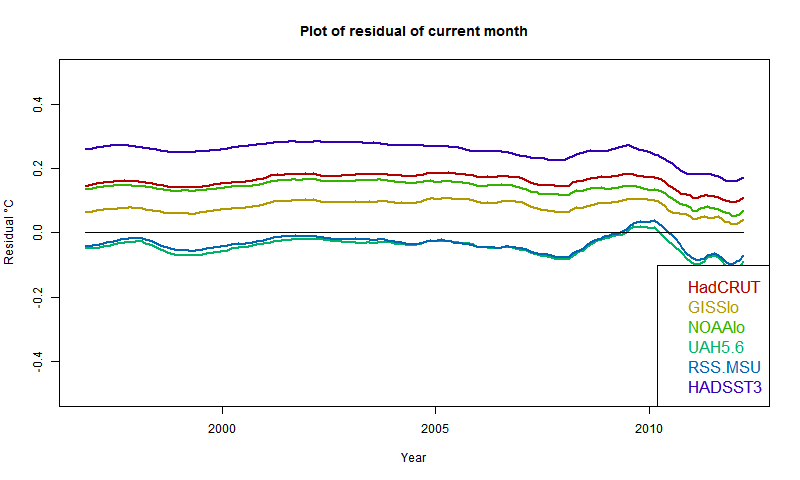

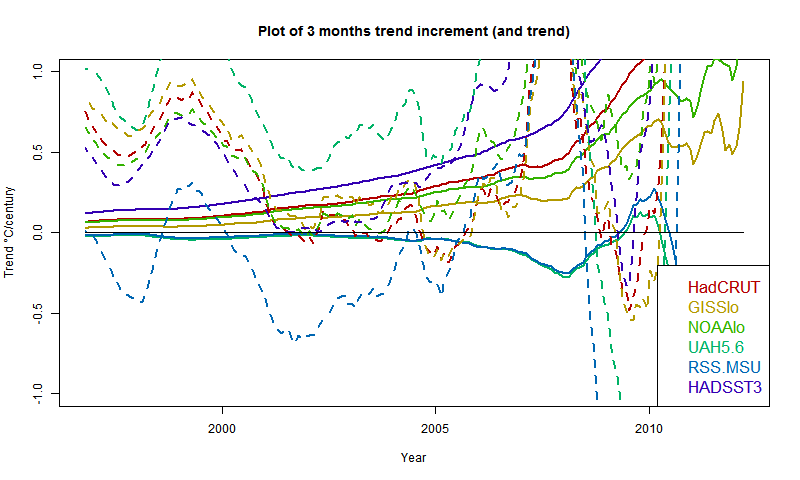

In two recent posts (here and here), I have shown, with some major indices, how trends measured from a variable start time within the last two decades to now have been rising. This is partly due to recent warmth, and partly due to the shifting effect (on trend) of past events as time passes.

This has significance for talk of a pause in warming. People like to catalogue past periods of zero or negative trend. A senior British politician recently referred to "18 years without warming". That echoes Lord Monckton's persistent posts about MSU-RSS, which does have, of all indices, by far the lowest trends over the period.
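The back-trend curves, and the "pause" they imply, can be computed along these lines: fit an ordinary least-squares trend from each start month to the latest month, then take the earliest start whose trend is zero or negative. This is a sketch assuming a plain monthly series and a hypothetical minimum window of 24 months; the series below is synthetic, not any of the indices, and the function names are illustrative.

```python
def ols_slope(ys):
    """Ordinary least-squares slope of ys against time steps 0, 1, 2, ..."""
    n = len(ys)
    x_mean = (n - 1) / 2.0
    y_mean = sum(ys) / n
    sxy = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(ys))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def back_trends(series, min_len=24):
    """Trend from each possible start month to the end of the series (start -> slope)."""
    return {start: ols_slope(series[start:])
            for start in range(len(series) - min_len + 1)}


def pause_start(series, min_len=24):
    """Earliest start whose trend to the present is non-positive (the longest 'pause')."""
    for start, slope in sorted(back_trends(series, min_len).items()):
        if slope <= 0.0:
            return start
    return None


# Synthetic monthly anomalies: 40 months of warming, then 40 of slight cooling
series = [0.01 * i for i in range(40)] + [0.39 - 0.005 * i for i in range(40)]
start = pause_start(series)
print(f"pause starts at month {start}, length {len(series) - start} months")
```

As warm months are appended, the late-start trends rise and the earliest non-positive start moves later or disappears, which is the shrinking pause the plots show.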

Here I want to show results about other indices. Cowtan and Way showed that over this period, the trend in HadCRUT was biased low because of non-coverage of Arctic warming. I believe that TempLS with mesh weighting would also account properly for Arctic trend, and this would be a good way to compare the two, and see the effect of full interpolation. I expected GISS to behave similarly; it does to a limited extent.

So a new active plot is below the jump. You can rotate between datasets and months separately. There is also a swap facility so you can compare the images. I also discuss the interpolated and gridded data groups separately.

Here is the main plot. Buttons rotate months and datasets. The emphasised set is in thicker black, on the legend too. (For some reason, NOAA is emphasised in red.) There is a reserved group of images for which the swap buttons work. It's initially empty, and you need at least two. In non-swap mode, click push to add the current image. In swap mode, click pop to remove the currently visible image from the set.


General comments much as before. There is a big contrast between satellite indices MSU-RSS (long pause) and UAH (short). Trends are rising as the months of 2014 progress (I'm extrapolating to November assuming continuation of current weather, as described in previous posts), which means it is getting harder to find long periods of non-positive trend ("pause").

Interpolation groups

As Cowtan and Way found, whether or not you see a pause depends a lot on whether you account for Arctic warming. TempLS typifies this: the grid version, like HADCRUT, effectively assigns global average behaviour to empty cells (of which the Arctic has many), missing the warming. TempLS mesh has full interpolation, like the kriging version of Cowtan and Way. So here is the comparison plot, with C&W, TempLS and GISS in dark colors:

It shows C&W and TempLS tracking fairly closely from 1997 to 2008, with GISS generally a bit below.
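The point about grid averaging can be shown with a toy example: averaging only the observed cells gives the same result as filling every empty cell with that average, so empty Arctic cells inherit global behaviour. Filling them from their neighbours instead lets the Arctic warmth through. The neighbour fill here is a crude one-dimensional stand-in, not the TempLS mesh method or Cowtan and Way kriging; equal-area cells and the anomaly values are assumptions for illustration.

```python
def grid_style_mean(cells):
    """Mean over observed cells only; empty cells (None) are skipped."""
    observed = [c for c in cells if c is not None]
    return sum(observed) / len(observed)


def fill_with_mean(cells):
    """Put the observed-cell mean into every empty cell."""
    m = grid_style_mean(cells)
    return [m if c is None else c for c in cells]


def fill_from_neighbours(cells):
    """Crude 1-D interpolation: an empty cell takes the mean of the nearest observed
    cell on each side. A stand-in for mesh interpolation, not the TempLS method."""
    filled = list(cells)
    for i, c in enumerate(cells):
        if c is None:
            left = next((cells[j] for j in range(i - 1, -1, -1) if cells[j] is not None), None)
            right = next((cells[j] for j in range(i + 1, len(cells)) if cells[j] is not None), None)
            near = [v for v in (left, right) if v is not None]
            filled[i] = sum(near) / len(near)
    return filled


# Hypothetical anomalies (C) for equal-area cells from south to north;
# the northernmost (Arctic) cells are unobserved
cells = [0.2, 0.3, 0.4, 0.5, 0.9, 1.2, None, None]
print(f"observed-only mean       {grid_style_mean(cells):.3f}")
print(f"empties set to that mean {sum(fill_with_mean(cells)) / len(cells):.3f}")
print(f"empties from neighbours  {sum(fill_from_neighbours(cells)) / len(cells):.3f}")
```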

Grid surface data

And here for comparison are HADCRUT, NOAA Land/Ocean and TempLS grid. I expect these to be fairly similar. TempLS and NOAA have been very close lately, but over this longer range, TempLS is closer to HADCRUT.

External sources

- HadCRUT 4 land/sea temp anomaly
- GISS land/sea temp anomaly
- NOAA land/sea temp anomaly
- UAH lower trop anomaly
- RSS-MSU lower trop anomaly
- Cowtan/Way Had4 Kriging