- Differential equations

- Fluids and Turbulence

- Error propagation

- GCM errors and conservation

- Simple equation analogies

- On Earth models

- Conclusion

Differential equations

An ordinary differential equation (de) system is a set of equations relating a number of variables and their derivatives. Generally the number of variables and equations is equal. There could be derivatives of higher order, but I'll restrict to one, so it is a first order system. Higher order systems can always be reduced to first order with extra variables and corresponding equations.

A partial differential equation system, as in a GCM, has derivatives in several variables, usually space and time. In computational fluid dynamics (CFD), of which GCMs are part, the space is gridded into cells or otherwise discretised, with variables associated with each cell, or maybe nodes. The system is stepped forward in time. At each stage there are a whole lot of spatial relations between the discretised variables, so it works like a time de with a huge number of cell variables and relations. That is for explicit solution, which is often used by large complex systems like GCMs. Implicit solutions stop to enforce the space relations before proceeding.
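A minimal sketch of the reduction to first order and of explicit time stepping. The example equation y'' = -y is chosen purely for illustration and is not from any GCM; the function names, step size and the choice of a Runge-Kutta stepper are assumptions. Introducing v = y' turns the second order equation into the first order system y' = v, v' = -y, which is then stepped forward from its initial state.

```python
import math

def rhs(state):
    """Right-hand side of the first order system y' = v, v' = -y."""
    y, v = state
    return (v, -y)

def rk4_step(f, state, dt):
    """One explicit fourth-order Runge-Kutta step of size dt."""
    k1 = f(state)
    k2 = f(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = f(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = f(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(
        s + dt * (a + 2 * b + 2 * c + d) / 6.0
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def integrate(f, state, dt, n_steps):
    """Step the system forward n_steps times; the result is one trajectory."""
    for _ in range(n_steps):
        state = rk4_step(f, state, dt)
    return state

# Initial state y(0) = 1, v(0) = 0 selects the trajectory y(t) = cos(t).
dt, n_steps = 0.01, 100
y_end, v_end = integrate(rhs, (1.0, 0.0), dt, n_steps)
print(y_end, math.cos(1.0))
```

The initial state is the only thing that picks out the trajectory here; a different starting pair (y, v) would give a different combination of cos(t) and sin(t).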

Solutions of a first order equation are determined by their initial conditions, at least in the short term. A solution beginning from a specific state is called a trajectory. In a linear system, and at some stage there is linearisation, the trajectories form a linear space with a basis corresponding to the initial variables.

Fluids and Turbulence

As in CFD, GCMs solve the Navier-Stokes equations. I won't spell those out (I have an old post here), except to say that they simply express the conservation of momentum and mass, with an addition for energy. That is, a version of F=m*a, and an equation expressing how the fluid relates density and velocity divergence (and so pressure with a constitutive equation), and an associated heat budget equation.

It is said, often in disparagement of GCMs, that they are not effectively determined by initial conditions. A small change in initial state could give a quite different solution. Put in terms of what is said above, they can't stay on a single trajectory.

That is true, and true in CFD, but it is a feature, not a bug, because we can hardly ever determine the initial conditions anyway, even in a wind tunnel. And even if we could, there is no chance in an aircraft during flight, or a car in motion. So if we want to learn anything useful about fluids, either with CFD or a wind tunnel, it will have to be something that doesn't require knowing initial conditions.

Of course, there is a lot that we do want to know. With an aircraft wing, for example, there is lift and drag. These don't depend on initial conditions, and are applicable throughout the flight. With GCMs it is climate that we seek. The reason we can get this knowledge is that, although we can't stick to any one of those trajectories, they are all subject to the same requirements of mass, momentum and energy conservation, and so in bulk all behave in much the same way (so it doesn't matter where you started). Practical information consists of what is common to a whole bunch of trajectories.
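As a toy illustration of "what is common to a whole bunch of trajectories", the sketch below uses the Lorenz 1963 system with its standard parameters. It is not a GCM or a CFD code, and the step size, run length, starting point and size of the perturbation are all assumed for illustration. Two solutions start 1e-8 apart; the code tracks how far apart they get and compares the long-run mean of z on each.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Lorenz 1963 right-hand side with the standard parameter values."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(state, dt):
    """One explicit fourth-order Runge-Kutta step."""
    def shifted(k, h):
        return tuple(s + h * ki for s, ki in zip(state, k))
    k1 = lorenz(state)
    k2 = lorenz(shifted(k1, 0.5 * dt))
    k3 = lorenz(shifted(k2, 0.5 * dt))
    k4 = lorenz(shifted(k3, dt))
    return tuple(
        s + dt * (a + 2 * b + 2 * c + d) / 6.0
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def run_pair(n_steps=40000, dt=0.01, eps=1e-8):
    """Run two nearly identical initial states side by side."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + eps, 1.0, 1.0)   # tiny change in the initial state
    sum_za = sum_zb = 0.0
    max_sep = 0.0
    for _ in range(n_steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        sum_za += a[2]
        sum_zb += b[2]
        sep = max(abs(p - q) for p, q in zip(a, b))
        max_sep = max(max_sep, sep)
    return sum_za / n_steps, sum_zb / n_steps, max_sep

mean_za, mean_zb, max_sep = run_pair()
print(f"time-mean z: {mean_za:.2f} vs {mean_zb:.2f}; largest separation: {max_sep:.1f}")
```

The two runs end up on quite different trajectories, yet their long-run averages stay close, because both are confined to the same bounded region of state space. That is the sense in which a bulk quantity does not depend on where you started.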

Turbulence messes up the neat idea of trajectories, but not too much, because of Reynolds Averaging. I won't go into this except to say that it is possible to still solve for a mean flow, which still satisfies mass momentum etc. It will be a useful lead in to the business of error propagation, because it is effectively a continuing source of error.

Error propagation and turbulence

I said that in a first order system, there is a correspondence between states and trajectories. That is, error means that the state isn't what you thought, and so you have shifted to a different trajectory. But, as said, we can't follow trajectories for long anyway, so error doesn't really change that situation. The propagation of error depends on how the altered trajectories differ. And again, because of the requirements of conservation, they can't differ by all that much.

As said, turbulence can be seen as a continuing source of error. But it doesn't grow without limit. A common model of turbulence is called k-ε. k stands for turbulent kinetic energy, ε for rate of dissipation. There are k source regions (boundaries), and diffusion equations for both quantities. The point is that the result is a balance. Turbulence overall dissipates as fast as it is generated. The reason is basically conservation of angular momentum in the eddies of turbulence. It can be positive or negative, and diffuses (viscosity), leading to cancellation. Turbulence stays within bounds.

GCM errors and conservation

In a GCM something similar happens with other perturbations. Suppose for a period, cloud cover varies, creating an effective flux. That is what Pat Frank's paper is about. But that flux then comes into the general equilibrating processes in the atmosphere. Some will go into extra TOA radiation, some into the sea. It does not accumulate in random walk fashion.

But, I hear, how is that different from extra GHG? The reason is that GHGs don't create a single burst of flux; they create an ongoing flux, shifting the solution long term. Of course, it is possible that cloud cover might vary long term too. That would indeed be a forcing, as is acknowledged. But fluctuations, as expressed in the 4 W/m2 uncertainty of Pat Frank (from Lauer) will dissipate through conservation.

Simple Equation Analogies

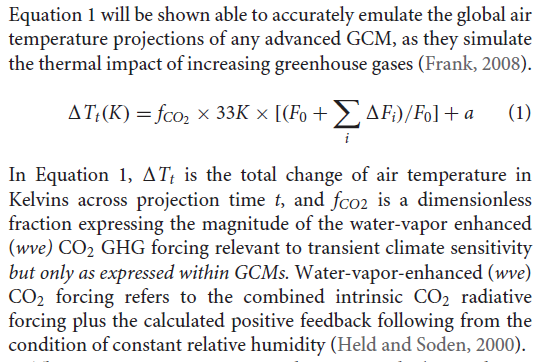

Pat Frank, of course, did not do anything with GCMs. Instead he created a simple model, given by his equation 1:

It is of the common kind, in effect a first order de

d( ΔT)/dt = a F

where F is a combination of forcings. It is said to emulate well the GCM solutions; in fact Pat Frank picks up a fallacy common at WUWT that if a GCM solution (for just one of its many variables) turns out to be able to be simply described, then the GCM must be trivial. This is of course nonsense - the task of the GCM is to reproduce reality in some way. If some aspect of reality has a pattern that makes it predictable, that doesn't diminish the GCM.

The point is, though, that while the simple equation may, properly tuned, follow the GCM, it does not have alternative trajectories, and more importantly does not obey physical conservation laws. So it can indeed go off on a random walk. There is no correspondence between the error propagation of Eq 1 (random walk) and the GCMs (shift between solution trajectories of solutions of the Navier-Stokes equations, conserving mass momentum and energy).
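A toy comparison of the two kinds of error propagation, under loudly stated assumptions: this is neither Pat Frank's calculation nor a GCM. The first case accumulates a random per-step error with nothing to remove it, as a bare d(ΔT)/dt = a F would. The second adds a linear restoring term, a hypothetical stand-in for the way a system that conserves energy sheds a surplus. The damping value, noise amplitude and ensemble size are arbitrary.

```python
import random
import statistics

def ensemble_spread(n_runs, n_steps, damping, noise_sd=1.0, seed=0):
    """Std dev across runs of x after n_steps of x <- x - damping*x + noise."""
    rng = random.Random(seed)
    finals = []
    for _ in range(n_runs):
        x = 0.0
        for _ in range(n_steps):
            # damping = 0 is a pure random walk; damping > 0 pulls x back to 0
            x += -damping * x + rng.gauss(0.0, noise_sd)
        finals.append(x)
    return statistics.pstdev(finals)

results = {}
for n_steps in (25, 100, 400):
    walk = ensemble_spread(1000, n_steps, damping=0.0)
    damped = ensemble_spread(1000, n_steps, damping=0.2)
    results[n_steps] = (walk, damped)
    print(n_steps, round(walk, 2), round(damped, 2))
```

Without the restoring term the spread keeps growing, roughly as the square root of the number of steps. With it, the spread levels off near noise_sd / sqrt(1 - (1 - damping)^2), however long the run.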

On Earth models

I'll repeat something here from the last post; Pat Frank has a common misconception about the function of GCMs. He says that

"Scientific models are held to the standard of mortal tests and successful predictions outside any calibration bound. The represented systems so derived and tested must evolve congruently with the real-world system if successful predictions are to be achieved."

That just isn't true. They are models of the Earth, but they don't evolve congruently with it (or with each other). They respond like the Earth does, including in both cases natural variation (weather) which won't match. As the IPCC says:

"In climate research and modelling, we should recognise that we are dealing with a coupled non-linear chaotic system, and therefore that the long-term prediction of future climate states is not possible. The most we can expect to achieve is the prediction of the probability distribution of the system’s future possible states by the generation of ensembles of model solutions. This reduces climate change to the discernment of significant differences in the statistics of such ensembles"

If the weather doesn't match, the fluctuations of cloud cover will make no significant difference on the climate scale. A drift on that time scale might, and would then be counted as a forcing, or feedback, depending on cause.

The real problem, Nick, is that usually convergence can only be proved using some kind of a stability assumption for the underlying system and a stable numerical scheme. For nonlinear systems it's a lot more complex because of strange attractors. In the absence of information about Lyapunov exponents, very little can be said.

Reynolds' averaging when applied to steady state Navier-Stokes is completely different than time accurate eddy resolving simulations. In the steady state case, it's possible that there are unique solutions and an infinite grid limit even though for more challenging problems involving separation that's not the case as we have lots of counterexamples.

Time accurate eddy resolving simulations suffer from no practical ability to assess, much less control, numerical error. That's pretty serious. There is no theory for an infinite grid limit. There is some evidence that there isn't one for LES anyway. So you run the code varying the hundreds of parameters until you get an answer you like. That's pretty much the state of the art. That's not really science, it's selection bias masquerading as science.

Paul Williams had a good talk a decade ago where he showed that the climate of the attractor could be a strong function of the time step for example.

David,

It may be hard to prove convergence, but I am describing how error propagation actually happens, not whether you can calculate it. It isn't by random walk. There may be better things than Reynolds averaging - I quote that to show how an acknowledged source of error (turbulence) translates into identifying a solution bundle (mean flow plus some bounds, admittedly not usually calculated) which then propagates without endless expansion, and which reaches a balance between new error (k) and dissipation. It's basically just to show that things are more complex than a random walk, but also manageable. And despite Pat Frank claiming that he is the only scientist, because he understands these things, in fact CFD folk have done a lot of thinking about it. They have to, else their programs blow up.

Let Eli try and simplify this. Let's say we measure some quantity every day and then we average over each year and get (this is a simplified example) 15 +/- 1 each year. Never mind the Units. And let's say we do this for 25 years.

Pat Frank says we have to accumulate the errors so the Frank answer after 25 years is 15 +/- 25.

The rest is just persiflage.

Isn't the real point of difference being that when measuring each year you have a constant base (within that year) for your +/- 1, as opposed to projecting forward in time where the start of each sequential year is building on the +/- 1 from the end of year 1? That is the real difference between modelling the past from measurements and modelling the future as projections.

Or to put it another way, what is the basis for resetting the error bounds at the start of each future year?

If the distribution stays the same, the point at which any period starts or ends will not make a difference, which is what Nick was saying when he showed that Frank's accumulation postulate fails utterly if you consider different periods such as months or seconds. It's the same distribution that is being sampled from.
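The arithmetic behind that objection can be sketched as follows. This assumes a fixed per-step uncertainty that is accumulated by root-sum-square, and it reuses the +/- 1 and 25 years of the example above; the function name and the choice of periods are illustrative only.

```python
import math

def accumulated(sigma_per_step, n_steps):
    """Root-sum-square accumulation of the same uncertainty over n_steps."""
    return sigma_per_step * math.sqrt(n_steps)

years = 25
sigma = 1.0  # the same +/- 1 attached to every step, whatever its length

by_period = {
    "yearly": accumulated(sigma, years),
    "monthly": accumulated(sigma, years * 12),
    "daily": accumulated(sigma, years * 365),
}
for name, value in by_period.items():
    print(f"{name:8s} +/- {value:.1f}")
```

The answer depends on an arbitrary choice of step length, which a property of the measured system should not. Straight linear accumulation, as in the +/- 25 figure, shows the same dependence even more strongly.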

Let me try to simplify this. Ignore P.Frank and D.Young and read this

Nick, thanks for the detailed explanation. It makes good sense to me. Ages ago I programmed a one-level (500 mb) baroclinic weather model for the US domain using FORTRAN IV and lots of punch cards and trial and error (1976-1977 for my Master's Degree course work). So I do have a simplistic inkling of how GCMs work. It appears that Pat Frank is barking up the wrong tree.

My concern about current long-range climate models is that they could potentially be subject to confirmation bias in the tuning process and selection of runs that are within an "expected" range while rejecting outliers, from what I have read. Setting an "expected" range is basically speculation and not science if my understanding is correct.

Bryan,

"selection of runs that are within an "expected" range while rejecting outliers"

Do you know that they do that? I imagine weather forecasters do, and I expect climate model outliers get looked at closely for possible faults. But there isn't any real reason to reject them. They do ensembles, and outliers are just part of the picture.

The effects of tuning are overstated too. They use it to try to pin down properties, often to do with clouds, that are hard to pin down otherwise. Basically using surplus equations to balance rank deficiency. They don't use tuning, as is often said, to make models match the recent surface temperature record. In fact, of course, surface temperature is just one of many variables that a GCM has to get right.

Nick,

I'm not at all an expert on long-range climate models. All that I have learned is second hand. So I'm not certain outliers are rejected from the ensemble sets, and that practice may vary from one modeling project to another.

I recall reading about tuning for particulate matter in the atmosphere for fitting the past and assumptions about it in the future, for example. It may be quite possible to get the right answers for model fitting for the wrong reasons and therefore the model results will be close to worthless.

I support long-range climate modeling, but I don't support using that information for policy at present. The models are only in their infancy now. I suspect it will take decades and maybe even centuries before climate models will ever be able to reliably portray the future for years and decades, much less centuries and millennia (I would like to see climate models that can predict future glacial cycles). Until the climate models are fully validated with many decades of data, they should not be used to make any policy decisions.

Bryan, There is a good post by Isaac Held on tuning a model for average temperature that shows just how sensitive even this averaged quantity can be when parameters are tuned.

I actually think one real problem is that computing costs are so astronomical for climate models that the ensembles can't cover even a very small part of the parameter space. This dramatically underestimates uncertainty even assuming that the statistics are normal (little evidence for this I believe). As LES advances in CFD (at a glacial pace) they have the same problem. It takes 6 months even to simulate 1 second of real time.

In any case, climate model grids are so coarse that truncation errors are at least as large as the changes in fluxes they attempt to model. In this setting, tuning really just causes cancellation of large errors to get a few quantities to match observations. I actually think the more honest climate modelers know this. Seems Held said something like this before he stopped blogging. Don't have time to look it up right now.

David Young said:

"It takes 6 months even to simulate 1 second of real time."

With my approach it takes about a millisecond to calculate 150 years of ENSO behavior. Work smart not hard.

Paul Pukite, As usual you are totally missing the point and blur critical technical differences. Laplace's tidal equation is a dramatically simplified linear model. It could be that it could improve climate models as some suggest.

But the overall picture (which you always deflect from with irrelevancies) is that climate models are like LES CFD simulations in that they are trying to model chaotic dynamics by resolving large eddies. Thus they are subject to the same limitations (which are severe) as LES calculations.

There is value in simple models of course, but your constant hyping of your "discovery" of this tidal model adds nothing to any discussion of climate or weather models.

David Young, Your naivete is showing. By far the biggest factor in natural climate variability is the ENSO signal, and this is essentially a standing-wave dipole that shows no turbulence or eddy component. Your constant hyping of turbulence is annoying and pointless. Just give it up.

David,

"There is a good post by Isaac Held on tuning a model for average temperature"

The post is shown on the blogroll on the right (at the bottom). The case you are talking about is not really tuning, but their attempts to include a model for indirect effects of aerosols via clouds. They did show that three different versions had substantially different effect on surface temperature. It is likely that this was factored into their final implementation. But that isn't quite the same thing as tuning to match the record. He discusses that in a general way, and whether it would be a good thing, but AFAIK doesn't say that people are doing it.

OK, but the point here is that with truncation and sub grid model errors at least as large as the changes in fluxes we are trying to model, there is no expectation of skill in any quantity except those used in tuning. That's a very serious drawback. It makes tuning a largely fruitless exercise since you are just causing large errors to cancel. A more meaningful area of research would be looking for Lyapunov constants in much smaller scale calculations.

I question the need for an ensemble of simulation runs to model the climate variability. Consider another case of geophysical variability, that of the delta in the earth’s length-of-day (LOD) over time. This variation is well characterized since ~1960 and exhibits changes on various time scales. On the scale of long-period tides, the detailed agreement to a tidal forcing model is excellent: https://geoenergymath.com/2019/02/13/length-of-day/

That’s the kind of focus that we need to understand natural global temperature variability. The equivalent of the LOD variation is the ENSO-caused variation in global temperature. These are both tidally-forced -- the consensus just doesn’t realize the latter yet because they don’t understand how to handle the non-rigid response to a forcing that’s as obvious as the rigid-body response due to the moon’s orbit around the solid-body earth. If they did, they would recognize that ENSO in terms of a fluid volume is just as deterministic a response as LOD is in terms of a solid body to a long-period tidal forcing. It’s just that the nonlinear response is a complicating factor, just as the Mach-Zehnder response is nonlinear in a LiNbO3 waveguide.

You mentioned Isaac Held, who directed research for a geophysical fluid dynamics lab, and has that now-quiescent blog. In one of his last blog posts I wanted to comment on a topic where he mentioned the vanishing of the Coriolis effect, but Dr. Held wouldn’t allow it, replying via email: “I did not accept your comment on post #70 because it was not on topic. The topic of the post is not the Coriolis force, with which most readers of my blog will be familiar.” Well, Dr. Held, geophysical fluid dynamics is a subset of geophysics and until we get our act together and start to understand how the fluid response differs from a rigid response, you won’t get anywhere understanding climate variability. I'm sure that your readers are all geniuses that realize the implication of the Coriolis effect vanishing at the equator, and this fact allows a simplification in Laplace’s Tidal Equations to where one can generate an analytical tidal response and just punch in the numbers to calibrate to the ENSO behavior. And that’s why you didn’t allow my comment, right ? ;)

The LOD model fit takes a millisecond to generate, and using precisely the same tidal forcing, the ENSO model fit takes a millisecond to generate. There is no ensemble to worry about because this is the real deterministic response. All the ensemble trajectories that we see in the GFD results are an oversight of models that don’t happen to incorporate this forcing. The reason that the ensemble is there is to provide a crutch to capture the variability that they can’t seem to reproduce via the current GCMs. That will change with time as this recent paper asserts : Switch Between El Nino and La Nina is Caused by Subsurface Ocean Waves Likely Driven by Lunar Tidal Forcing.

Except, Paul, that most scientists and the IPCC agree that climate is chaotic. You are just denying real science using oversimplified models. Look at Slingo and Palmer for example. The topic is climate models. Can you say anything that is true or even relevant to this topic? It seems you can't. That you persist in obfuscating these issues says more about you and your limitations.

David Young,

Who says climate is chaotic? Is it the people of northern latitudes that look out the window in January and see snow? Or is it the people that put on a blanket at night? Is it the sailors that rely on tide tables? Is it the scientists who notice the seasonal barrier for El Nino activation? None of these are indicators of a chaotic pattern in climate.

Your only job as a would-be climate scientist is to (1) either come up with a model better than the Laplace's Tidal Equations solution or (2) debunk LTE somehow.

This is a path forward for you, according to the Lin & Qian paper:

“Our findings suggest two possible ways to improve the current ENSO forecasts: (1) Adding the subsurface ocean wave to statistical ENSO forecast models and improving its representation in CGCMs, which may lead to an improvement of the 12-month ENSO forecast. Right now, none of the statistical models considers the subsurface ocean wave. In fact, the only two ENSO forecast models that can make good 12-month forecast, the NASA GMAO model and GFDL FLOR model (Supplementary Fig. 2), are assimilating carefully subsurface temperature. (2) Adding lunar tidal forcing to statistical models and CGCMs may provide important long-range predictability. Currently, the ocean-atmosphere coupled runs of climate models, such as the IPCC models historical runs and projection runs, are called “free runs” and are not expected to capture the timing of ENSO events in the real world. Adding lunar tidal forcing may help to simulate the correct timing of ENSO events, in addition to improving the simulated oscillation period and amplitude of ENSO. Recently, the ocean modelling community show strong interest in lunar tidal forcing because there are more and more evidences that tidal mixing plays a key role in global ocean circulation.”

You know who first said that "tidal mixing plays a key role in global ocean circulation"? That would be Carl Wunsch, who is the reviewer that gave the Patrick Frank paper a passing grade. Read this Nature article from 2000 by Wunsch: Moon, tides and climate

Of course this is all relevant to the topic at hand.

And since you said it will take years to run these models, I suggest you get cracking :)

Paul, This is tiresome. You are just too lazy to look for yourself. Slingo and Palmer Philosophical Transactions of the Royal Society A.

https://royalsocietypublishing.org/doi/10.1098/rsta.2011.0161

"Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. "

The paper is an excellent overview of how ensembles of simulations are needed to assess uncertainty. Weather and climate forecasting is all based on this science.

Your simplistic denial of this science is not scientific.

LOL, Lorenz is a toy equation. GCMs use Laplace's Tidal Equations. If you don't know the basics, go hit the books.

"scientists and the IPCC agree that climate is chaotic. "

Depends on the timescale and the level of detail you are interested in.

Over a few hours you can regard weather as deterministic. Over several decades you can regard global climate as deterministic.

It is over the intermediate period from a couple of weeks to a decade that chaotic behaviour dominates system behaviour.

You have zero evidence for this claim because there is no real science about whether climate is predictable much less computable. The attractor can have very high dimension and lots of bifurcations and saddle points. We simply don't know even what the Lyapunov constants are.

"It is over the intermediate period from a couple of weeks to a decade that chaotic behaviour dominates system behaviour."

How can you say this when climate has a clear non-chaotic annual cycle?

And at the equator, where the annual cycle is nearly nulled out, ENSO dominates and that is not chaotic.

Pp

Consider weather forecasting. Given data for midday on Tuesday, it is possible to produce a detailed and accurate forecast for 3pm Tuesday because on that timescale the system is deterministic. Repeated model runs with slightly different starting conditions agree.

Now use the same data to forecast a month ahead. Different runs can produce widely different forecasts because the system is chaotic on longer timescales.

Forecasting climate over decades is a different problem. You are not trying to forecast detailed weather. You are trying to project the average state of the system, which is not chaotic.

In terms of complexity, weather forecasting projects the instantaneous state of a system moving around a strange attractor over a short time scale. Climate models project how the position of the strange attractor changes over long timescales.

Use the same data

"In terms of complexity, weather forecasting projects the instantaneous state of a system moving around a strange attractor over a short time scale."

I will grant you that weather forecasting is more difficult than climate forecasting because the spatial scale is smaller. Turbulence always grows towards the small scale and it can stabilize or form an inverse energy cascade towards the larger scale. Look at the size of the "eddies" for ENSO -- which aren't really eddies but standing waves -- these standing waves are on the scale of the Pacific ocean. This is evidence of an inverse energy cascade with an attractor aligned to the annual signal and lunar tidal cycles and constrained by the boundary conditions of the equator and basin size. There is no way that an attractor with a standing wave dipole on the scale of the Pacific Ocean would form unless it was the result of an inverse energy cascade -- i.e. a non-turbulent transfer of energy.

So the question remains as to why climate science has had a hard time determining the cyclic pattern of ENSO. This is likely due to the nonlinear response of the topologically-defined wave equation. The solution that I found for Laplace's Tidal Equations (not the stupid toy Lorenz equations) also has a nonlinear response. This happens to be exactly the same mathematical formulation as a Mach-Zehnder modulator/interferometer (MZM), which is often used for optical modulation applications. I wrote about this yesterday at the Azimuth Project forum. The MZM-lookalike modulation explains the difficulty that climate scientists are having with unraveling the ENSO pattern. Just consider that MZM is used for encrypting optical communications, resulting in waveforms that are scrambled beyond recognition. This is a world of signal processing that climate scientists have not been exposed to.

So the bottom-line is this is not a chaotic regime, but a case of nonlinear modulation that is beyond the abilities of climate science to discern. I have a brute force technique that seems to work, but understand that scientific progress is not a smooth road. It's so much easier to be like David Young and just punt, blaming "chaos" on our inability to make any headway. That's exactly what the deniers want us to do -- hide behind the Uncertainty Monster that Judith Curry has fashioned for us.

https://www.nytimes.com/2017/01/12/movies/a-monster-calls-j-a-bayona-patrick-ness.html

Wrong URL link on that last line

https://www.wsj.com/articles/hey-ceos-have-you-hugged-the-uncertainty-monster-lately-11568433606

PP, Once again you throw out a word salad with no substance. If you are able to make an accurate ENSO forecast, you would have more than zero credibility.

"If you are able to make an accurate ENSO forecast, you would have more than zero credibility."

Scientific progress is measured by producing a better model than the current one. If it was between you, me, and a grizzly bear, I don't need to be able to out-run the grizzly -- all I need to do is out-run you. That's the reality of research.

Correct me if I'm wrong, but this is how I see it. I'll change from Climate/GCMs to a rocket for illustrative purposes.

We have made a new rocket fuel and our models suggest the rocket can reach 0.8c. Pat has made a model of rockets accelerating that fits current speeds (up to 0.1c) with known errors in measuring the thrust (but he doesn't consider relativity nor the speed of light). He runs this for the new fuel and gets an uncertainty range of 0.4c.

So, everyone is pointing out to Pat that 1.2c is impossible, but he's responding by saying "where's my MATHS wrong" and "it's uncertainty not error ranges". Well, sorry Pat, I don't think that's the error. In this case the physics (speed of light and relativity) are relevant and once his model moves away from the current conditions there is no guarantee that his model still applies as a good proxy to the real models (or the real world for that matter).

Back to climate/GCM's. Pat has shown that HIS model has large uncertainty. He now needs to show that this is relevant to the ACTUAL models before he can use his model uncertainty with the GCM's.

My naive question. Isn't Pet's model classic straw man?

jf,

Who is Pet?

He probably meant Pet Frank. Without using the term "strawman" that's how I framed the PubPeer entry. I stated that P. Frank's initial premise was invalid, which is the working definition of a flawed strawman argument.

https://pubpeer.com/publications/391B1C150212A84C6051D7A2A7F119

JF,

Yes, it is. You can't say from the fact that two differential equations share a common solution that they share a common error structure.

For example

y'= -y

and y''=y

both share the same solution exp(-t), but the error structure is totally different. The first is easy to solve, the second very hard (forward in time), because it also has the solution exp(t), which completely dominates error propagation.
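A small numerical check of that claim, as a sketch only: the size of the initial error, the end time, the step and the Runge-Kutta stepper are all assumptions. Both equations are started on the exp(-t) solution with the same error of 1e-6 in y(0), and the error at t = 10 is compared. For y'' = y the equation is written as the first order pair y' = v, v' = y.

```python
import math

def rk4(f, state, dt, n_steps):
    """Integrate state' = f(state) with explicit fourth-order Runge-Kutta."""
    for _ in range(n_steps):
        k1 = f(state)
        k2 = f([s + 0.5 * dt * k for s, k in zip(state, k1)])
        k3 = f([s + 0.5 * dt * k for s, k in zip(state, k2)])
        k4 = f([s + dt * k for s, k in zip(state, k3)])
        state = [
            s + dt * (a + 2 * b + 2 * c + d) / 6.0
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)
        ]
    return state

def first_order(state):
    """y' = -y"""
    return [-state[0]]

def second_order(state):
    """y'' = y, written as y' = v, v' = y"""
    y, v = state
    return [v, y]

delta = 1e-6               # error introduced in the initial value y(0)
t_end, dt = 10.0, 0.001
n = round(t_end / dt)
exact = math.exp(-t_end)   # the shared solution exp(-t) at t_end

err1 = abs(rk4(first_order, [1.0 + delta], dt, n)[0] - exact)
err2 = abs(rk4(second_order, [1.0 + delta, -1.0], dt, n)[0] - exact)
print(f"y' = -y : error {err1:.2e}")
print(f"y'' = y : error {err2:.2e}")
```

In the first equation the initial error decays as delta*exp(-t). In the second it excites the exp(t) solution and grows as delta*cosh(t), so by t = 10 it is far larger than the solution being tracked.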

I meant Pat. Sorry, not proficient with cell phone.

Climate isn't chaos and chaotic motion doesn't change the climate state. In the absence of a forcing change, a slight perturbation in global mean temperature damps in time. Random volcanos, ENSO, and the 11-year solar cycle all have very predictable short-term effects on global temps without impacting the long-term average.

Chubbs

I just wanted to try to summarize the most important points here because it's a topic that is mostly not understood as the comments here illustrate.

1. Nick is correct that Frank's article is not correct. It mischaracterizes the nature of errors when solving PDE's.

2. That said, the truncation and sub grid model errors in climate models are much larger than the changes in fluxes being computed.

3. That means skill can only be expected in quantities involved in tuning the model. Everything else will be wrong to one degree or another. And in fact that's pretty much the case.

4. Global average temperature is probably dictated by conservation of energy and tuning for TOA radiation balance and ocean heat uptake and that's perhaps why it is reasonably well predicted.

5. There is a lack of progress or even any fundamental research on the critical scientific issues involved such as how attractive the attractor really is. This will determine if climate is predictable or not.

6. There is a lot of work that just runs these models over and over again without addressing if those runs are meaningful. This is good for modelers to progress in their careers but is not good for science generally.

"5. There is a lack of progress or even any fundamental research on the critical scientific issues involved such as how attractive the attractor really is. This will determine if climate is predictable or not."

This is mostly negativity swill. Do you really think you're going to be successful in dissuading scientists from trying to advance the research? There is clear evidence that ENSO is not close to being chaotic or random. Consider if a forcing signal is convolved with an annual impulse, the Fourier spectrum will be symmetric about the 0.5/year frequency. This is very easy to check with the ENSO time series:

https://imagizer.imageshack.com/img922/2671/YmyFPN.png

The spectral intra-correlation clearly shows an inverse energy cascade locking in to an annual impulse. The annual signal is not seen in the spectrum because it splits the forcing into multiple pairs of satellite peaks. (If the forcing is perfectly balanced to a mean of zero, such as with a tidal forcing, the annual cycle precisely zeros) Basic signal processing stuff that you, David Young, are apparently too lazy to do, but not lazy enough to stop with the concern troll act.

Pukite, You didn't respond to anything I said despite quoting from it. My point is general and is about numerical solution of PDEs and not about ENSO. I know you are fascinated by your discovery of something trivial about simple models, but it's irrelevant in a general context.

You really are slow aren't you? We can solve Navier-Stokes analytically in the form of Laplace's Tidal Equations along the equator and thus reproduce the ENSO time-series precisely. Everyone else is free to calibrate their numerical solutions against this model (you can thank me for saving gazillions of CPU cycles). Described in this chapter: https://agupubs.onlinelibrary.wiley.com/doi/10.1002/9781119434351.ch12

David - Without references or examples of "numeric" error you are arguing from authority, which is not very convincing. You are drawing your predictability experience from small-scale flows with completely different length scales. Also it is much easier to predict the average effect of a constant perturbation to a chaotic system than the chaotic details.

Since ENSO has been mentioned, below is a recent paper that finds strong evidence for an ENSO mode in the Indian Ocean during the last glacial maximum. The point being that knowledge of the details of chaotic motion is not needed for useful prediction.

Chubbs

https://agupubs.onlinelibrary.wiley.com/doi/10.1029/2019PA003669

Well, Paul Williams has a great example for the Lorenz system on how numerical time step errors can produce a vastly different "climate" than a much smaller time step. You can find it at his web site I think.

This is all just 1st year numerical analysis of ODEs and PDEs. You approximate a derivative by a finite difference, resulting in a truncation error that is typically second order in the mesh spacing. These errors decay for an elliptic system as you go away from the source of the error. For hyperbolic systems they do not. They usually don't blow up either, but how accurate the long term averages are is not well understood for chaotic systems. There is often a disturbing lack of grid convergence, casting doubt on even the existence of a unique "climate."
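For readers who want to see the "second order in the mesh spacing" statement in action, here is a minimal sketch. It assumes the centred difference as the finite-difference scheme (the comment does not name one) and estimates the observed order by halving the spacing `h`. The function names and the test function are hypothetical choices for the example.

```python
import math

def central_difference(f, x, h):
    """Centred finite-difference approximation to f'(x) with spacing h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def observed_order(f, dfdx, x, h):
    """Estimate the order p of the truncation error from spacings h and h/2.

    If the error behaves like C * h**p, halving h divides it by 2**p,
    so p is approximately log2(error(h) / error(h/2)).
    """
    e_coarse = abs(central_difference(f, x, h) - dfdx(x))
    e_fine = abs(central_difference(f, x, h / 2.0) - dfdx(x))
    return math.log2(e_coarse / e_fine)

if __name__ == "__main__":
    # sin has the known derivative cos, so the error can be measured directly.
    print("observed order:", observed_order(math.sin, math.cos, 1.0, 0.1))
```

Halving the spacing cuts the error by roughly a factor of four, which is what second order means. This only shows the local truncation error of one derivative; it says nothing by itself about how such errors propagate in a long chaotic integration, which is the point under dispute.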

"Useful" predictions can indeed be qualitative in nature. Quantitative predictions are however usually needed to guide decision making.

ENSO is a standing wave dipole, you better deal with it David Young. You can't just close your eyes and continue to rant that it's turbulent motion, because it isn't.

An unlinked reference – that's compelling. Probably irrelevant anyway since climate models aren't Lorenz systems. Williams certainly doesn't appear to be troubled by the issues you raise since he uses and improves climate models. Thumbed through his latest paper, which stated that “the spatial pattern of clear-air turbulence over the North Atlantic diagnosed from reanalysis data is successfully captured by the climate model”.

Even Frank's paper argues against your position. Disregarding the bogus error analysis, he finds that a simple emulator, linear in forcing, fits climate model predictions well, i.e. model results are always closely linked to forcing. As Nick points out, model behavior is constrained by conservation equations. Lo and behold, Frank has just discovered simple energy balance physics, same formulation as Nic Lewis. Temperature = Forcing times a constant. Tune it up and it's good to go. That's why quantification is perfectly fine for policy. Warming is proceeding just as expected.

Forgot to sign comment above - Chubbs

Chubbs, You of course didn't respond to any of the science I mentioned. You instead deflected to irrelevancies. None of us knows Paul Williams's inner thoughts. We know his results, which show weaknesses that are not easily fixed. Eddy resolving chaotic simulations all have serious issues with controlling numerical error, i.e., classical methods are completely useless.

There is an actual well developed science of numerical analysis. I've been working in this field for 40 years. If there is a counter argument, I'd like to hear it. Nick Stokes says "yes but Rossby waves are much easier than aeronautical flows." That argument has some truth even though it's based on weather models, which use vastly finer grids than climate models. But there are also clouds and convection, and all experts admit these are very weak points in our modeling ability. They are also critical to getting the energy flows right.

Sod's last post illustrates very well and copiously that climate models are poor at predicting patterns of change. They might get average temperature roughly right because of conservation of energy, but energy balance methods do that too.

What you have said here is really an appeal to authority. You quote various papers in a field that is less rigorous and less well founded on theory than numerical analysis and then you speculate about another scientist's opinions. Why would you make such a weak argument?

David - Funny, to me you also are providing an argument from authority. One that doesn't jibe with my experience. I worked on turbulence parameterization for weather forecasting models 40+ years ago. You are harping on issues that are irrelevant or that were solved by experts decades ago.

I have the same feeling about your evidence that you do about mine. A lukewarm blog article doesn't cut it for me. To convince me you need to describe how the errors you describe would manifest and then point to examples in climate model results.

We can agree that climate model results follow a simple energy balance, with a close link to forcing changes. There is uncertainty in cloud feedbacks, hence the spread in ECS, but this is a physics issue, not numerical imprecision. The only way to make progress is by using fluid modeling and observations. Fortunately we have improved observations from satellites, and high-resolution cloud models; so steady progress is being made. There is now strong evidence for positive cloud feedback from: deeper tropical convection, low clouds over warming tropical SST, and shifting of storm tracks N into regions with weaker sunlight. All simple thermodynamic effects that are independent of the state of chaotic motion, and that can be well-captured by climate models.

Per your last para, I don't care if you find scientific evidence weak. I am not going to change my approach.

Chubbs

Chubbs, You are not even able to repeat accurately what I am saying, which is complicated. Short term prediction of Rossby waves is vastly different from long term attractor properties.

1. Rossby waves are rather easy to model by fluid dynamics standards. Pressure gradients are usually quite mild and quite dense grids and small time steps can be used. On longer time scales, no one really knows what the effects of lack of correct dissipation and turbulence modeling are as far as I can see. Held makes a further argument that they are essentially a 2D phenomenon. That would need quantification as I don't see a strong argument for it. 2D flows also have chaotic behavior such as vortex streets whose onset and dissipation rate are very sensitive to details. And there is separation, which is always problematic to model.

2. Most CFDers would say that in 2D even eddy resolving simulations are still problematic for determining long term behaviors. In 3D, no one knows because a single simulation takes months to years of computer time. That's with a single grid, model, and other parameters of the method of which there are hundreds. No expert believes these are practical for even very simplified engineering simulations anytime soon. There is essentially no fundamental science here or any math and vastly inadequate experience.

3. Climate models are so far outside the usual parameters of fluid simulations in that they can't pass even simple tests such as grid convergence, sensitivity to parameters, etc. Part of this is just the immense computational resources required. This is proven I think by the truncation error argument. But sub grid models perhaps have larger still errors.

4. You are just wrong about SOD's post. It's very carefully sourced. Mainstream climate science has acknowledged this in the last decade with regard to the pattern of SST warming, i.e., models get it wrong and that pattern causes them to warm the atmosphere too fast. That's all been proven by just running the models themselves.

5. It's a fundamental part of settled fluid dynamics that turbulence must be modeled in virtually all flows which have turbulent features to get accurate results. This effect is order 1 in many situations and is orders of magnitude larger than the changes in fluxes we care about. Tropical convection is a prime example of a situation where modeling is inadequate. Perhaps it's the reason models show such a strong tropospheric hot spot. BTW, Real Climate has an excellent permanent page showing the discrepancy between troposphere measurements and climate models.

6. There are some excellent papers out there, for example Slingo and Palmer in the Transactions of the Royal Society I believe, that lay things out really well. The best atmospheric scientists are actually more honest about these things than many engineering fluid dynamicists. That's probably because it's impossible to hide things like failed forecasts, which happen all the time and everyone knows about. Climate modeling might be a tractable problem in 70 years, just like LES might be viable in that time frame. Right now, it's largely an academic exercise.

7. On clouds, you selectively cite the literature. There are plenty of studies that show that "reasonable" parameters for cloud and convection models can give a very broad range of ECSs. No one really knows because a full study would take decades of time. I recall one paper where the parameters had to be chosen outside the best empirical values to get a reasonable climate. I recall another that showed that low ocean clouds would remain unchanged until we reached 1200 ppm CO2. I would place low confidence in the latter paper too. However, the model to model comparison papers are probably correct. Nic Lewis cited cloud fraction as a function of latitude. Models are all over the place and none looked skillful.

There is plenty of evidence out there to draw these conclusions. However, different situations have different prognoses.

All those points that David Young has written down are practically useless. What is someone going to do, happen along and read them and then get depressed and sulk home? No, what an enterprising scientist or engineer will do is ignore them and take the equations and see if they can figure out a simplifying ansatz and apply that to some problem. That is ALWAYS how research breakthroughs are made. Pessimists such as Young are poison to a research environment.

The point here, PP, is that science should be about truth and objectivity. It is not about your mental health or motivations (which are obviously not optimal) or those of other scientists. Right now, we have a huge problem with bias and positive results bias in science. People like you who deny these facts are contaminating science with bad results and pseudo-science.

In medicine for example, people deserve the best science and objective facts. It's a complex subject just like climate. There are thousands of used car salesmen out there promoting various treatments and supplements as keys to good health. If science isn't reliable, there is a lot of harm done with harmful "treatments" that cost a lot of money. My motivation is to uncover the truth and to advance fundamental science and mathematics. If that's not enough for you, you are part of the problem. Your track record of personal attacks and misrepresentations of the fundamentals of fluid dynamics shows that you need to do some introspection.

There are plenty of very interesting problems to work on. They are hard of course, but the best scientists revel in the complexity and challenges.

David Young, "mental health" -- we all have our cross to bear LOL

There are essentially 3 arguments in regards to geophysical fluid dynamics going on here. (1) There is the argument about turbulence at the small scale. (2) There is the emergence of erratic standing wave behavior (ENSO) and periodic forcings (annual, etc.) at the large scale. (3) And there is the gradual change in the mean climate brought about by GHGs, volcanic activity, and long-term precession.

The issue is that one can't determine what impact the latter has on the former unless you understand the fundamental behavior of each one very well. So let me go back to an interesting feature of ENSO that hasn't been characterized much at all. This chart shows the Fourier amplitude spectrum of ENSO folded against itself about the semiannual frequency, 0.5/year.

https://imagizer.imageshack.com/img922/2671/YmyFPN.png

Since the two frequency bands are strongly correlated, all of the features of this spectrum should be considered as satellite sidebands of the annual frequency. What this means is that an annual impulse provides the primary window for the forcing, but that this annual window is modulated by another forcing that is more mysterious. The conventional thinking would point to wind, but the more recent idea involves tidal forces directing the subsurface waves (link up in the thread).
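The folding property is easy to reproduce on a toy signal. The sketch below is an illustration only: it reads the "annual impulse" as multiplying a forcing by a once-per-year impulse train on a monthly grid (my assumption about what is meant), and the forcing is a made-up pair of sinusoids, not ENSO data. It then checks that the amplitude spectrum at frequency f matches the one at 1/year - f.

```python
import cmath
import math

SAMPLES_PER_YEAR = 12  # monthly grid (an assumption for the example)

def annual_impulse_modulate(signal):
    """Keep one sample per year and zero the rest (multiply by an impulse train)."""
    return [s if n % SAMPLES_PER_YEAR == 0 else 0.0 for n, s in enumerate(signal)]

def amplitude_spectrum(signal):
    """Plain DFT amplitudes; index k is k / len(signal) cycles per sample."""
    n = len(signal)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * m / n)
                    for m, s in enumerate(signal)))
            for k in range(n)]

def folding_mismatch(n_years=16):
    """Largest difference between the spectrum and its mirror about 0.5/year."""
    n = n_years * SAMPLES_PER_YEAR
    # Hypothetical forcing with periods of 3.8 and 2.3 years; not real data.
    forcing = [math.sin(2 * math.pi * t / (SAMPLES_PER_YEAR * 3.8))
               + 0.5 * math.cos(2 * math.pi * t / (SAMPLES_PER_YEAR * 2.3))
               for t in range(n)]
    amp = amplitude_spectrum(annual_impulse_modulate(forcing))
    # On this grid index k is k / n_years cycles per year, so index n_years is
    # 1/year and the fold point 0.5/year sits at k = n_years / 2.
    return max(abs(amp[k] - amp[n_years - k]) for k in range(n_years + 1))

if __name__ == "__main__":
    print("max mismatch about 0.5/year:", folding_mismatch())
```

The mismatch comes out at rounding-error level for any real-valued forcing, because once-a-year sampling makes the spectrum periodic in 1/year and a real signal makes it conjugate-symmetric. Symmetry in a toy signal is therefore a property of the sampling, and by itself does not show that the measured ENSO series is driven this way.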

If one tries to fit small-scale turbulence into this or a temperature perturbation due to AGW, I think you will be heading down a dead-end. The temperature perturbation of AGW on the ENSO annual impulse window may be a no-operation for all we know. The annual factor and any lunar tidal actors may override the scale of the AGW term.