Since I did quite a bit of reading about it, I thought I would set down the issues here. The basic point is that there are a large number of thermometers around the world, trying to measure the environment for various purposes. Few now are primarily for climate science, and even fewer historically. But they contain a lot of information, and it is the task of climate scientists to select stations that do contain useful climate information. The main mechanism for doing this is the archiving that produces the GHCN V3 set. People at WUWT usually attribute this to NASA GISS, because they provide a handy interface, but it is GHCN who select the data. For current data they rely on the WMO CLIMAT process, whereby nations submit data from what they and WMO think are their best stations. It is this data that GISS and NOAA use in their indices. The UKMO use a similar selection with CRUTEM, for their HADCRUT index.

At WUWT, AW's repeated complaint was that I don't care about accuracy in data collection (and am a paid troll, etc). That is of course not true. I spend a lot of time, as readers here would know, trying to get temperature and its integration right. But the key thing about accuracy is, what do you need to know? The post linked above pointed to airports where the sensor was close to the runways. This could indeed be a problem for climate records, but it is appropriate for their purpose, which is indeed to estimate runway temperature. The key thing here is that those airport stations are not in GHCN, and are not used by climate scientists. They are right for one task, and not used for the other.

I first encountered this WUWT insistence that any measurement of air temperature had to comply with climate science requirements, even if it was never used for CS, in this post on supposed NIWA data adjustments in Wellington. A station was pictured and slammed for being on a rooftop next to air conditioners. In fact the station was on a NIWA building in Auckland, but more to the point, it was actually an air quality monitoring station, run by the Auckland municipality. But apparently the fact that it had no relation to climate, or even weather, did not matter. That was actually the first complaint that I was indifferent to the quality of meteorological data.

A repeated wish at WUWT was that these stations should somehow be disqualified from record considerations. I repeatedly tried to get some meaning attached to that. A station record is just a string of numbers, and there will be a maximum. Anyone who has access to the numbers can work it out. So you either have to suppress the numbers, or allow that people may declare that a record has been reached. And with airport data, for example, you probably can't suppress the numbers, even if you wanted. They are measured for safety etc, and a lot of people probably rely on finding them on line.
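To make the point concrete, here is a minimal sketch of how anyone with the numbers can work out a station's record, and what "disqualifying" one reading amounts to. The dates and readings are made up for illustration, and `record_high` is a hypothetical helper, not anything a met office actually runs.

```python
def record_high(readings, rejected=()):
    """Return (date, value) of the highest reading, ignoring rejected dates."""
    kept = {day: temp for day, temp in readings.items() if day not in rejected}
    return max(kept.items(), key=lambda item: item[1])

# Hypothetical daily maxima in °C for a single station (illustrative only).
readings = {
    "1976-07-03": 31.9,
    "2003-08-09": 32.6,
    "2018-06-28": 33.2,
}

# With all the numbers available, the record is simply the maximum.
print(record_high(readings))

# Rejecting one reading does not remove the notion of a record;
# the next highest value takes its place.
print(record_high(readings, rejected={"2018-06-28"}))
```

The second call shows why rejection only shifts the record to an earlier reading, whose provenance may be no better.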

Another thing to say about records is that, if rejected, the previous record stands. And it may have no better provenance than the one rejected. WUWT folk are rather attached to old records. Personally, I don't think high/low records are a good measure at all, since they are very vulnerable to error. Averages are much better. I think the US emphasis on daily records is regrettable.

The first two posts in the WUWT series were somewhat different, being regional hot records. So I'll deal with them separately.

Motherwell

The WUWT post is here, with a follow-up here. The story, reported by many news outlets, was briefly this. There was a hot day on June 28 in Britain, and Motherwell, near Glasgow, posted a temperature of 91.8°F, which seemed to be a record for Scotland. A few days later the UKMO, in a blog post, said that they had investigated this as a possible record, but ruled it out because there was a parked vehicle with its engine running (later revealed as an ice-cream truck) close by. As might be feared, this led in comments to accusations of dishonesty and incompetence at the UKMO, even though they had initiated and reported the investigation. But one might well ask, how could that happen at a UKMO station?

Well, the answer is that it isn't a UKMO station. As the MO blog explained, the MO runs: "a network comprised of approximately 259 automatic weather stations managed by Met Office and a further 160 manual climate stations maintained in collaboration with partner organisations and volunteer observers". Motherwell is a manual station. It belongs to a partner organisation or volunteers (the MO helps maintain it). They have a scheme for this here. You can see there that the site has a rating of one star (out of five), and under site details, the response to the item "Reason for running the site" is "Education". (Not, I think, climate science).

So Motherwell is right down the bottom of the 400+ British stations. Needless to say, it is not in GHCN or CRUTEM, and is unlikely to ever be used by a climate scientist, at least for country-sized regional estimates.

So to disqualify? As I said above, you can only do this by suppressing the data, since people can work out for themselves if it beats the record. But the data has a purpose. It tells the people of Motherwell the temperature in their town, and it seems wrong to refuse to tell them because of its inadequacy for the purposes of climate science, which it will never be required to fulfil.

The WUWT answer to this is, but it was allowed to be seen as a setter of a record for Scotland. I don't actually think the world pays a lot of attention to that statistic, but anyway, I think the MO has the right solution. Post the data as usual (it's that or scrub the site), and if a record is claimed, vet the claim. They did that, rejected it, and that was reported and respected.

Ouargla, Algeria

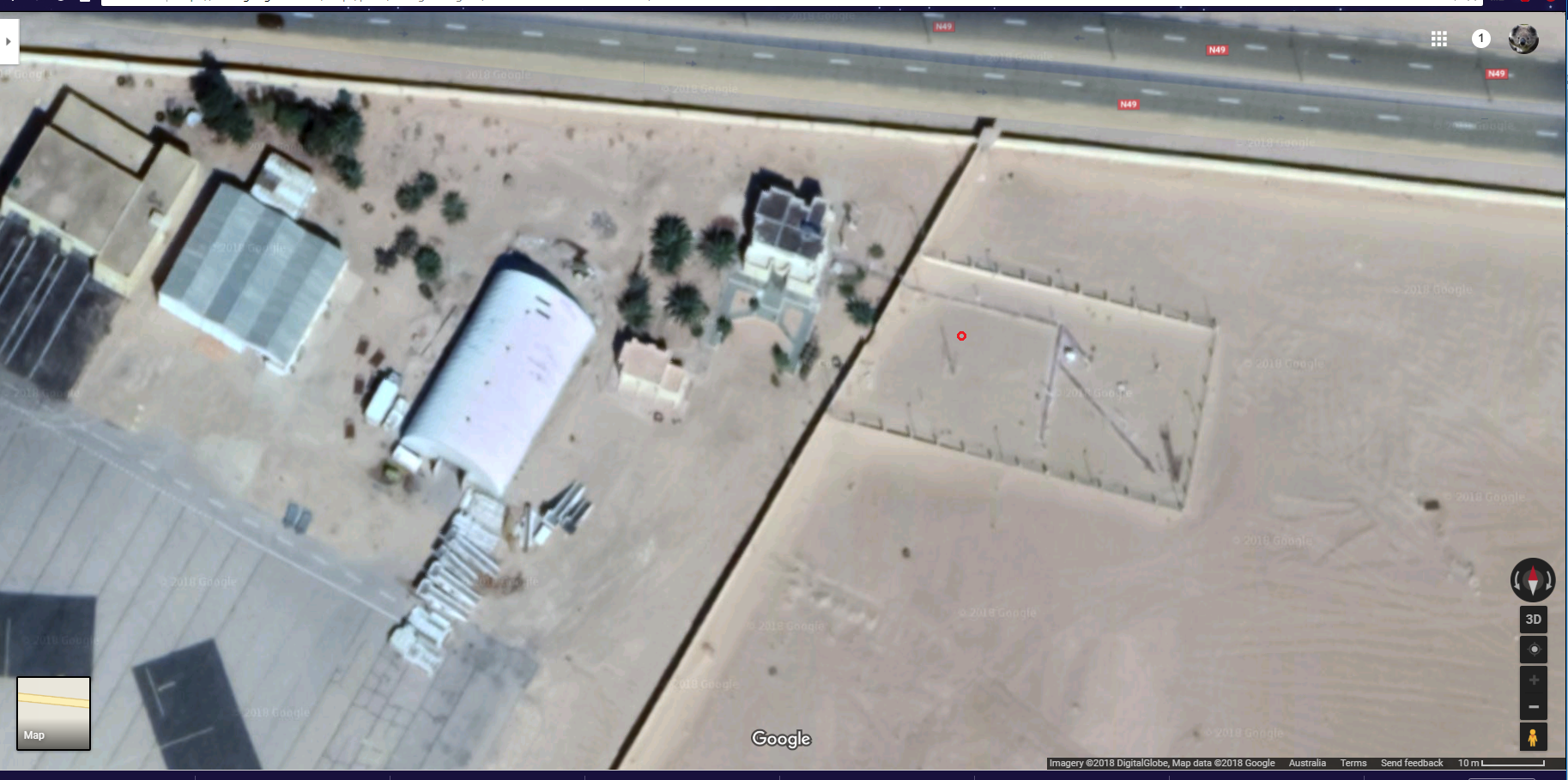

The WUWT post is here. On 5 July, this airport site posted a temperature of 124°F, said to be a record for Africa. There have been higher readings, but they are apparently considered unreliable. The WUWT heading was "Washington Post promotes another potentially bogus “all time high” temperature record". But "potentially bogus" is what they would call a weasel word. In fact, all that is known is that the site is an airport (not highly trafficked). There is speculation on where the sensor is located, and no evidence that any particular aircraft might have caused a perturbation. The speculated location is below (red ring).

It is actually 92 m from the nearest airport tarmac, and 132 m from the nearest black rectangle, which are spaces where an aircraft might actually be parked. It seems to me that that is quite a long way (it is nearly 400 m to the actual runway), and if one was to be picky, the building at about 25 m and the road at 38 m would be bigger problems. But these are not airport-specific problems.

A point this time is that Ouargla is indeed a GHCN monthly station. For the reasons I have described, it does seem relatively well fitted for the role (assuming that the supposed location is correct).

Los Angeles

The final post (to now) was on high temperatures around Los Angeles on 6 and 7 July. Several places were said to have either reached their maximum ever, or the maximum ever for that day. The WUWT heading was "The all time record high temperatures for Los Angeles are the result of a faulty weather stations and should be disqualified". But it is very odd to suggest that a station should be disqualified from expressing its own record high. That is just the maximum of those figures, so if you disqualify the record high, you must surely disqualify the station. But why only those that record a record high?

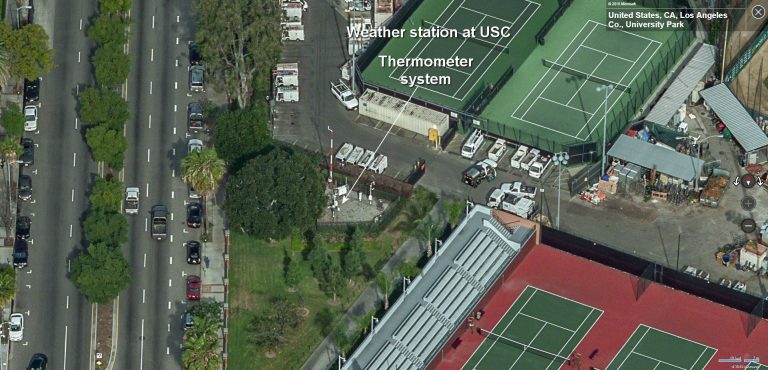

Anyway, the complaints came down to the following:

| USC | LA Power and Light | Van Nuys Airport | Burbank Airport |

There were also sites at UCLA and Santa Ana Fire Station, which were on rooftops. Now the first thing about these is that they are frequently quoted local temperature sites, but apart from USC, none of them get into GHCN V3 currently (Burbank has data to 1966). So again, they aren't used for climate indices like GISS, NOAA or HADCRUT. But the fact that, whatever their faults, they are known to locals means that the record high, for that site, is meaningful to LA Times readership. And it is apparent from some of the WUWT comments that the suggestion that it was in fact very hot accords with their experience.

Again, the airport sites are clearly measuring what they want to measure - temperature on the runway. And climate scientists don't use them for that reason. UCLA seems to be there because it is next to an observatory. I don't know why the Fire Station needs a thermometer on the roof, but I expect there is a reason.

As a general observation, I think it is a rather futile endeavour to try to suppress record highs on a generally hot day because of site objections. Once one has gone, another will step up. And while one record might conceivably be caused by, say, a chance encounter with a plane exhaust or aircon, it would be a remarkable coincidence for this to happen to tens of stations on the same day. Occam would agree that it was a very hot day, not a day when all the planes aligned.

or you can use it and adjust

http://berkeleyearth.lbl.gov/stations/29063

http://berkeleyearth.lbl.gov/stations/167409

For those who believe that the US temperature trend is too high because of poor location of temperature stations or nefarious adjustments of raw data, please consider the following:

The US temperature trend based on the USCRN network (state of the art, pristine locations) is 0.128 C/decade larger than that of Climdiv, the big adjusted network (trends from Jan 2005 til now).

I think you got the unit or decimal wrong somehow. The difference is very small, but it is interesting that the pristine network shows more warming. Homogenisation does not work too well near the end and the last 1.5 years are even explicitly not homogenised; so one should be careful interpreting the differences at the end. Climdiv needs homogenisation for accurate trend estimates. But still.

It is also a climate "sceptical" legend that urban stations have to warm more. A station that is surrounded by an urbanizing environment may show too much warming, but urban stations also regularly relocate to better locations, which are typically cooler. Whether the long-term trend has a warming or cooling error will depend on where the station started and where it is now. That is an empirical question, and as stations often started in the centre of cities it is easily possible that the current surrounding is less urban.

VV "climate "sceptical" legend"... you are generous. I call it a Conspiracy Theory :-)

Victor,

I have checked again and find no error. With the last month, June, included the trend difference is 0.1264 C/decade.

It's the trend of differences, USCRN minus ClimDiv, month by month, with 5/9 conversion for degrees Celsius.
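A minimal sketch of that calculation, assuming two monthly series in °F: take the month-by-month difference, scale by 5/9 (a difference needs only the scale factor, not the 32° offset), and fit a least-squares line. The series below are synthetic stand-ins, not the real USCRN or ClimDiv data, and the function names are illustrative.

```python
import numpy as np

def diff_f_to_c(diff_f):
    """Convert a temperature *difference* from °F to °C (scale only, no offset)."""
    return np.asarray(diff_f, dtype=float) * 5.0 / 9.0

def trend_per_decade(monthly_values):
    """Least-squares slope of a monthly series, expressed per decade."""
    t_years = np.arange(len(monthly_values)) / 12.0
    slope_per_year = np.polyfit(t_years, monthly_values, 1)[0]
    return 10.0 * slope_per_year

# Synthetic example: 162 months (Jan 2005 to Jun 2018), values in °F.
months = np.arange(162)
climdiv_f = 52.0 + 0.005 * months          # made-up baseline series
uscrn_f = climdiv_f + 0.002 * months       # drifts 0.002 °F/month relative to it

diff_c = diff_f_to_c(uscrn_f - climdiv_f)  # USCRN minus ClimDiv, in °C
print(trend_per_decade(diff_c))            # trend of differences, °C/decade
```

Because a least-squares fit is linear in the data, the trend of the differences equals the difference of the two trends when both series cover the same months.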

I agree that the trend difference sounds large, and not "sustainable" in the long run, but it is only 13 years so far, so the difference has not become very large yet.

I can think of various explanations in terms of difference between pristine countryside and the usual location of temperature stations. However, I suspect that there might be a drift caused by successive addition of new USCRN stations, that hasn't been fully compensated for.

Using just the annual data (2005-2017) I get a difference of 0.114 C/decade, close enough to Olof's that it could be explained by his inclusion of additional months.

USCRN: 0.567 C/decade

CLIMDIV: 0.453 C/decade

Intriguing. Will have a look at the data and report back.

Olof: Could you point me to a good source for up-to-date USCRN temperature trends (and/or USCRN/USHCN comparisons)? I'm aware of Hausfather et al. 2016, but not of more recent work.

Nick: It seems like there are two issues here:

1) Temperature records for a given site: for these, poor siting is only a problem when temperature contamination might be changing over time (e.g., an ice cream truck, or building a NEW tarmac). If the cause of the poor siting is a constant over the life of the temperature sensor, then the record should be valid.

2) Temperature records for a region: The problem here is that regional records are absolute temperatures, not anomalies, and therefore have the problem that opening a new site, or closing an old one, can easily create a record or reduce the chance the record is surpassed.

But in general, single time-period records at single locations are not going to be great climate indicators because generally, climate trends are clearest in comparison to natural variability when averaged over large regions and long time periods.
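The second issue can be illustrated with a small sketch. The station names and all-time maxima below are invented; the point is only that a regional record taken as the maximum over whatever stations exist can change when the network changes, with no change in climate.

```python
def regional_record(station_maxima):
    """Return (station, value): the highest all-time maximum across a network."""
    return max(station_maxima.items(), key=lambda item: item[1])

# Hypothetical all-time maxima in °C for an existing network.
network = {"coast": 31.0, "hill": 29.5, "town": 32.4}
before = regional_record(network)

# A new site opens in a hotter valley and reports its first summer.
expanded = dict(network, valley=33.1)
after = regional_record(expanded)

print(before, after)
```

Here the regional record rises only because a hotter location started reporting, which is one reason absolute records are weak climate indicators.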

But the irony here is that the site that has historically promoted records based on single time periods at single stations representing large regions is WUWT. E.g., https://wattsupwiththat.com/2014/01/28/no-new-continent-hottest-temperature-records-since-1978/

-MMM

I think the issue is that this is not as hard as it seems. There are sensor readings at two sites, Darwin and Tahiti (comprising ENSO), that essentially provide the principal component of temperature variability for the planetary average.

If the temperatures of all the sites collected reflect this same variability, then the data is not corrupted. On the other hand, if the data was corrupted, the global temperature variability would no longer match that of ENSO. End of discussion.

The discussion would then turn to how to predict ENSO. Now, that's what I would consider real climate science :)

MMM,

I got the data here, National Temperature index for CONUS:

https://www.ncdc.noaa.gov/temp-and-precip/national-temperature-index/

The claim of badly-sited weather stations is Anthony Watts's "thing" so I guess his reaction to you calling him out is understandable. He runs a Conspiracy Theory blog after all, hostility is their MO.

"I think the US emphasis on daily records is regrettable."

Nick, thanks for making this point. It IS regrettable when you think about it - one wonders if it has more to do with the demand for media headlines than anything else. I am pretty sure the Australian BOM tends to use monthly records, I feel better about those.

I have worked with temperature measurements for over 40 years, including data validation and analyses, primarily associated with air quality measurements and weather forecasting, but also as a hobby in learning about micro and meso scale meteorology. I have been monitoring temperature for fun in backyards at our old house where there was heavy shade from trees and at our new house where there is no shade. I also routinely look at the many thousands of temperature observations reported on various weather networks, including the Weather Underground, OGIMET, and NOAA's Aviation Weather global map. The variability is amazing and much of it is real, but I often see examples that appear to be influenced by very localized siting issues (microscale) and not very representative of a larger area, even 10s of meters away. I discussed some of these issues here.

In my mind, the biggest challenge for climate scientists is monitoring and hopefully avoiding changes in these local site influences over time. Ideally, all of our climate sites should be at rural locations where the local site features change little over time and thus cannot have an influence on temperature trends. I realize this is much easier said than done, although I commend the USCRN for doing a very good job in that regard. I've said this here before, but I believe we need a GCRN with similar design and attributes used by the USCRN. Again much easier said than done, but if enough people are in agreement it can be done. I suspect a small percentage of GHCN sites might qualify already, but we need many more. Many of the other sites are still useful for other purposes, such as tracking urban heat island effects that can sometimes be very significant.

Nick, as you have said many times, working with anomalies helps to minimize spatial differences in temperature related to siting for better making local, regional, and global integrations and resulting trends. However, it does not account for changes in local siting issues over time which can affect the anomaly trends. It's those changes over time that all too commonly occur and greatly reduce the accuracy of temperature trend assessments, whether they be local, regional, or global. I realize that various homogenization schemes have been developed to try to get around this issue, but I still believe homogenization is not likely to reduce the overall uncertainty in temperature anomaly trends much, if at all. After several decades of operation, the USCRN should provide data that could allow a better assessment of how siting issues may or may not affect other nearby sites. If I manage to live that long, it will also be interesting to look at regional effects as well, especially in areas where there are a lot of changes in siting influences over time.
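A rough sketch of that distinction, using synthetic annual series: a constant siting offset drops out when anomalies are formed against a station's own baseline, while a siting change partway through the record stays in the anomalies and inflates the trend. All numbers here (the 0.2 °C/decade signal, the 2.5 °C offset, the 0.5 °C step in 2005) are assumptions chosen for illustration.

```python
import numpy as np

years = np.arange(1990, 2020)
signal = 0.02 * (years - years[0])        # assumed true warming: 0.2 °C/decade

def anomaly(series, base=(1990, 1999)):
    """Subtract the station's own mean over the base period."""
    mask = (years >= base[0]) & (years <= base[1])
    return series - series[mask].mean()

def trend_per_decade(series):
    """Least-squares slope in °C per decade."""
    return 10.0 * np.polyfit(years, series, 1)[0]

well_sited = 10.0 + signal
constant_bias = 12.5 + signal             # always 2.5 °C warmer (e.g. a rooftop)
step_change = 10.0 + signal + np.where(years >= 2005, 0.5, 0.0)  # siting changed in 2005

# The constant offset vanishes in anomalies, so the two records agree exactly.
print(np.allclose(anomaly(well_sited), anomaly(constant_bias)))

# The step change survives and raises the fitted trend above the true signal.
print(trend_per_decade(anomaly(well_sited)), trend_per_decade(anomaly(step_change)))
```

Detecting and removing steps like the one in `step_change` is the job homogenization schemes attempt; this sketch only shows why anomalies alone do not do it.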

"I realize that various homogenization schemes have been developed to try to get around this issue, but I still believe homogenization is not likely to reduce the overall uncertainty in temperature anomaly trends much, if at all. After several decades of operation, the USCRN should provide data that could allow a better assessment of how siting issues may or may not affect other nearby sites. "

Check out Hausfather et al: https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1002/2015GL067640. It shows not only the difference in noisiness between raw sites & USCRN, but also how homogenization actually does a decent job of bringing stations into better (but not perfect) alignment.

Thanks for the link. I downloaded the PDF. The results for 10 years are encouraging, but I would still like to see 30 years or more for a more thorough analysis.
