I dealt with a rather similar occurrence of this issue last year. There I showed an example where Melbourne daily maxima given to 1 decimal (dp) were averaged over a month, for several months, and then averaged again after rounding to nearest integer. As expected, the errors in averaging were much less than 1°C. The theoretical is the standard deviation of the unit uniform distribution (sqrt(1/12) approx 0.29, divided by the sqrt of the number in the average, and the results were close. This time I did a more elaborate averaging with a century of data for each month. As expected this reduced the error (discrepancy between the 1dp mean and the 0dp mean) by a factor of 10.

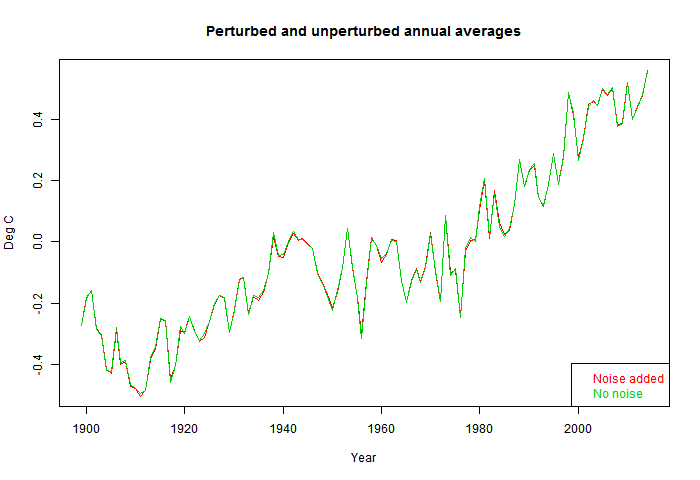

I also showed here that for the whole process of averaging over time and globally over space, adding white noise to all monthly averages of amplitude 1°C made almost no difference to the global anomaly time series.

The general response that there is something special about the measurement errors which would make them behave differently to the rounding change. And there are usually arguments about whether the original data was really as accurate as claimed. But if one could somehow have perfect data, it would just be a set of rather similar numbers distributed over a similar range, and there is no reason to expect rounding to have a different effect. Nor is there any kind of variation that could be expected to have different effect to rounding, as long as there is no bias; that is, as long as the errors are equally likely to be up or down. If there is bias, then it will be propagated. That is why bias should be the focus.

Here is a table of contents for what is below the fold:

Random walk

I think a useful way of viewing this is to think of the process of building the average. I'll stick to simple averages here, formed by just summing N numbers and dividing by N. The last step is just scaling, so we can focus on the addition. If the elements just have a mean value and a random error, then the cumulative sums are a classical one dimensional random walk, with drift. If the mean can be subtracted out, the drift is zero, and the walk is just the accumulation of error over N steps. Now if the steps are just a unit step either up or down, equally likely and independent from step to step, the expected distance from the origin after N steps is sqrt(N). It could be up or down, or of course it could be zero - back at the start. The reduced result from N steps reflects the inefficiency of random walk as a way of getting anywhere. With averaging, the step is of varying length, from a distribution, but again if independent and with sd σ, the expected distance is σ*sqrt(N). If not independent, it may be larger. For the simplest autocorrelation, Ar(1), with correlation factor r between 0 and 1, the distance is amplified by the Quenouille correction sqrt((1+r)/(1-r)). But it would take a very large correlation to bring the distance up close to σN.Extreme rounding

At WUWT, a commenter Mark S Johnson was vigorously arguing, and I agree, that it was all about sampling error, and he said that you could actually round the heights of US adult males to the nearest foot, and still get an average to the nearest inch. I blanched at that, but he insisted, and he was right. This is the test I described:I tried it, and Mark's method did still do well. I assumed heights normally distributed, mean 5.83, sd 0.4. Centered, the expected numbers were

4.5 5.5 6.5 7.5 190 6456 3337 17Weighted average is 5.818, so it is within nearest inch.

Mark suggested 5.83 was the correct mean, and I chose a sd of 4 to ensure that 7 ft was infrequent but not unknown. I was surprised that it did so well, but I had an idea why, and I was interested. Here's why it works, and what the limits are:

Binning and Poisson Summation.

Numerically, the discrete separation of data into "bins" through rounding has a lot in common with integration formulae. The mean is ∫p dC, where C is the cumulative density function (cdf); the rounded version makes a sum Σ pΔC. There are a great variety of finite interval integration formulae, going back to Newton-Cotes, and the almost equally ancient Euler-MacLaurin formula, which relates the sum of regular spaced samples ito the integral with error terms involving the powers of the spacing h and the end-point derivatives. The error here is polynomial in h, and depending on the first derivative that is discontinuous at the end-point. But that raises an interesting question - what if there isn't an end-point, or if there is and all the derivatives are zero? It turns out that the approach to the integral, with diminishing h, can be faster than any power of h. The key is a very elegant and powerful formula from nearly 200 years ago - the Poisson summation formula.At its simplest, this formula equates the sum of equally spaced samples of a function with a similar sum of samples of the Fourier transform:

Σ f(ih) = Σ F(k/h)

where F is the Fourier transform (FT) in the convention F(ω)=∫f(t) exp(-2iπt) dt and h is the spacing.

Sums and integrals here are from -∞ to ∞, and summed over whatever looks like an index (i,k etc).

This is an integral approximation because F(0) is the integral, and F(1/h) will be the first error term. If F tapers rapidly, as it will when f is smooth, and if h is small, the error will also be small.

From above, mean M = Σ kh ΔC_k.

The cdf C does not work so well with the Fourier transform, because it doesn't go to zero. But if we let the mean μ of the probability density function (pdf) P vary, then the derivative

dM/dμ = Σ kh ΔP(kh+μ), since P is the derivative of C

and with this shift, summing by parts

Σ kh ΔP(μ)_k = Σ hP(kh+μ) = Σ Þ(k/h)exp(2kπt/h)

using now Þ for FT of P

The practical use of these series is that the k=±1 terms are enough to describe the error, unless h is very large indeed. So in real terms, since for pdf the integral is 1, then

dM/dμ = ≅ Σ kh ΔP(μ)_k = 1 + 2*Þ(1/h)*sin(2πμ/h) + ...

or M = μ + Þ(k/h)*h/π * sin(2kπμ/h)

This tells us that the binned mean M is exact if the mean μ of the pdf P lies on an integer point of the binning (a ruler mark) and oscillates between, with another zero half-way. The maximum error is Þ(k/h)*h/π.

In the case of the normal distribution assumed here, Þ(1/h)*h/π = h/π exp(-2*(πσ)²). And in our particular case, h=1, σ=0.4, so the maximum excursion (if the mean were 5.75ft) is 0.0135, or about 1/6" (inch). That's still pretty accurate, with a ruler with 1 foot spacings. How much further could we go? With 2ft spacing, it gets dramatically worse, about 1.75" error. Put more generally, binning with 2.5σ spacing is good, but 5σ starts losing accuracy.

I think that robustness is remarkable, but how much does it depends on normal distribution? The Poisson formula gives a way of thinking about that. The FT of a gaussian is another Gaussian, and tapers very fast. That is basically because the pdf is maximally smooth. If higher frequencies enter, then that tapering that governs convergence is not so fast, and if there is actually a discontinuous derivative, it reduces to polynomial order. And of course we never really know what the distribution is; it is generally inferred from the look of the histogram. However there are often physical reasons for it to be smooth. Or perhaps that overstates it - the most powerful argument is, why not? What physics would cause the distribution to have kinks?

Caerbannog has been pushing this sort of thing. Here are a couple of his best that might amuse you

ReplyDeletehttps://twitter.com/caerbannog666/status/914501386905124865

https://twitter.com/caerbannog666/status/893469378125078530

But the last time we discussed this Eli pointed to how voltages are measured to arbitrary accuracy

https://moyhu.blogspot.com/2016/04/averaging-temperature-data-improves.html?showComment=1461420363354#c6502775355858010117

Thanks, Eli,

DeleteYes, the Tooth Bunny's addition of noise is very similar. I see that I had promised to raise the AVR example, but I forgot. I suspect there will be a sequel. Someone brought up something similar here, but without the convincing link.

Hi Nick

ReplyDeleteI did some simple experiments with this. From memory (details may not be exact):

I took the GHCN3 data and added a random error with an SD of 1C to every monthly observation. Then I fed the station data though both HadCRUT-like and GISTEMP-like temperature averaging algorithms, and compared with the results from the original data. The RMS error in the resulting monthly global means was IIRC 0.01C for the HadCRUT algorithm and 0.02C for the GISTEMP algorithm for recent decades. (Infilling reduces coverage bias at a cost of increasing sample noise. Since coverage bias dominates, that's a win. The statistics on this were done in the 70's by a group in Moscow, some of whom later went to NOAA, but I don't think are widely understood by the user community.)

Note that this is 1C in the monthly temperatures (not the daily obs). So we'd either need a much bigger error in the dailies (bit enough to be immediately obvious), or errors which persist over a month. I wondered if correlated errors could contribute more, and so instead of adding a random error to each station for each month, I picked a random error for each station and year. The results were effectively unchanged.

Kevin

Kevin,

DeleteOn correlated errors, I think a good rule of thumb is the Quenouille factor, sqrt((1+r)/(1-r)), applied to the sd. r is the autocorrelation coefficient that you would use in Ar(1). It's not so good for r close to 1. But you could use it for the daily data; it will give an expansion of the error which won't change much with the monthly/annual scale processing. But in your case, clearly even allowing for that still leaves plenty of noise damping at the annual/spatial scale.

I see that Poisson summation was popularised by the crystallographer Ewald. It was called "Ewald's trick" in those more innocent days.

The Ewald summation as is known, is used to sum the electrostatic contributions to the force and energy in molecular simulations since the are very long range and traditional summation and radius cut off methods give a large error.

DeleteSame things happens with gravitational forcing. The long-range power law is 1/(r0+r(t))^3 for tidal disturbances, and with r(t) smaller than r0 , this will expand in a Taylor's series and the terms will alternate in sign. Bookkeeping of these cyclic terms in reciprocal space is straightforward and it is then easy to see how something like ENSO derives directly from the lunar and seasonal cycles.

Deletehttps://i0.wp.com/imageshack.com/a/img923/919/0RjPtp.png

Still have to do the diffEq solution in real space otherwise people won't believe you.

I mainly go to WTFUWT? to watch old man Watts repeatedly dust off the same old and tired language cliches. I also go to WTFUWT? to watch their extremely long and very bad blog science. Then their are those topics that I know Nick will reply to, those threads, that's all I look for, Nick's very patient factual posts and the never ending illiterati replies (there are very few people over there that are able to provide a sanity check, don't you know) .

ReplyDeleteEli's green plate theory also seems to have outed another bunch of illiterati.

I'm sort of thinking most of them stopped going to school at age 12 so that they could drive the family tractor.

The math, the proof, is right there, staring them in the face. Yet they don't believe it, because they can't believe it, because it doesn't fit into their own worldview. Something must be wrong with your math, because They believe in their math, so much so, that it is as strong a belief as knowing, for sure, that God exists with 111% certainty.

Note to self: I personally don't think that The Bible makes for a very good math book.

Yes Everett!

DeleteI'm actually reading (little parts of) the dissertation of Anders Knutsson Ångström, Knut's son:

A study of the radiation of the atmosphere

to be found in

https://openlibrary.org/books/OL18016447M/A_study_of_the_radiation_of_the_atmosphere

Within this 150 pages long stuff you find, at the beginning of chapter 3:

The outgoing effective radiation of a blackened body in the night must be regarded as the sum of several terms: (1) the radiation from the surface toward space given, for a "black body" by Stefan's radiation law; (2) the radiation from the atmosphere to the surface, to which must be added the sum of the radiations from sidereal bodies, a radiation source that is indicated by Poisson by the term " sidereal heat."

and on page 49 you see:

These considerations have given a value of the radiation from a perfectly dry atmosphere, and at the same time they lead to an approximate estimate of the radiation of the upper atmosphere, which is probably chiefly due to carbon dioxide and a variable amount of ozone.

And all that was written in... 1915.

When ignoring the source, stubborn skeptics of course immediately would deny that as 'warmist pseudoscience'.

As another example, Faraday and Lord Rayleigh determined the sloshing dynamics of the ocean long ago:

Delete"On the other, there is a link to the first historical observation of the period-doubling route to chaos. This is an experiment by Michael Faraday" with a periodically driven nonlinear system and dates back to the year 1831.87 Faraday constructed a large, 18-foot-long vibrating plate which held a shallow layer of water. He observed how 'heaps' of liquid were oscillating in a sloshing motion. In particular he reported that "each heap recurs or is re-formed in two complete vibrations of the sustaining surface." In other words, he observed the first step in a period-doubling cascade. Later, in 1883, Lord Rayleigh confirmed these results, and today the sophisticated methods of chaos theory and modern computer-controlled experiments are performed to study these transitions from order to chaos in similar system"

From looking at the current research, just about every GCM develops biennial oscillations in their models of ENSO. That's essentially a period doubling of the yearly cycle. Which means that they haven't really improved much in this area in comparison to what Faraday (1831) observed and Rayleigh (1880) explained.

Why are you arguing with a guy that lives on a boat wandering around the Caribbean because he is likely wanted for tax evasion in the USA?

ReplyDeleteHe's expressing an all too common line of thinking in a forum with lots of readers. I think the proper science needs to be stated there.

DeleteAnybody who has experience with experimental science understands the role of precision and what significance is required when taking measurements. This guy Kip Hansen is clearly like a Wondering Willis -- no bunker experience, no formal education, and no clue.

Deletewhut,

DeleteMy first comment in the thread began:

"You do have over a century of scientific understanding against you. And you give almost no quantitative argument. And you are just wrong. Simple experiments disprove it."

But I think the reasons need to be set out for the readership, which I tried to do.

Nick,

ReplyDeleteTowards the end of that thread on error propagation, you appeared to express an interest in possibility theory ...

Bounding probabilistic sea-level projections within the framework of the possibility theory

http://iopscience.iop.org/article/10.1088/1748-9326/aa5528

This is only provided in the secular sense, not in either an advocacy or dissent sense (it is not paywalled).

Thanks, Everett,

DeleteI expressed some interest mainly because I challenged them to come up with something substantive, and someone did. But I noted that the version I saw seemed to require dipping into multi-valued logic, which meant "we aren't in Kansas any more". If you have a result that can only be communicated to people comfortable with multi-valued logic, that is not a lot of progress.

Your link does seem to seriously try to communicate something with possibility theory. I liked their characterisation "The general principle of these methods can be viewed as assigning imprecision to probabilistic measures". The uncertainty of the uncertainties. And that seems to be what they come up with, though it is pretty crude. I'll read more.

If you need "uncertainty of uncertainty", look up superstatistics by Christian Beck. I use similar models frequently. Beck mainly focuses on Gaussian but it works for other pdfs.

DeleteHi, Nick. Nice job with this. You are very patient with the people at WUWT. I gave up posting there a long time ago, after (a) they began deleting my comments, and (b) Anthony started making public remarks about my identity based on my (private) email address.

DeleteKip seems to have constructed an unrealistic scenario in which averaging doesn't provide any benefit. I think you do a good job of pointing out what the problem is there.

Many of the other commenters clearly don't understand what's going on. They raise a bunch of issues that are all different from what Kip is talking about in his post. Some of the claims that commenters raised in response to your example:

(A) Averaging doesn't reduce error from bias in the observations

(B) Averaging doesn't work when the underlying process is non-stationary

(C) Averaging doesn't work when the errors are non-Gaussian

IMHO those are partially wrong (A), mostly wrong (B) and wholly wrong (C).

For fun, I did some tests using the following model:

(1) Time ranges from 0 to 0.999 in increments of 0.001.

(2) Some real-world phenomenon is represented by a numerical value at each time step. These values are assumed to be "true" and can be stationary, or vary according to a user-selected function (linear or sine, with the user choosing the mean, amplitude etc.)

(3) 1000 observations are made at random points during the time period.

(4) Each observation has a bias and a random error. The biases can be identical (all observations have the same bias), or can follow a distribution with some mean and spread (user choice of uniform random bias with a mean and range, or Gaussian distribution of biases with mean and standard deviation). The random errors can also follow a distribution that's either uniform random or Gaussian.

If stations' mean bias is small in comparison to the random error, then averaging will make a large improvement. In this case, the random error is Gaussian with a mean of 0 and a stdev of 2, and the individual station biases are also Gaussian with a mean of 0.1 and a stdev of 0.3. The absolute error in the average of all observations is much smaller than the absolute error of the median station:

https://i.imgur.com/IHeM3jc.jpg

If the bias across stations is larger in proportion to the random error, the value of averaging decreases. Here the mean error is Gaussian with a mean of 0 and a stdev of 1, and the bias is Gaussian with a mean of 1 and a stdev of 2. Now the averaging process only reduces the error by about 50%:

https://i.imgur.com/5zdEvGi.jpg

The worst-case scenario is where the biases are consistent across all stations, and are not smaller than the random error. This example has a uniform bias of 1.0 across all stations, and random errors that follow a uniform (non-Gaussian) distribution from -0.5 to +0.5. In this case, the error in the average of all stations can be slightly lower or higher than the error in the median station (i.e., averaging has little effect):

https://i.imgur.com/5zdEvGi.jpg

In the case of (e.g.) temperature observations at surface stations, many efforts are made to reduce biases, and the distribution of residual station biases is probably not uniform across all stations (in fact we know that over time some stations are warming faster than the global mean and others are warming slower). So the effect of averaging should be large (like the first image above).

Sorry about the lengthy comment.

Whoops ... one of the three links to images in the previous comment was accidentally duplicated. Here they are again, and this time linked:

DeleteExample 1: https://i.imgur.com/IHeM3jc.jpg

Example 2: https://i.imgur.com/5zdEvGi.jpg

Example 3: https://i.imgur.com/u7vT2il.jpg

Ned, a few comments here:

ReplyDeleteOn (A), bias in measurement is a bit more complex than your discussion is showing, so while averaging by itself doesn't always help things, other aspects of the analysis method can.

There are at least four types of bias that I can think of off the hat, (a) instrument offset bias (this is addressed by using anomalies), (b) instrument scale bias (this is addressed by periodic calibration of instruments), (c) bias associated with spatial undersampling (d) bias associated with partial sampling of the annual period in some sites.

(c) is an issue here because the temperature field does not warm at a uniform rate in response to climate change. At lowest order, the most important effects are latitude, land/costal/marine and station elevation. Nick has partly addressed these in some of his prior posts on his blog.

(d) From memory, this is a problem for Arctic sites mostly, and is I believe more of an issue for historical data, where we didn't receive data for winter months. The best you can do for this is correctly fold in the uncertainty associated with the missing data. My perception is the resulting uncertainty is small in global temperature trend compared to the measurement uncertainty.

Regarding (C), averaging still works, but arithmetic averaging may no longer be optimal. E.g., harmonic averages or even median might yield better estimates of the central tendency of the distribution.

I thought when you mentioned random walk, that you understood what you were talking about but clearly not.

ReplyDeleteThe problem is that all your assertions ONLY work for white noise. Any real world noise approximates to 1/f (aka flicker) noise, where far from averaging reducing the noise, it has the opposite affect, of reducing the signal more than the noise.

There are ways to alleviate this issue, but as I find most people's comprehension doesn't go beyond white noise, and they sincerely believe you can get rid of noise by averaging ... I'm probably not going to get anywhere attempting to explain.

As I said in that paragraph:

Delete"With averaging, the step is of varying length, from a distribution, but again if independent and with sd σ, the expected distance is σ*sqrt(N). If not independent, it may be larger. For the simplest autocorrelation, Ar(1), with correlation factor r between 0 and 1, the distance is amplified by the Quenouille correction sqrt((1+r)/(1-r)). But it would take a very large correlation to bring the distance up close to σN."

I believe you're thinking of 1/f^n, n >2 noise. For this (not totally physical model), the standard deviation increases with the length of a window. You can still beat this down by windowing the data, then you get a 1/sqrt(number_of_windows) factors that reduces the standard error of measure.

DeleteAnyway, 1/f (power spectrum here) is typical but it's not true that "any real world noise" approximates this. Turbulence has a 1/f^(5/3) velocity spectrum for example ("Kolmogorov" turbulence).

In this particular example, there is typically a lower frequency cut-off to turbulence (related to the maximum time it takes a parcel of air to leave the ground reach the top of the convective region, then return to the ground), so a von Karman wind turbulence model is more accurate. More generally, energetics considerations always places a lower frequency limit to the 1/f^n region (otherwise you end up with infinite power as f->0 which is totally unphysical).

The Von Karman spectrum don't have this property of an increasing standard deviation with arbitrarily long increasing averaging time. Nor does any "real world" spectrum.

"Any real world noise approximates to 1/f (aka flicker) noise ..."

ReplyDeleteYou lie ...

Balanced source terms for wave generation within the Hasselmann equation

https://www.nonlin-processes-geophys.net/24/581/2017/npg-24-581-2017.pdf

(see Figures 7 and 17)

OK, so how about we estimate the noise empirically?

ReplyDeleteI've already got some code to do this for gridded data. I hold out a 3x3 block of grid cells, and then infill the field by kriging. Then I take the difference between the original value of the central and the infilled one. This should be an overestimate of the cell noise, because it also includes an contribution from noise in the surrounding cells and spatial contributions, although this may be reduced by the averaging of many cells (assuming averaging reduces the noise - if it does not, then the overestimation will be more serious, so we're going to overestimate the noise by more).

Then start from the hold-out reconstructed field, add back in different realizations of the inferred noise back using a bootstrap-like method, and average.

Ideally we'd do that at a station level rather than a grid cell level. That would need some new code (the Berkeley Earth code could probably be adapted). However to justify the effort there would need to be worthwhile paper in it, and currently I don't see an interesting scientific question that it addresses. The gridded version is easy enough that I might get round to it.

Kevin,

DeleteI wrote two posts in March, here and here, looking at residuals after various kinds of smoothing. This is using station data, along with culled gridded ERSST. I do this as part of trying to improve spatial integration; the idea is to subtract anything that can be recognised, leaving a noise residual that should have zero integral. There are various sum squares analyses.

I do the LOESS with radial basis functions.

The following is the basic fallacious reasoning that AGW deniers at places such as WUWT use when applying random walk arguments.

ReplyDeleteThere are many different flavors of random walk. If the WUWT's are referring to a pure Brownian motion random walk, the ultimate excursion is unbounded. What that means is that on a long enough measured interval, the excursion from the mean can be just about any value. This is well known as the "gambler's ruin" problem.

But much of real physics is bounded, and that's why you find instead the Ornstein-Uhlenbeck random walk, which will always revert to the mean. Predictably, the ordinary WUWT fan would ignore this version and prefer the unbounded random walk to better match their preconceived notions.

They would also avoid understanding that much of the natural variations observed are not random or chaotic at all and that a variation such as ENSO is actually a bounded oscillation forced by the tidal signal, which obviously is bounded in gravitational strength.

The only aspect that is not bounded is the growth of CO2 in the atmosphere, which Watts and Willis and the other WUWT'ers are somehow driven to deny.