I have put up version 2. It has some extra capabilities, and some improvements in layout. Like V1.1, it is eparated into a preprocessor and a main processor. You should only need to run the preprocessor once, unless a new v2.mean comnes along (or a new code version, as here).

I think users will find this version more convenient and powerful in selecting categories. You can control the year intervals for plotting and trend calculation (separately). The preprocessor now passes a file statyrdata.txt which gives the start and end of each station record, and the length (total years).

The code is here and at the document store.

Update. Carrick pointed out a bug a while ago, which I found frustrating, because I couldn't reproduce it. It turns out that it was due to bad treatment of the end year, and I couldn't see it because I was using a Jan version of v2.mean which didn't have 2010 data. Anyway, it's fixed now (thanks, Mosh) - so I'll update with v1.2.1 files.

A summary of this series of posts is here.

User variables

At the top of the file you will see this section:

#### Parameter definitions - these vars are ones that you might want to change

# Input file locations - change to path where yours are kept

input1 = "v2mean.txt"; # Made by preprocessor - should be in current dir

input3="statyrdata.txt"; # Made by preprocessor - should be in current dir

input2 = "v2.temperature.inv";

# subset selection - title names appear in graph and text output - you need to write a matching condition below

title = c("Global","NH","SH","ConUS","Airport","Rural","Urban","Cut","Kept","BC","Africa");

choices = c(1,4,8,9,10,11); # select items from "title" for this run;

# plot selection;

yr0 = 1880; # Start of the analysis period - don`t ask for trends or plots before this date;

yr1=2009; plotyrs = matrix( c(1900,yr1, 1978,yr1),nrow=2) #list year pairs, as many plots as you want;

# trend selection start and finish;

trendyrs = matrix( c(1900,yr1, 1978,yr1),nrow=2) #list year pairs, as many trends as you want;

This collects in one place the variables you are most likely to want to change.

Trend and plot years selection

Here you just list in the form c(1900,yr1, 1978,yr1) the pairs of years that you want plots and trends. This refers to trends calculations reported on the output file. Trends will be calculated for plots too, but determined by the plot years.

The format of the list is start,end,start,end... It's up to you to ensure that there are matching ends. The use of yr1 is optional, but may help when 2011 rolls around. You can have as many pairs as you like (but be reasonable).

Station subset selection

There are three steps:

1. Decide on a set of names

2. Program a set of criteria. This is the harder part. Down in the code, you'l find a list like this:

if(tc == "NH") i01 = i01[tv$lat>0];

if(tc == "SH") i01 = i01[tv$lat<0];

if(tc == "ConUS") i01 = i01[tv$country == 425 & tv$lat<51 & tv$lon > -130];

if(tc == "Airport") i01 = i01[tv$airpt == "A"];

if(tc == "Rural") i01 = i01[tv$urban == "A"];

if(tc == "Urban") i01 = i01[tv$urban != "A"];

if(tc == "Cut") i01 = i01[tv$endyr<1992 & tv$length>50];

if(tc == "Kept") i01 = i01[tv$endyr>2005 & tv$length>50];

if(tc == "Africa") i01 = i01[tv$country <190];

if(tc == "BC") i01 = grep(",BC",tv$name)

The key task is deciding on the logical expression. It helps to have some knowledge of the v2.temperature.in file. The list of attributes of the object tv, taken from this file, is:

colnames=c("country","wmo","mod","name","lat", "lon","alt","ialt","pop","ipop", "topo","startyr","endyr","length","airpt", "town", "veg","urban");

I've used three of the more obscure locations to put the startyr, endyr, length information. You can use any of this info to create subsets. For example, I've used the year info for Cut and Kept - to try to separate the stations "dropped" in the early 90's. It would be best to run these with appropriate year selection.

You can see how R extends the possibilities with the "BC" example. The .inv file follows Canadian and US sites with the province/state abbreviation. So you can search with grep, and do just those entities. You could combine then if you like c(grep(..), grep(..))

Update - OK, it was late, and I forgot 3). Which is the decision for any run on what subsets to use. That's the declaration of choices, as a list of numbers.

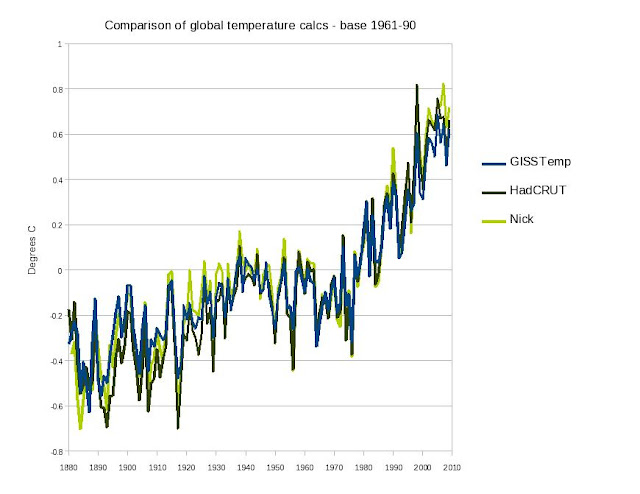

Results

Well, it's late tonight, and I wanted to post the code to solve some current problems. I'll post some result plots tomorrow.