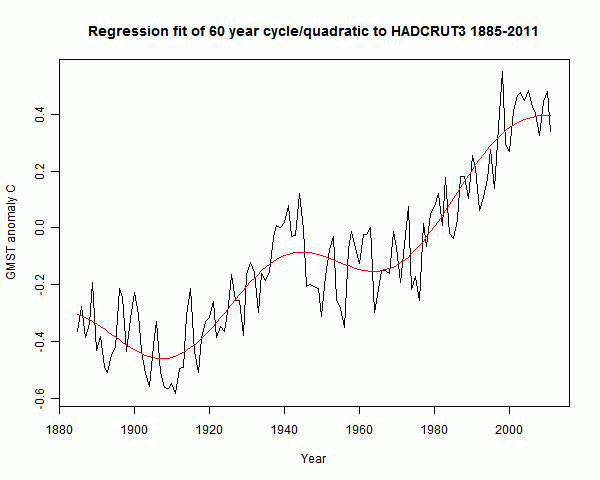

At WUWT, Girma Orssengo, PhD, has a guest post showing some of the empirical models that he often posts in comments at Climate Etc. and elsewhere. It shows a parabolic+sinusoid fit to HADCRUT3:

The key plot is the one on the right. It's often been observed that a 60-year (or thereabouts) cycle plus a linear or quadratic does fit in this way. The question is, what does it mean? And in particular, can it be used for prediction?

Girma says that it isn't meant as a basis for physics interpretation. But when challenged about prediction, he said:

" Girma says: September 4, 2012 at 12:53 am

Nylo

A model is only useful if it allows predicting future behaviour. I see no predictions by the author that could be later falsified by the real outcome.

The model established a pattern as shown in Graph “f”. From this graph, It is easy to predict the climate if the pattern continues => Little warming in the next 15 years."

So prediction does seem to be an aim.

The problem with curve fitting is that behaviour outside the range is determined by the rather arbitrary selection of basis functions. I tried a regression fit in R using the same basis functions, with similar results:

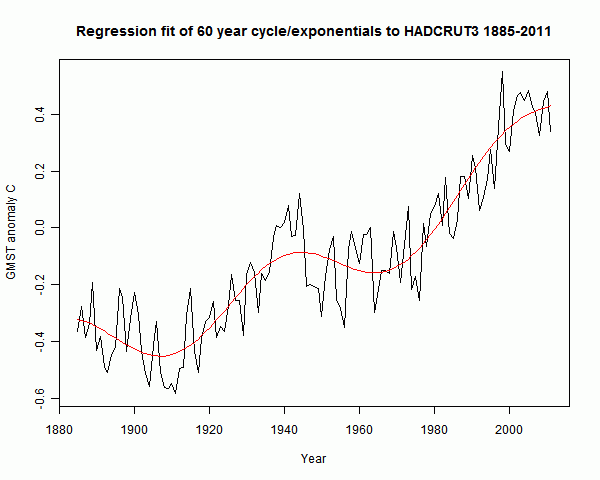

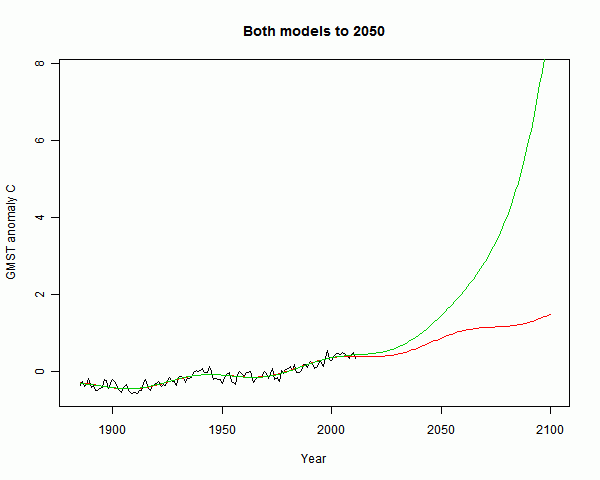

Then I tried the same sinusoid, a 60-year cos function with min at 1910, but instead of t and t² I used exp(t/20) and exp(t/100):

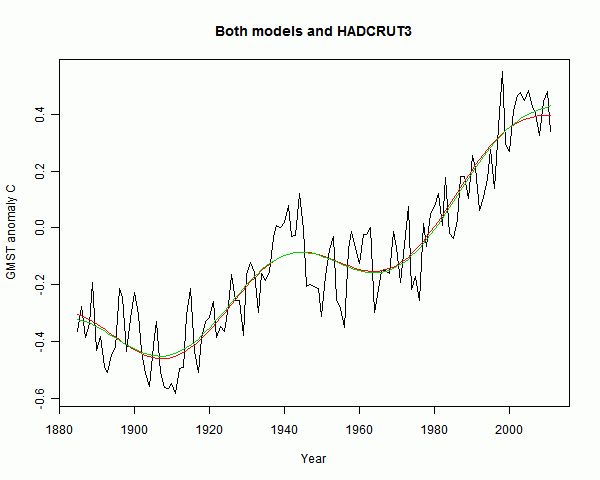

The fit is very similar, and it's not easy to declare that one is better than the other. Here they are on one plot:

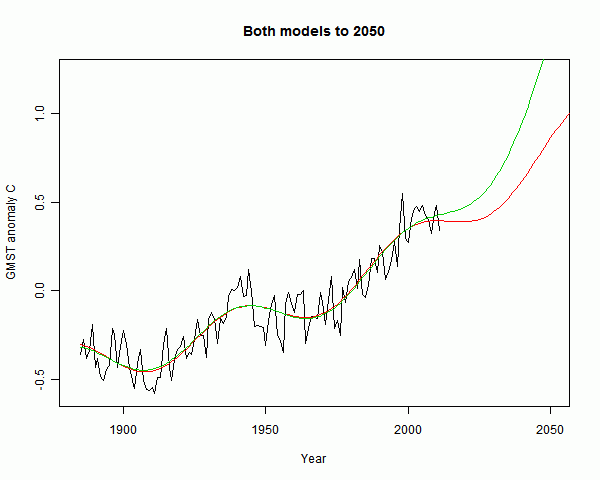

But they give very different predictions. The divergence looking forward to 2050 is not so huge:

But looking forward to 2100, the exponential model (green) runs right away, warming by 8°C. Grim.

The bind with fancy curve fitting is that it really can't be used much outside the range. And within the range, it isn't telling you anything you didn't already know, unless you are confident that you can make a useful deduction about the physics.
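The comparison above can be reproduced in miniature. The sketch below is a hedged illustration, not the R code behind the plots: it is written in Python, it uses a synthetic series (not HADCRUT3), and the helper names `solve`, `fit`, `predict` and `rmse`, the time scaling, and the coefficients of the synthetic series are all assumptions made for the example. It fits the same 60-year cosine together with either a quadratic or the two exponentials, then prints the in-sample error and the extrapolated values.

```python
import math

def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit(basis, years, temps):
    """Ordinary least squares on arbitrary basis functions, via the normal equations."""
    rows = [[f(y) for f in basis] for y in years]
    k = len(basis)
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * v for r, v in zip(rows, temps)) for i in range(k)]
    return solve(ata, atb)

def predict(basis, coef, year):
    """Evaluate the fitted curve at one year."""
    return sum(c * f(year) for c, f in zip(coef, basis))

def rmse(basis, coef, years, temps):
    """Root-mean-square residual of the fit over the given years."""
    sq = [(predict(basis, coef, y) - v) ** 2 for y, v in zip(years, temps)]
    return math.sqrt(sum(sq) / len(sq))

def centuries(year):
    # Assumed scaling: time in centuries from 1950, to keep the normal equations well scaled.
    return (year - 1950) / 100.0

def cycle(year):
    # 60-year cosine with its minimum at 1910.
    return -math.cos(2 * math.pi * (year - 1910) / 60)

QUADRATIC = [lambda y: 1.0, centuries, lambda y: centuries(y) ** 2, cycle]
EXPONENTIAL = [lambda y: 1.0,
               lambda y: math.exp((y - 1950) / 20),
               lambda y: math.exp((y - 1950) / 100),
               cycle]

years = list(range(1880, 2012))
# SYNTHETIC anomalies (assumed coefficients, not HADCRUT3): quadratic + cycle,
# plus a deterministic wiggle standing in for noise.
temps = [-0.1 + 0.5 * centuries(y) + 0.4 * centuries(y) ** 2
         + 0.12 * cycle(y) + 0.08 * math.sin(2.3 * y) for y in years]

for name, basis in (("quadratic", QUADRATIC), ("exponential", EXPONENTIAL)):
    coef = fit(basis, years, temps)
    print(name,
          "rmse=%.3f" % rmse(basis, coef, years, temps),
          "2050=%.2f" % predict(basis, coef, 2050),
          "2100=%.2f" % predict(basis, coef, 2100))
```

Both runs go through the same least-squares machinery; the only thing that changes is the list of basis functions, and that list is what determines the behaviour beyond the fitted range.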

So what about linear regression - very commonly used? Well, it isn't "fancy" curve fitting. But the underlying principle is there. If it is a time series, you need to be open to the possibility that there is a real underlying rate of change. Then regression (curve fitting) can estimate the rate for you. But it doesn't prove that the linear model is right.

One fake skeptic on Facebook--and one I gather with at least some math chops--wrote "with the kind of statistics [climate scientists] use, they could show anything." Of course, in the same post she complained that urban heat island effects were ignored...

Swanson did a graph of the 20th Century in his PNAS paper on natural variability. The claim is there is no CO2 signal in Girma's graph. Swanson's dashed line is obviously the driver of 20th Century warming. If that could be pulled out of Girma's graph, what would the graph look like?

Swanson's curve looks pretty like Girma's underlying quadratic (or the fitted exponentials). So I think taking it out would pretty much leave the apparent 60 year oscillation plus noise.

One of the ironies here is that Girma's model is pretty much in line with AGW driving. The fundamentals of GHG emission say that it's more exponential than quadratic, but I've tried to show that it can be hard to tell the difference over an interval. As for the 60-year "cycle" - folks at WUWT have lots of ideas. But you can't say that two ups and downs prove periodicity.

Nick: But you can't say that two ups and downs prove periodicity.

It's in much longer data-length proxy data too. See e.g. here.

(Note we don't have to discuss what the proxy measures to say it's present in the proxy index.)

My condolences on trying to make sense of that WUWT post.

Carrick, I'm open to the idea that there may be a sixty year cycle. I'm just saying that you can't prove it with 135 years of data. If there was a physical argument behind it, the curve fit would be more interesting.

See also this figure, same study.

There are a whole host of mechanisms offered up as causes for different parts of the oscillations at different times. Changes in the earth's core and magnetic field; global dimming and brightening; sulphate aerosols; synchronized chaos; world wars; etc. I sincerely doubt there is a 60-year cycle. I do think there is a conspiracy of coincidences.

JCH, I don't see how it persists for proxies that are more than 1000 yrs in duration. That one's a bit hard to explain away.

Played this game with Girma 18 months ago

http://rhinohide.wordpress.com/2011/01/17/lines-sines-and-curve-fitting-9-girma/

http://rhinohide.wordpress.com/2011/01/18/lines-sines-and-curve-fitting-10-nls/

http://rhinohide.wordpress.com/2011/01/19/lines-sines-and-curve-fitting-11-more-extrapolation/

http://rhinohide.wordpress.com/2012/02/16/lines-sines-and-curve-fitting-14-more-extrapolation-revisited/

Yes, Ron, I see I could have just linked. I noted this comment:

"Girma, you seem quick to claim victory … slow to acknowledge a defeat :)"

He's still going.

Just noticed that the R-generated plots were Girma's plots. He was still using excel plots back then. Wonder if I can take credit for teaching him R? :lol:

Ron, you have more interest in Girma, than probably anybody, except maybe his mom, who's wondering when he will stop with the squiggly lines and get a real job.

One of the obvious problems with the extrapolation is, even if you have a 56 year cycle present in global mean temperature that doesn't imply it's stationary, in fact it's probably not. There are other cycles with nearly the same frequency (e.g., length of day), and there are well-known nonlinear phenomena of entrainment, frequency locking or phase slipping and good old-fashioned amplitude suppression that would cause the amplitude to vary over time.

And the point of that is any forcing with a similar frequency content will strongly interact with a putative oscillation at this frequency.

There simply is no way to know, sans a real model, how the amplitude of the putative oscillation will vary in future years. These sorts of extrapolations in the absence of a physical basis are totally useless.

Thus ... :D

Testing an astronomically based decadal-scale empirical harmonic climate model versus the IPCC (2007) general circulation climate models (2011)

Theodor Landscheidt invented that game. Go look at USENET sci.environment. Stoat had some interesting comments on how it is done.

Nick

"arbitrary selection of basis functions"

The sea level rise is quadratic. As a result, the secular GMST should also be quadratic.

The selection of the basis functions is not arbitrary.

Girma,

What's the basis for saying that sea level rise is quadratic? That's just another function fit.

Nick

Here is the fit for the sea level rise and its R-squared is 98.9%.

http://orssengo.com/GlobalWarming/SeaLevel.png

Don’t you think both the Secular GMST and the Secular Sea Level Rise should have the same function form?

Why do you want to fit the more complex exponential function instead of the simpler quadratic function to the data?

Differentiation (rate of change of the variable in question) of a quadratic function gives you a linear function, but the differentiation of an exponential function still gives you another exponential function. They are not equivalent.

What do you think?

Girma,

Whether sea level or GMST, you can't say that they follow a quadratic, in any meaningful sense, just because of an R² value. There is no uniqueness. Lots of other curves can do as well in R². And arguing that one is established because it is simpler than another is just human subjectivity (or handwaving).

You can sometimes argue that a curve fit affirms some form based on a priori theory, and even that it supports some range of parameter values. But "simpler" isn't such a theory.

I agree

Nick

I derived the GMST function from the 30-years least square GMST trend for the 20th century.

The GMST function is just the integration of the GMST trend.

Well, that's guaranteed to give a quadratic.

For any data.
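To spell out the step behind that remark (a sketch, assuming the sequence of 30-year trends is itself summarised by a straight line in time; the symbols $r$, $a$, $b$ and $T_0$ are introduced here for illustration only):

$$
r(t) \approx a + b\,t
\quad\Longrightarrow\quad
T(t) = T_0 + \int_0^{t} r(s)\,ds = T_0 + a\,t + \tfrac{1}{2}\,b\,t^{2}.
$$

Under that assumption the quadratic form comes from the integration itself, whatever series the trends were computed from.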

For a linear data.

Note that I calculated the least square trends for every year from 1885 (for the 30-years trend period from 1870 to 1900) to 1996 (for the 30-years trend period from 1981 to 2011).
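The windowing described in this comment can be sketched as follows. This is an illustrative Python reconstruction, not Girma's own calculation: the function names `ols_slope` and `rolling_trends`, and the 15-year half-window (a window such as 1870 to 1900, centred on 1885), are assumptions read off the dates quoted above, and the input series is a synthetic straight line.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def rolling_trends(years, temps, half_window=15):
    """Map each centre year to the trend over [centre - half_window, centre + half_window]."""
    trends = {}
    for i in range(half_window, len(years) - half_window):
        lo, hi = i - half_window, i + half_window + 1
        trends[years[i]] = ols_slope(years[lo:hi], temps[lo:hi])
    return trends

years = list(range(1870, 2012))
temps = [0.005 * (y - 1870) for y in years]   # synthetic straight line, not real data
trends = rolling_trends(years, temps)
print(min(trends), max(trends))               # first and last centre years
```

For a straight-line input every window returns the same slope, which makes the function easy to sanity-check before applying it to a real series.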

Nick

Your exponential model, after about 2002 and until 2011, diverges from my model. That is the butterfly flutter that caused the hurricane that caused you to say “Grim” because it gave you 8 deg C for 2100.

Remove that flutter and let us see what you get.

Girma,

The test is not whether it is identical to your model. The test is whether you can say your model is a better fit. In fact, so much better a fit that my model can be ruled out as not fitting.

Nick

My correlation coefficient between the observed GMST and the smoothed GMST for HADCRUT3 from 1885 to 2011 was 0.94. What is yours?

Girma,

FWIW, I got 0.9301 for your model and 0.9308 for mine. The difference is negligible.

But I don't see that correlation coefficient is a suitable figure of merit anyway. It's normally something that you compute between two stationary random variables. You have to divide by the sd of each, and I don't see that the sd of the fitted curve makes much sense.
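For reference, the quantity under discussion can be written out. The sketch below is a plain Python version of the Pearson correlation coefficient; the name `pearson_r` and the sample numbers are made up for illustration and are not the HADCRUT3 values quoted above. It shows the division by the spread of each series, and one consequence: rescaling or offsetting the fitted curve leaves the coefficient unchanged, so a high value does not by itself show that the curve tracks the data closely.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation: covariance divided by the product of the two spreads."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Made-up numbers standing in for observations and a fitted curve.
observed = [-0.30, -0.25, -0.10, 0.05, 0.00, 0.20, 0.35, 0.40]
fitted = [-0.28, -0.20, -0.12, 0.00, 0.06, 0.18, 0.30, 0.42]
distorted = [3.0 * f + 1.0 for f in fitted]   # wrong amplitude and wrong offset

print(pearson_r(observed, fitted))
print(pearson_r(observed, distorted))         # same value, up to rounding
```

A residual-based measure such as the root-mean-square error would separate `fitted` from `distorted`, which the correlation coefficient cannot do.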

Girma,

Just checking on the discrepancy there, I think you must have used the variance adjusted version, Hadcrut3v. With that I get 0.9404 for your model, 0.9411 with mine.

Thanks Nick.

Nick

Do you believe IPCC's 0.2 deg C per decade warming for the next two decades projection?

I strongly don't.

The IPCC projections are in chap 10, and given with appropriate error bounds. In the SPM they said:

"For the next two decades, a warming of about 0.2°C per decade is projected for a range of SRES emission scenarios."

No error bounds are given. I don't believe it will be exactly 0.2°C per decade, but it seems a plausible order of magnitude. Certainly your curve fit does not help there.

You have already plotted my projection (Red curve) and it shows flat trend until 2025.

Nick why do you think Lucia insists on testing exactly 0.2C?

What do you think would be a good test, to test the 'about 0.2C'?

Strangely enough (or maybe not so strangely) many others have complained about this exact same issue

http://moregrumbinescience.blogspot.com.au/2008/08/testing-ideas-2.html

It's completely bizarre.

Cheers

Nathan

Nathan

What about the cooling of the Multidecadal Cyclic GMST shown in the first figure of this post?

Which I believe is related to Figure 4 of the following paper:

http://www.meteo.psu.edu/holocene/public_html/shared/articles/KnightetalGRL05.pdf

Nick: No error bounds are given.

If I think back to how many times I've had drilled in my head something to the effect "in empirical science, estimates of central values should always be accompanied by the uncertainty in that quantity. Only numbers that are exact should appear without an uncertainty value."

Apparently the IPCC didn't get that memo.

Anyway 0.2° was their forecast and "not a plausible order of magnitude somewhere around 0.2°C per decade." You just can't rewrite the text with appropriate waffle words for other people when they've gotten it wrong. To me this is just another example of another unfortunate and related tendency, namely to understate the uncertainty in estimates like this (as with this case, by leaving that analysis out entirely).

Carrick,

Yes, I agree that it should. This was, however, the SPM, and scientists don't have a lot of control on how it emerges.

One can criticise the omission. But it doesn't make sense to undertake an analysis assuming they are zero. There are plenty of error indications in Chap 10.

Carrick,

How is 'about 0.2C for the next two decades' (which is 2007- 2027?) the same as 0.2C over the last 30 years (or some other recent interval)? She can't test what she's trying to test, because she has ignored the uncertainty and the future hasn't happened yet.

It's a very strange test. And many people have explained to her why it's bizarre from Grant Foster to James Annan to Robert Grumbine.

Nick didn't re-write the IPCC's words, he quoted verbatim.

Lucia is doing the re-interp because she doesn't like what the IPCC wrote.

Figure SPM 5 gives error bounds for the projected increase. In fact almost every other projected temp increase in the doc gives error bounds. It's hilarious that she chooses to test the one statement that doesn't give an explicit error bound. It implies it several times with the repeated use of the word 'about'. But in her desperation to 'disprove the IPCC' she just ignores what the IPCC actually says.

Did the paper she wrote ever get published?

Nathan

Carrick, read through this commentary again. It's the same problem for Lucia. She's been banging on about this for about 5 years (and no I don't mean exactly 5). She's been told many times why her analysis is meaningless, yet she refuses to acknowledge it.

http://julesandjames.blogspot.com.au/2010/05/assessing-consistency-between-short.html

Nathan

I don’t agree with IPCC’s claim of 0.2 deg C per decade warming for the period 2000-2030. This projection is not supported by the data. The climate pattern is governed by the enormous heat stored in the oceans. This pattern has been unique for more than 100 years as shown in Nick’s graphs above, and it is not going to change in the next decade or two. This pattern, this data, says little warming in the period 2000-2030.

As a result, the warming trend for the period 2000-2030 should be closer to zero than 0.2 deg C per decade.

Carrick

Here is the critical comment by James in that post. He summarises far more eloquently the pointlessness of Lucia's exercise than I ever could:

"James Annan said...

Lucia,

That's an awfully elaborate narrative (or series of narratives) that you've got there. It rather seems like you are trying to interpret things in a way that enables you to put "IPCC" and "falsified" in the same sentence, rather than investigate how well the IPCC models match up to reality.

BTW, it might be worth noting somewhere that the IPCC figure you refer to never even claimed to present the GCM projections, rather it presents the output from a simple climate model (SCM) which was tuned to emulate the GCMs. This precludes the possibility of that graph representing short-term natural variability in any way whatsoever.

Regarding the multi-model mean, there are some confused statements in the literature, but the following are actually trivial truths: (1) the MMM will be better than an average model for anything you choose to look at, (2) the MMM is very unlikely to be better than all models in matching the actual temperature trend, and of course (3) the MMM is going to be biased one way or another, it is basically impossible for it to coincide precisely with the truth. So rejecting the "nill hypothesis" that the MMM coincides with the truth would be a pretty worthless exercise.

Something that we haven't discussed here, but also seems relevant, is the difficulty of accurately determining an underlying trend in a short time series with unknown autocorrelation structure. This was a major error in the Schwartz paper a couple of years back. Using monthly data and fitting AR(1) enables you to deduce a rather low level of multi-year variability, but it is far from clear that this is actually correct. An obvious test would be to apply the same analysis to short sections of GCM output and see how often the 95% confidence intervals actually exclude the forced trend. I'd be interested in seeing the results of this."

Nathan

Nathan, one thing you may not be aware of is Lucia folds weather noise in with model noise using quadrature. Hence it makes sense to use a fixed 0.2°C in that sense. Anyway, 0.2° is a forecast, like "it's going to be sunny and mild". Not being sunny and mild doesn't mean you throw away your weather models. Knowing how well you can forecast tells you how useful the forecast is. It does have policy implications.

It's not useful to say "well it could be cold and rainy too, and you didn't test that too" because that wasn't the forecast. Nick is right it could be a 0.1°C/decade instead of a 0.2°C/decade. But so what? That's not the number they chose.

Regarding James's comments, he makes the same mistake you and others have, which is conflating falsifying a forecast with falsifying a model. I understand the distinction, I think James does too, I think he just did a knee-jerk reaction. Lucia definitely understands the distinction too. She also doesn't rely on Schwartz (she Monte Carlos to verify her statistical methods and has been doing so for as long as I've been on her blog).

I don't think the mean of the IPCC model ensemble is particularly that useful, I agree with James on that, but many other people use it besides Lucia, including the writers of AR4 and other climate scientists, so it's a bit of a one-sided criticism to level it at her as if she invented it, and not acknowledge it's a generic problem with the field. Nor really is it inappropriate for somebody to say 'they use this metric, so I'll test this metric'. (Honestly I think James is a bit sexist and it shows up in the sort of one-sided criticisms such as we see here.)

Carrick,

It makes no sense to test 0.2C, to disprove or falsify the IPCC when they said 'about 0.2C'. Everywhere in the doc they use the word 'about' it's clearly saying that there is uncertainty there. Rather than trying to imply that 0.2 is the only rational choice, why not actually attempt to give it some error bounds? I suggested 0.15 - 0.25 as the test, that range is actually 'about 0.2'. Just eyeballing her results, they pretty much pass that test. Which is probably why she's resisting adding in some uncertainty into the test target.

I am not conflating the MMM with models. In Lucia's last thread I told her you can't use the test on the MMM to test anything about the models, which is a big part of why her test is pointless.

"but many other people use it..." Like whom?

It's a marketing strategy for lukewarmerism. That's what she does. She attempts to give people who want to believe in lukewarmerism something to hang their philosophy on.

This is why you're claiming James just had a 'knee jerk' reaction - it's you trying to play down his dismissal of her idea. Remember she's been doing basically the same thing for 5 years, people have seen what she's been doing for a long time. And they don't think it's very good.

And James is sexist? Good grief!

Carrick, you get what you need from Lucia, which is a way to say that Lukewarmerism could be right. People working in the field think it's rubbish and she can't get her work published. That's a pretty clear indication that it's not useful.

You even make the mistake yourself of thinking that the recent trend implies a lower sensitivity. Not so, what you are looking at in this data is transient sensitivity, not equilibrium sensitivity. You need to read Isaac Held's blog.

Nathan

James is human, and yes, I think among the data points that he is human, ... I think he's a bit of a sexist. It's not that uncommon a trait of European males in general, at least from my experience or those of my female colleagues. (There is a lot of behavior that I think is bigoted, and it is interesting that people always come up with excuses for why it's right).

Anyway this is my opinion, you're allowed to assume that James isn't sexist if you want, and whether that makes any difference to you, I didn't come to this conclusion from seeing his reaction to Lucia's comment.

Carrick, you get what you need from Lucia, which is a way to say that Lukewarmerism could be right.

Assumption of motive on your part. My own SWAG is climate sensitivity is probably around 2.5°C per doubling of CO2, which isn't that far below the model ensemble, and I think 3°C per doubling has by no means been ruled out by data. If there are problems with the forecast they speak to uncertainty in forcings, unmodeled short period variability in climate rather than sensitivity. I've said this a number of times before. On Lucia's blog.

Even if she accepts my criticism, that doesn't mean she can't continue to study a statistical problem she finds interesting, regardless of whether she plans on publishing it or not.

There isn't anything wrong with what Lucia's done, and it doesn't bother me that James after cursory glance at her work and a brush off comment thinks otherwise. I'm technically savvy enough to know how to do this type of analysis, and if anything, her uncertainty bounds are slightly conservative.

People working in the field think it's rubbish and she can't get her work published.

"People in the field?" Something doesn't have to be publishable to be interesting, much of what is published isn't interesting, and you are doing an extreme overgeneralization here.

The methods she uses are quite standard, not "rubbish" that makes it not publishable (at least in long article format, though if she were interested I bet she could get it published as a short note or letter). You really frankly are completely ignorant of statistical methods and based on a few knee jerk comments by a few researchers, think you are suddenly fully informed. I suppose the reason you're over on Nick's blog posting this, is you've gotten tired of getting spanked when you try and post your silly arguments on her blog.

You even make the mistake yourself of thinking that the recent trend implies a lower sensitivity

The 0.2°C/decade forecast is based on the assumption of a 3°C per doubling of CO2 equilibrium model sensitivity. I wasn't involved in that forecast, so if you think somebody is mistaking transient for equilibrium sensitivity, that would be the people who produced the forecast.

Carrick,

That was a load of waffle.

It seems you're content with her claims that she 'falsified the IPCC'. But it's lightweight.

I think that just shows a serious lack of skepticism on your part.

It's also pretty lame to bring in claims of sexism.

Carrick.

To claim that the equilibrium sensitivity in the models is too high, because they run higher than what we have observed is a baseless claim.

I think you need to actually read what I said before commenting, starting with the part where I said the failure of the 0.2°C forecast doesn't necessarily indicate that the equilibrium sensitivity is too high:

I think 3°C per doubling has by no means been ruled out by data.

Seriously, how do you expect anybody to take you seriously, if you can't even parse what they are saying in plain English? As it stands, that's why I don't.

As to lame, I'd be careful what qualifiers you use, since lame is actually a pretty good description of somebody posting on a third-party blog behind somebody's back because, I presume, he's tired of getting spanked when he posts someplace else.

Carrick,

On Lucia's blog you claimed that the sensitivity was lower because the models were running high. If you think otherwise, fine.

You keep saying I got spanked and moved here.

She asked me to leave. Or rather said she wouldn't talk to me anymore, so I left.

It's not 'behind her back' It's the same criticism I left on her blog, that she refused to address.

Carrick you also need to address the fact that the IPCC projection is for the next two decades, most of which hasn't even happened yet.

The projection comes from looking at the mean slope from the models over 20 years. You can go to 10 years, and find the slope isn't very much different.

I think it's a fair criticism to say, 10 years isn't long enough to test the models, given the problems we know they have with short period variability. Suggesting you need 20 years to test the model is not an unreasonable argument. That is another comment I've made on her blog, and not just once.

You keep making the mistake of assuming that because I frequently find problems with your arguments, that I always disagree with your conclusions. You're one of these people with an extreme problem with confirmation bias, and you'd do better with a bit more critical thinking. (One of the precepts of critical thinking is being self-aware of your own biases and challenging them.)

Went back and looked...

CMIP's A1B scenario 2001-2012 median trend is 0.20°C/decade.

The median over all models for A1B is 0.24 °C/decade. For A2, all models, it's 0.21°C/decade. For B1 it's 0.22°C/decade.

Since their number is a bit "low ball", it looks like they weighted their forecast on their intuition about which model is more likely to be correct (of course, that's what a forecaster does).

Hey Carrick, what's the uncertainty interval... See the uncertainty in the graphs? That's the bit Lucia has left out.

If you want to blame somebody, blame the IPCC writers who wrote 0.2°C without an actual error bound around it. Be careful or you'll get into an argument over what "is" means. We've already had the discussion of what "about" means, so that one's taken. ;-)

Lucia does that with the multiple runs on the models, like she's doing right now: If you want to look at just how cartoon-like James Annan's criticisms were to Lucia's actual thinking, compare and contrast his comments to her actual comments and where she is going with this.

Where Lucia and I diverge is I don't think the short-period weather is very interesting until the models get good enough to be able to actually predict it. We know they have trouble on scales less than 5-years, the fact that annual noise is too high in the models, is just a reflection IMO of the breakdown in the models (probably related to the physical scale that was forced on them by the available computer memory at the time).

I believe this means, for strict forecasting, that these models do unquestionably fail to replicate Earth-like climate, and in ways already documented in IPCC AR4.

What that means for the amount of certainty in the model outcomes... well, I think the hope was that on a 20-30 year interval they might be still be able to get the trend right enough though they have problems at shorter time periods (but it's actually more than a future model in say 15-years using the exact forcings they have forecast with won't agree with the 30 year trends predicted from the AR4 models, starting say from 2001).

Carrick, you gave the estimates from the projection graph. On that graph (Figure SPM 5) it has the uncertainty intervals. I blame you for ignoring it. You are clearly ignoring the uncertainty.

She can test the recent temps against 0.2C if she likes, but that is not an honest representation of the IPCC projection.

For 2001-2020, the model ensemble numbers go to 0.23 for A1B, 0.21 for A2 and 0.23 for B1.

As I said, 10 years (-ish) versus 20 years doesn't affect the trends very much. Lucia knows this, the forecasters know this, you would know this if you stopped being such a reactionary and took a little time to look at the data yourself.

It doesn't affect the trends much, but it affects the uncertainties.

On Nathan's arguments, I've been critical of Lucia's focus on testing 0.2 C/dec in isolation. I think there's nothing wrong with doing that on its own, but it doesn't relate to any sensible account of model performance. The logic seems to go - well the SPM said ... and they didn't mention error bounds. Well, perhaps they should have - that's a criticism. But when you've beaten to death the analysis vs 0.2, you're still none the wiser about whether models are actually failing - all that has been tested is the adequacy of a statement in the SPM. And there are more direct ways of making that criticism.

They give error bounds in the graphical representation.

I agree that you can test against 0.2C, but that doesn't 'falsify the IPCC' or whatever she claimed she was doing.

A: I assume you are referring to the model ensemble? She's looked at that too.

Nick & A (I assume Nathan):

I don't personally find whether the 0.2°C trend (with or without error bounds) meets expectations to be that interesting. I don't think there's much difference between Nick and me on that issue (I could pretty well have written what he wrote). It's not something I would personally test, but I suppose Lucia's interested in the statistical methods themselves. People often do blog about things that aren't that exciting, but are just nice applications of statistical methods.

I also don't like the model ensembles, since we already know that "size matters" here, and some models are clearly poor performers that IMO shouldn't have been included (I realize there's political reasons that trump the science here). Like the 0.2°C, I don't think you can fault IPCC AR4 results that Lucia decides to test. They didn't put errors on 0.2° and they somehow decided that the ensemble mean of this particular suite of models would be "truth centered". (James Annan has some very directed comments on that issue.)

What Lucia's done recently in comparing individual models is more apropos IMO, and it's the approach I would have taken. Except I'm convinced that models failing to validate over 10-year periods doesn't imply they won't validate over longer term trends. I do think it does mean something that they don't validate on 10-years, but I don't think we have enough information to assign clear meaning to it yet. (Probably have to wait until we have 20-years of data to test against.)

Nick, as I mention the models themselves predict temperature trends greater than 0.2°C, presumably this relates to the sensitivity of the models (as well as the forcings).

So while you could say it could be smaller, that does pull implications in along with it.

I do agree about the comment about larger uncertainties for the shorter period trends. For the recent studies she's doing the individual model's variability (and real weather variability) are fed into the analysis.

If you spend some time looking at the AR4 comparisons of ENSO it's pretty clear they get this wrong.

In the comment that I linked to from Lucia, she makes the point that besides getting this wrong, the model short-period variability is actually too high and in spite of this, they still fail to validate against real observations over a roughly 11-year period.

I think the meat of the problem is the models don't produce trustworthy short-period behavior---it's not Earth-like as Lucia says in her comment, and she's right.

The problem we're at right now reminds me of some modeling I did where people were stuck using very small array sizes based on computer memory availability. When I came into that field I had access to a high-end vector processing computer, and one thing I did was write modeling code that bumped up the number of discretizations. Probably not to any surprise to you, increasing the discretizations can make a big difference.

In this particular case, it was pretty easy to show why their codes were breaking down and giving wrong results--as I pointed out to them, straightforward WKB calculations yielded an estimate of the wavenumbers required for an accurate model, and they were simply using too coarse of a grid to fit that physics into it. (They of course came immediately to the conclusion that the WKB was wrong, even though I could replicate the WKB predictions with my higher resolution grid model, some people are just dumb).

I think the problem for (older AR4) models is precisely the same---the grids are too coarse to accurately portray climate on that spatial and temporal scale. There may be other problems with the models, but until very recently climate models couldn't directly resolve eddy physics, and trying to parametrize that at "source region" scale lengths is a very questionable approximation to make. I also think they know all of this, and none of this is a surprise to people like Isaac Held.

Carrick.

This is where the problems lie, Carrick, yes. I am sure people are working on getting better models. I guess we'll have to wait until the next IPCC report - or have you kept a close eye on model progress from the literature?

What I am curious about (and I have asked her this directly before) is why she doesn't try and use the real world data to see if the models are running too hot because they've incorrectly addressed ENSO and TSI etc.

This would be a far more useful test of the models.

Carrick

Her latest posts are much better, and they include caveats about this implying that particular aspects of the models are wrong, rather than a blanket 'the models are wrong'.

Much better.

What will be more exciting is comparing the AR4 results to the newest results...